Posted on February 5, 2026


The bottleneck has moved from writing code to verifying it. CI must move with it: run where agents run, and run fast.

It’s the same pattern everywhere: we’re changing how we architect systems, how we run CI, and how we build in general so that AI coding agents can work effectively. I wrote about one side of that a few weeks ago (architecture for disposable software). Shifting CI left is another.

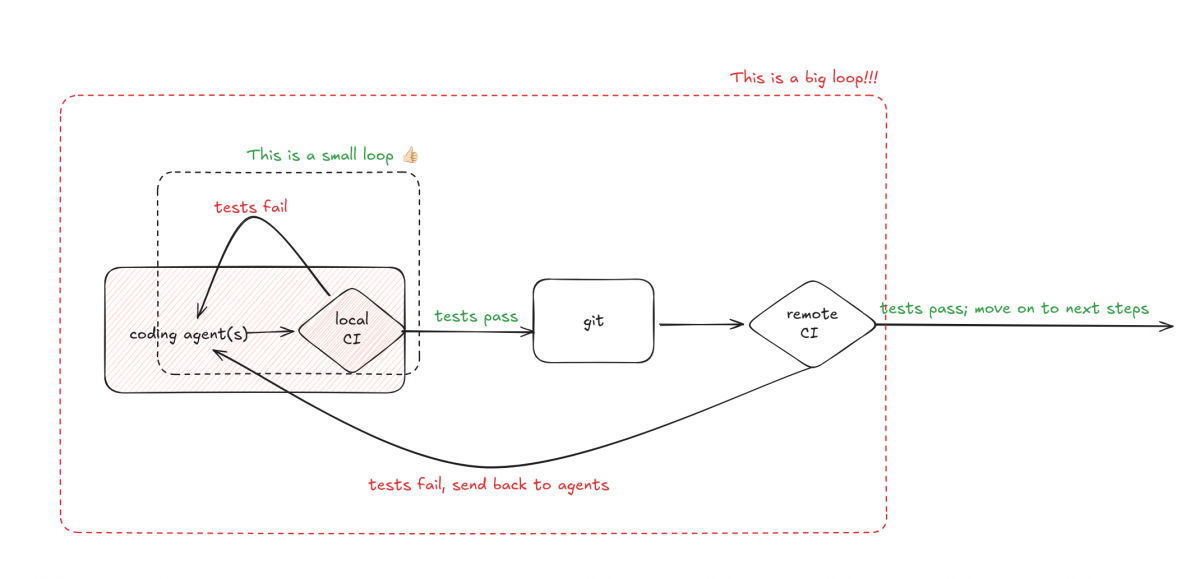

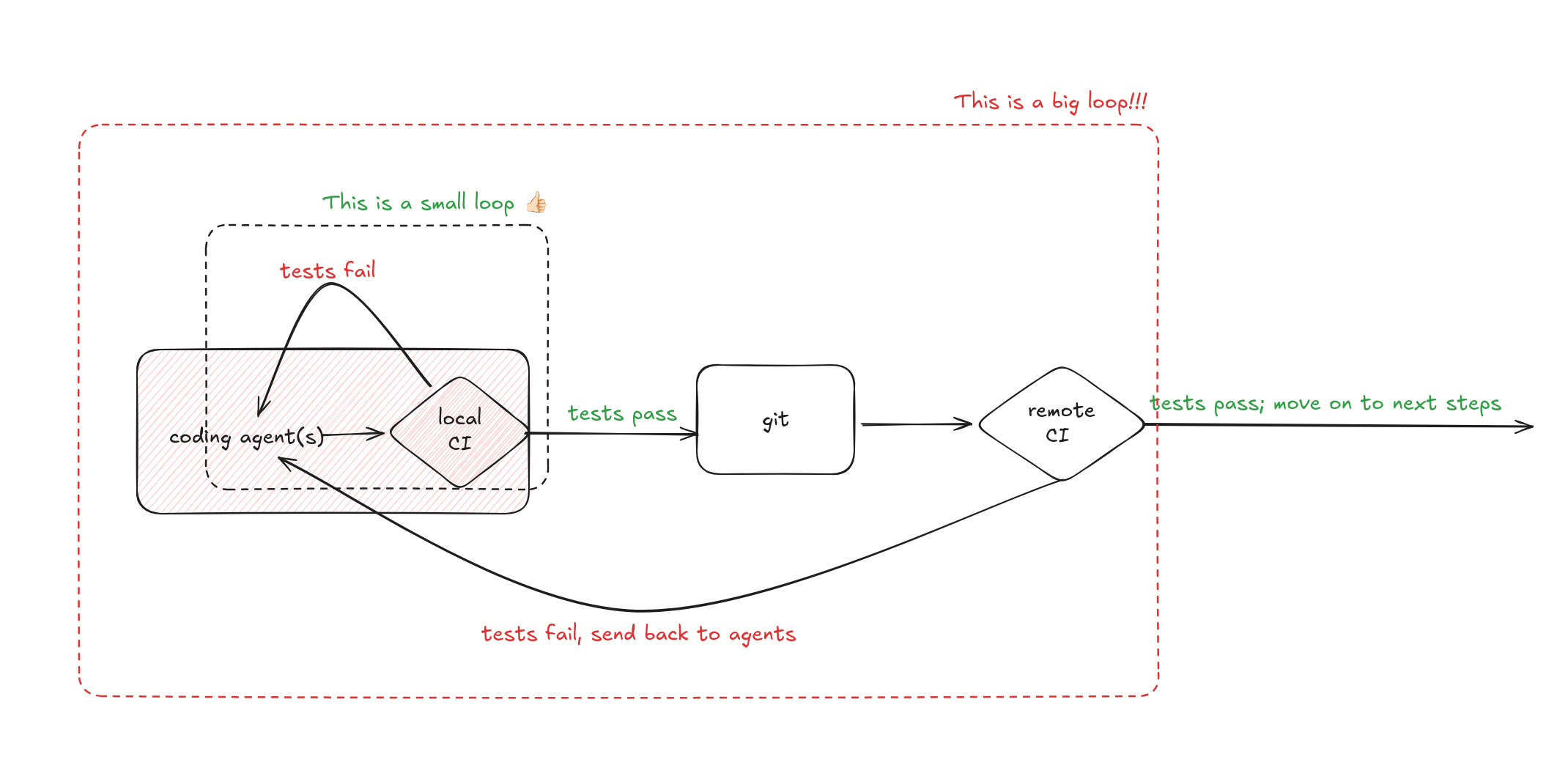

What we want is a tight feedback loop: the agent makes a change, runs CI locally, gets a pass or fail, and adjusts. No push, no waiting on the remote pipeline. A picture is worth a thousand words. Here’s one.
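Here is a minimal Python sketch of that loop. It isn’t tied to any real agent framework: `apply_change` is a hypothetical stand-in for the agent editing the working tree (it receives the previous failure output), and “CI” is assumed to be a list of commands that can run inside the agent’s sandbox.

```python
import subprocess
import sys
from dataclasses import dataclass
from typing import Callable


@dataclass
class CheckResult:
    passed: bool
    output: str  # combined stdout/stderr of the first failing check


def run_local_ci(commands: list[list[str]]) -> CheckResult:
    """Run each check in order and stop at the first failure (fail fast)."""
    for cmd in commands:
        proc = subprocess.run(cmd, capture_output=True, text=True)
        if proc.returncode != 0:
            return CheckResult(passed=False, output=proc.stdout + proc.stderr)
    return CheckResult(passed=True, output="")


def agent_loop(
    apply_change: Callable[[str], None],  # hypothetical agent step: edit code given last failure
    commands: list[list[str]],
    max_attempts: int = 5,
) -> bool:
    """Change, verify locally, adjust. Returns True once all checks pass."""
    feedback = ""
    for _ in range(max_attempts):
        apply_change(feedback)
        result = run_local_ci(commands)  # local run: no push, no remote pipeline
        if result.passed:
            return True
        feedback = result.output  # the red signal goes straight back to the agent
    return False


if __name__ == "__main__":
    # Hypothetical check; swap in the project's real lint/test commands.
    checks = [[sys.executable, "-c", "print('lint ok')"]]
    print(agent_loop(lambda feedback: None, checks))
```

The property that matters is that each attempt’s feedback comes from a local process, so a failed attempt costs only as long as the checks take, and the failure output goes back to the agent in the same session.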

The bottleneck has moved from coding to verification.

We used to use AI to assist us. Now we’re optimizing everything so AI agents can be more effective, and the bottleneck has moved.

Previously it was fine to code, push to Git (or whatever source control you use), and wait for CI to kick in. Most of the time was still spent coding, so a few minutes of feedback delay was acceptable.

Now, coding is the least of our problems. We want faster feedback. More precisely: agents need faster feedback so they can iterate without burning context and time on round-trips to a remote pipeline.

We all agree CI is crucial for fully autonomous agents. The question is where it runs and how fast it needs to be.

Agents must run CI themselves, and CI must be fast.

1. Agents should be able to run CI by themselves.

The existing CI (in the cloud, on merge, etc.) should still be there. Think of it like client-side validation vs server-side validation: we wouldn’t remove server-side validation just because we added client-side checks. Local or agent-run CI is the “client-side” check; pipeline CI remains the source of truth and the final gate. Agents that can run the same checks locally before they push break fewer builds and stay within the boundaries we set.

2. It should be fast. Really fast.

When the bottleneck shifts from “writing code” to “verifying code,” slow CI is a hard cap on agent throughput. Agents need to try things, get a red/green signal, and adjust, all in the same session, without losing state. Multi-minute pipelines are a non-starter for that loop.

Portable pipelines and hermetic builds address both requirements.

Goal 1 (agents running CI themselves, with the same or equivalent checks they’ll see in the pipeline) is exactly the kind of problem Dagger is built for. CI as code, runnable anywhere: on a developer machine, in an agent’s sandbox, or in the cloud. Same pipeline definition, different execution environment. That gives you “client-side” and “server-side” validation with one specification.
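To make “same pipeline definition, different execution environment” concrete, here is a toy Python sketch. This is not Dagger’s API (Dagger has its own engine and SDKs); it only illustrates the shape of the idea: the steps are declared once, and a pluggable runner decides where they execute. `run_on_host`, `in_container`, the `python:3.12` image, and the step commands are all illustrative assumptions.

```python
import os
import subprocess
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Step:
    name: str
    cmd: tuple[str, ...]


# The single pipeline definition (illustrative commands; assumes a Python project).
PIPELINE = (
    Step("compile", ("python3", "-m", "compileall", "-q", ".")),
    Step("test", ("python3", "-m", "unittest", "discover")),
)

Runner = Callable[[tuple[str, ...]], int]  # takes a command, returns its exit code


def run_on_host(cmd: tuple[str, ...]) -> int:
    """Execute directly, e.g. inside an agent's sandbox."""
    return subprocess.run(list(cmd)).returncode


def container_argv(
    image: str, cmd: tuple[str, ...], host_dir: str, workdir: str = "/src"
) -> list[str]:
    """Wrap a command so it runs in a throwaway container with the repo mounted."""
    return ["docker", "run", "--rm", "-v", f"{host_dir}:{workdir}", "-w", workdir, image, *cmd]


def in_container(image: str) -> Runner:
    """Build a runner that executes each step in a fresh container (e.g. on a CI server)."""
    def run(cmd: tuple[str, ...]) -> int:
        return subprocess.run(container_argv(image, cmd, host_dir=os.getcwd())).returncode
    return run


def run_pipeline(pipeline: tuple[Step, ...], runner: Runner) -> list[tuple[str, bool]]:
    """Run steps in order with the given runner; stop at the first failing step."""
    results = []
    for step in pipeline:
        ok = runner(step.cmd) == 0
        results.append((step.name, ok))
        if not ok:
            break
    return results
```

An agent would call `run_pipeline(PIPELINE, run_on_host)` in its sandbox, while the CI server calls `run_pipeline(PIPELINE, in_container("python:3.12"))`. Because both read the same `PIPELINE`, the checks themselves can’t drift apart, even when the environments differ.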

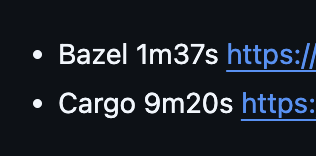

Goal 2 (speed) is where Bazel and the ecosystem around it shine. Hermetic builds, caching, and minimal recomputation mean you only run what changed. For an agent that’s making small, targeted edits, that can turn a 10-minute build into a 30-second one. When verification is the bottleneck, that kind of tooling stops being optional.
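The core trick behind “only run what changed” fits in a few lines. The toy below (the `Target` and `ToyBuild` names are hypothetical, and this is not how Bazel is implemented) hashes each target’s sources together with the keys of its dependencies, and skips any target whose key is already in the cache. Bazel applies the same principle to its action keys, with far more care about hermeticity.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class Target:
    name: str
    srcs: dict[str, str]  # file path -> contents (stands in for files on disk)
    deps: list[str] = field(default_factory=list)


class ToyBuild:
    """Content-addressed rebuilds: a target re-runs only if its key changed."""

    def __init__(self, targets: list[Target]):
        self.targets = {t.name: t for t in targets}
        self.cache: dict[str, str] = {}  # key -> build output
        self.executed: list[str] = []  # which targets actually did work

    def key(self, name: str) -> str:
        """Hash the target's own inputs plus the keys of everything it depends on."""
        target = self.targets[name]
        h = hashlib.sha256(name.encode())
        for path in sorted(target.srcs):
            # Separators keep the path/contents boundary unambiguous.
            h.update(f"{path}\0{target.srcs[path]}\0".encode())
        for dep in sorted(target.deps):
            # A change in a dependency changes this key too, so dependents rebuild.
            h.update(self.key(dep).encode())
        return h.hexdigest()

    def build(self, name: str) -> str:
        for dep in self.targets[name].deps:
            self.build(dep)
        k = self.key(name)
        if k not in self.cache:  # cache miss: do the (pretend) work
            self.executed.append(name)
            self.cache[k] = f"output of {name}"
        return self.cache[k]


if __name__ == "__main__":
    build = ToyBuild([
        Target("lib", {"lib.py": "v1"}),
        Target("app", {"app.py": "main"}, deps=["lib"]),
        Target("docs", {"README.md": "hi"}),
    ])
    for t in ("app", "docs"):
        build.build(t)
    build.executed.clear()
    build.targets["lib"].srcs["lib.py"] = "v2"  # a small, targeted edit
    for t in ("app", "docs"):
        build.build(t)
    print(build.executed)  # ['lib', 'app']
```

Editing `lib` rebuilds `lib` and its dependent `app` but leaves `docs` untouched, so an agent making a one-file change pays only for the affected slice of the graph.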

An example: OpenAI’s Codex used Bazel to cut build time from 9 minutes to just over 1 minute:

So @OpenAI started building Codex with @bazelbuild

The results speak for themselves pic.twitter.com/bnxn7obUYS

— Steeve Morin (@steeve) January 12, 2026

Shift left started as a way to find bugs earlier. In the AI era, it’s also the way we let agents work safely and quickly, without removing the safety net of the pipeline. Same principle, new reason to care.