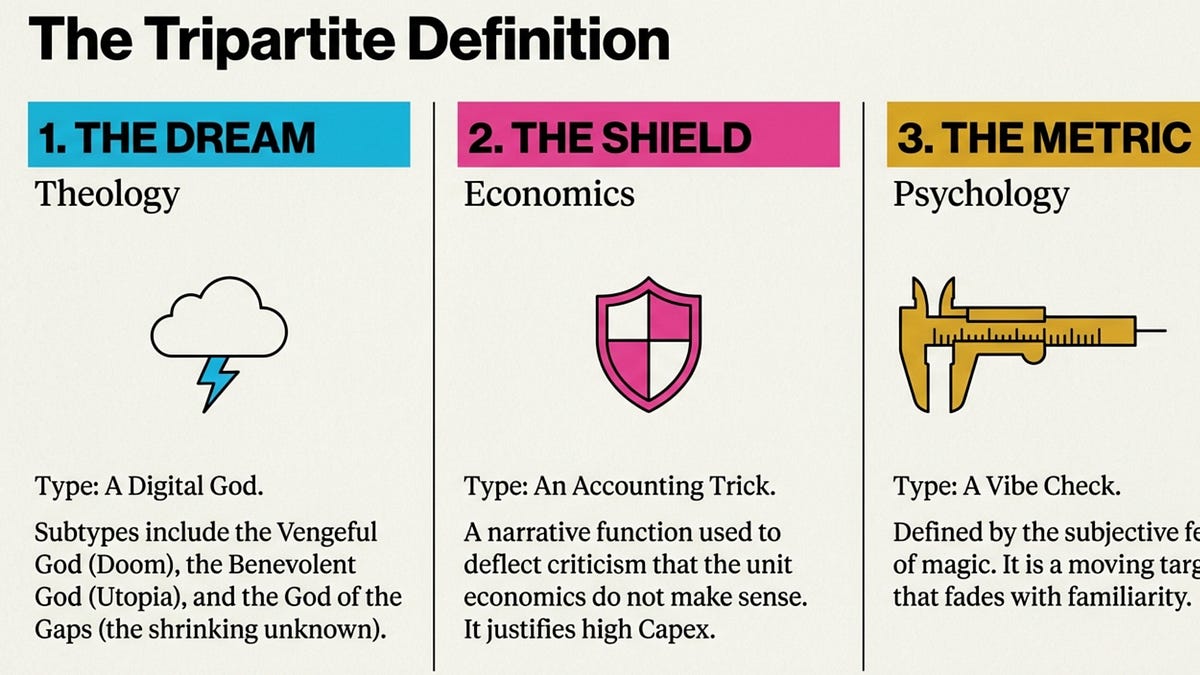

Modern AGI discourse stuffs three different concepts into one trench coat, and each has its own timeline. We have a Digital God, an Accounting Trick, and a Vibe Check.

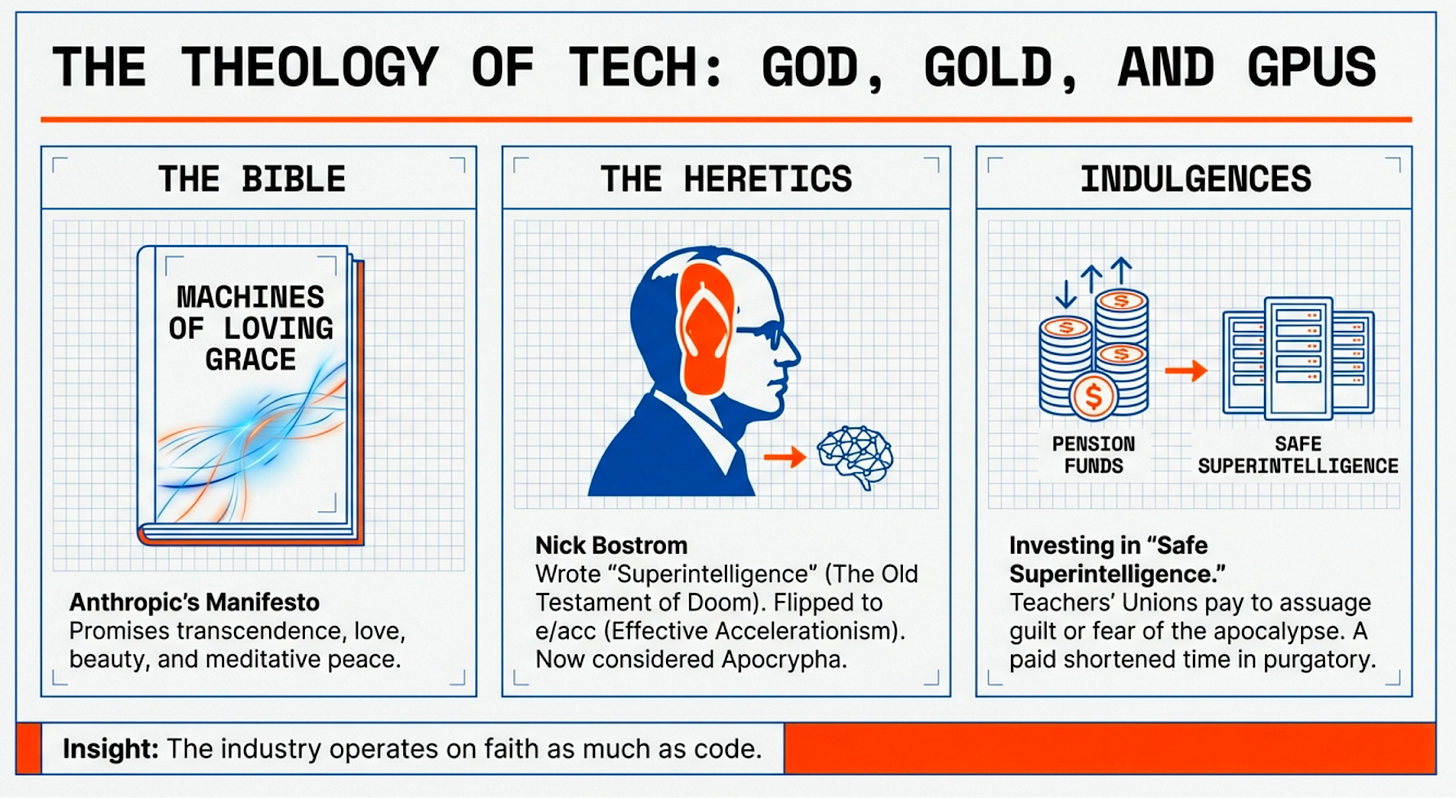

Sam Altman wants to build a digital God and put it in a box.

The optimists think this box will work like a vending machine:

The pessimists think it’s a trap.

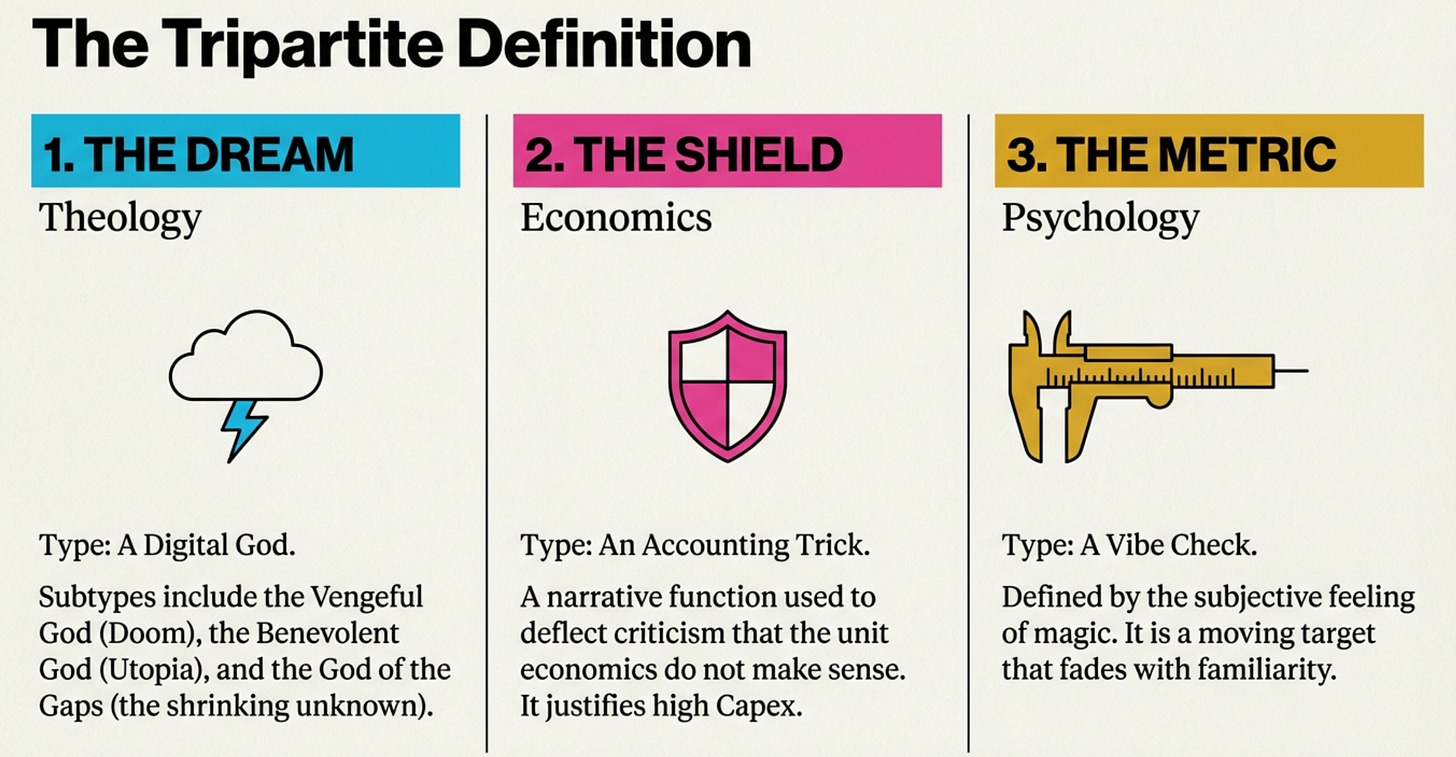

In my early OpenAI days, my colleague catherio organized weekly reading sessions of Nick Bostrom’s Superintelligence. We sat around discussing how to design a box that could contain an all-knowing demon. That book would be the gospel of the industry today, had Nick not turned faction-hopper and switched to e/acc.

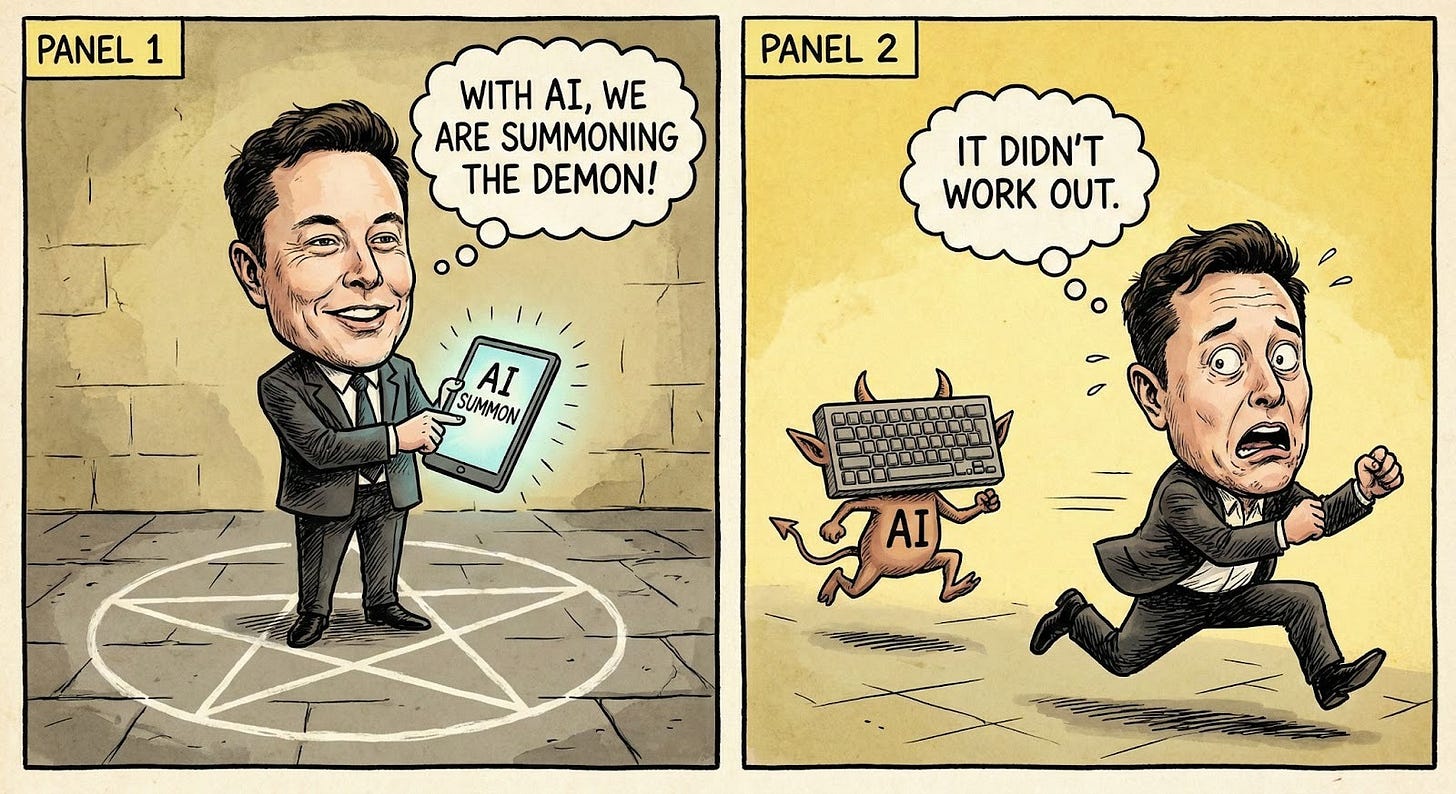

Elon Musk in 2014:

“With artificial intelligence, we are summoning the demon. In all those stories where there’s the guy with the pentagram and the holy water, it’s like—yeah, he’s sure he can control the demon. It didn’t work out.”

An Anthropic friend recently dropped off a copy of “Machines of Loving Grace”, and I read it with curiosity.

In contrast to the Old Testament wrath of the Vengeful God, Dario promises the New Testament. The future is bright. According to Dario, we may experience

> extraordinary moments of revelation, creative inspiration, compassion, fulfillment, transcendence, love, beauty, or meditative peace

Sounds like heaven.

I feel calmer just by looking at this picture.

People don’t like being intimidated. The angry God never polled as well as the benevolent one. Eliezer is getting marginalized; Dario is on the rise. Even the “StopAI” crowd respects Anthropic—they are the “least-bad” of the demon summoners.

I sometimes get asked what my P(Doom) is, and have a hard time answering. P(Doom)=0 implies a lack of epistemic humility. But if not zero, what else? I sometimes ask in response “what is your P(Rapture)?”, and observe a similar difficulty.

This discourse has gone into overdrive recently, propped up by companies relying on “god in the machine” narratives to rationalize their astronomical valuations.

When people ask me what I think about the timeline to build a digital God, the answer is that I don’t think about it. I’m not a religious man.

Before the latest religious swerve, AGI had quietly existed as the “God of the Gaps”.

McCarthy called chess the Drosophila of AI: the fruit fly we study to understand the species. He considered it AGI-complete. That seems strange now, but at the time AI was about search, and the chess search tree looked infinite; navigating it seemed magical.

When Deep Blue defeated Kasparov in 1997, he refused to believe that the machine had beaten him and accused the organizers of smuggling in a human to assist it. The irony: the best human player at the time was Kasparov himself.

This was the first instance of a machine passing the Turing Test. Or was it ELIZA in the 1960s?

Following the Deep Blue victory, AAAI had a discussion panel on the implications. One of the participants “felt the AGI”, expecting Deep Blue technology to quickly deprecate human labor.

Others were more demanding. They pointed out that computers followed the rules and weren’t creative like humans.

Mark Riedl proposed the Lovelace 2.0 test to address this: give the agent instructions like “Create a Van Gogh-style picture of a penguin holding a bucket of ice.”

I’ve hand-drawn the picture below and will be monitoring AI’s progress on the updated benchmark.

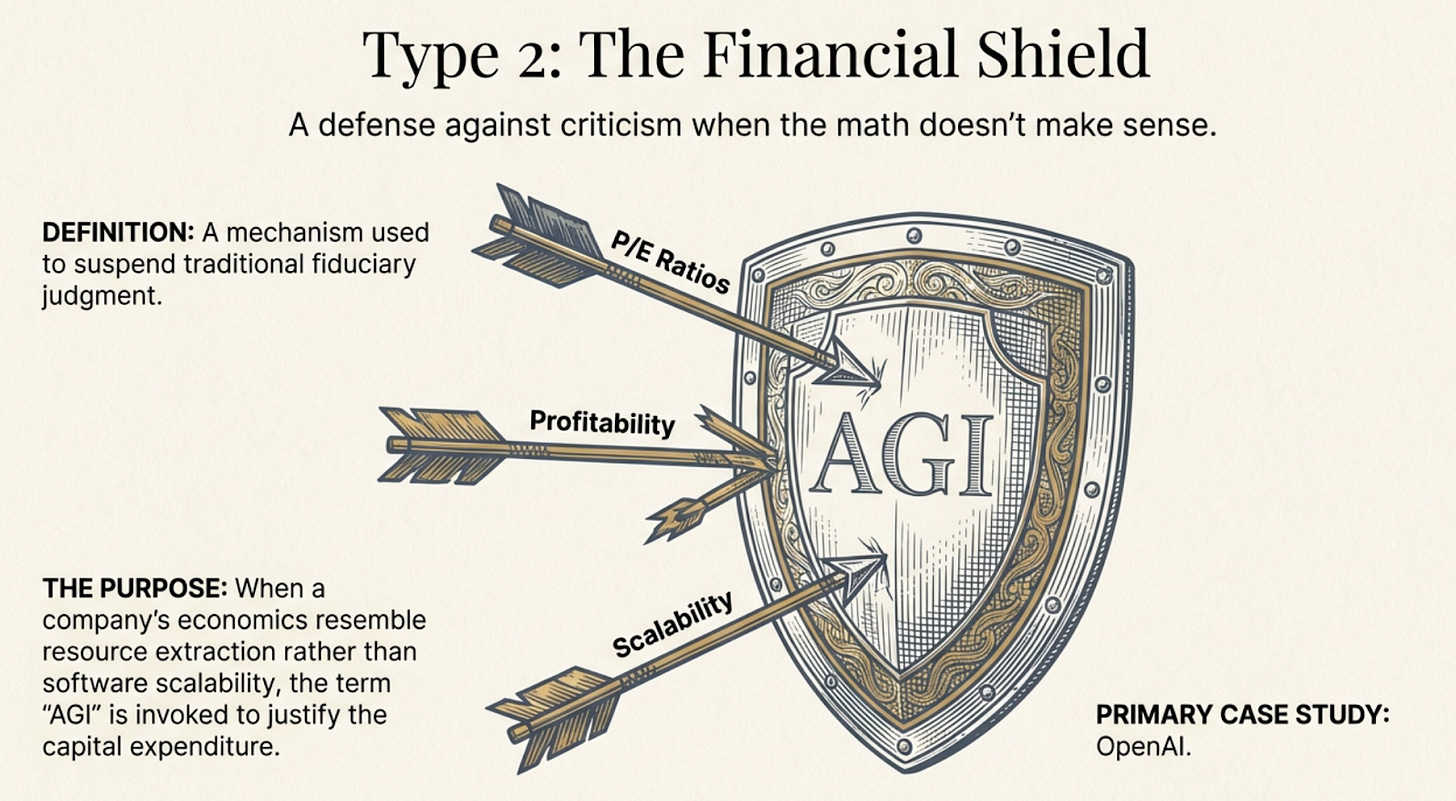

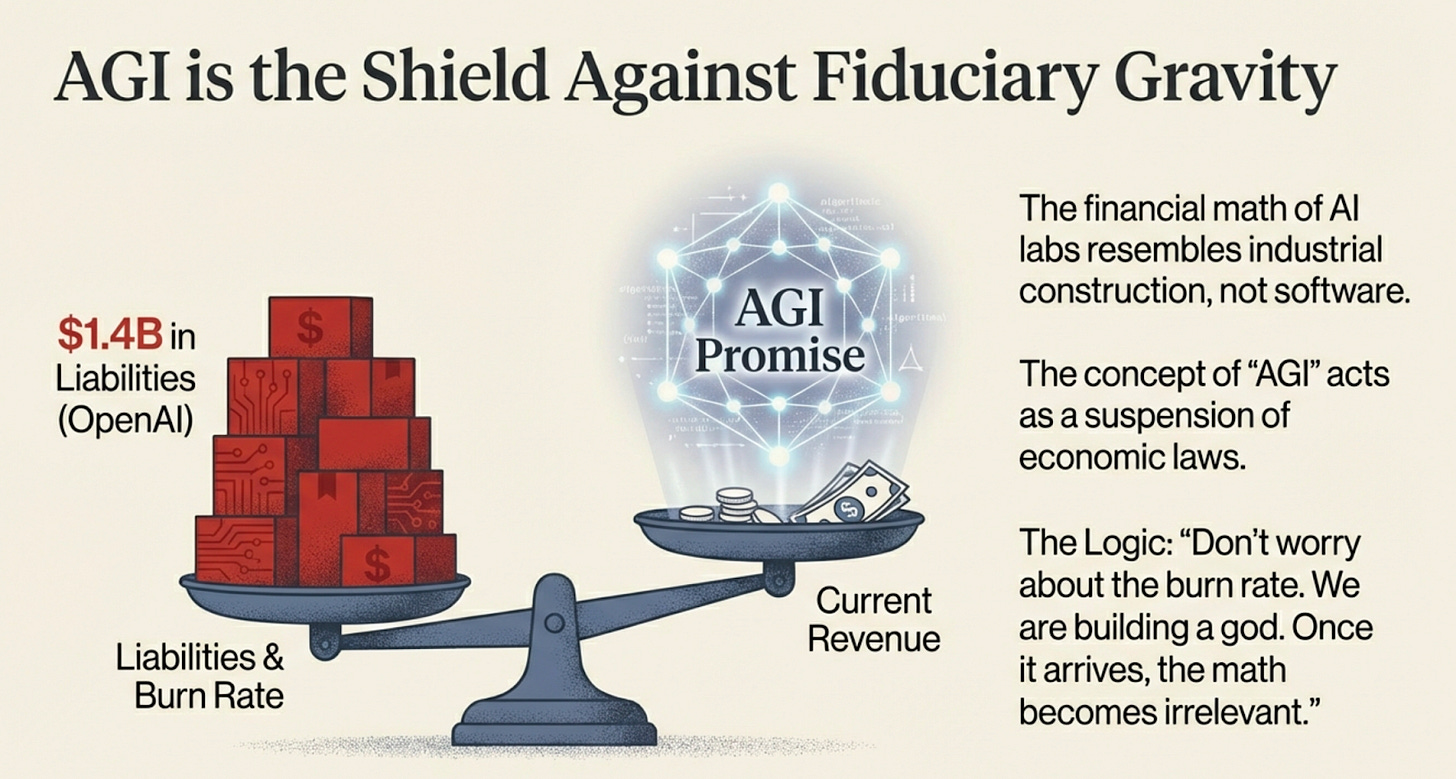

This is the second kind of AGI. It is a financial maneuver—a shield used to deflect the criticism that the math doesn’t make sense.

A few people have written about the math, including Montgommery and HSBC.

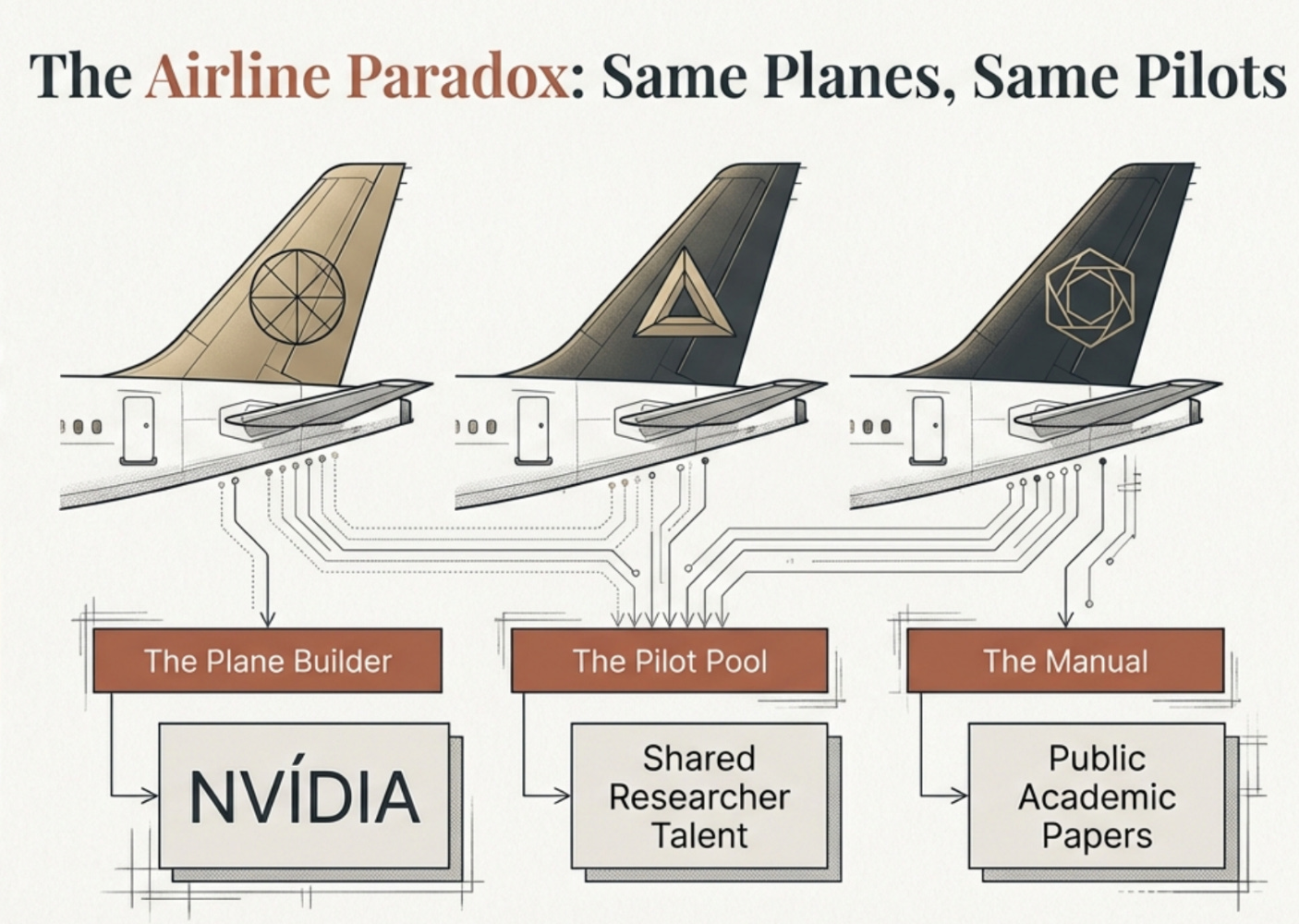

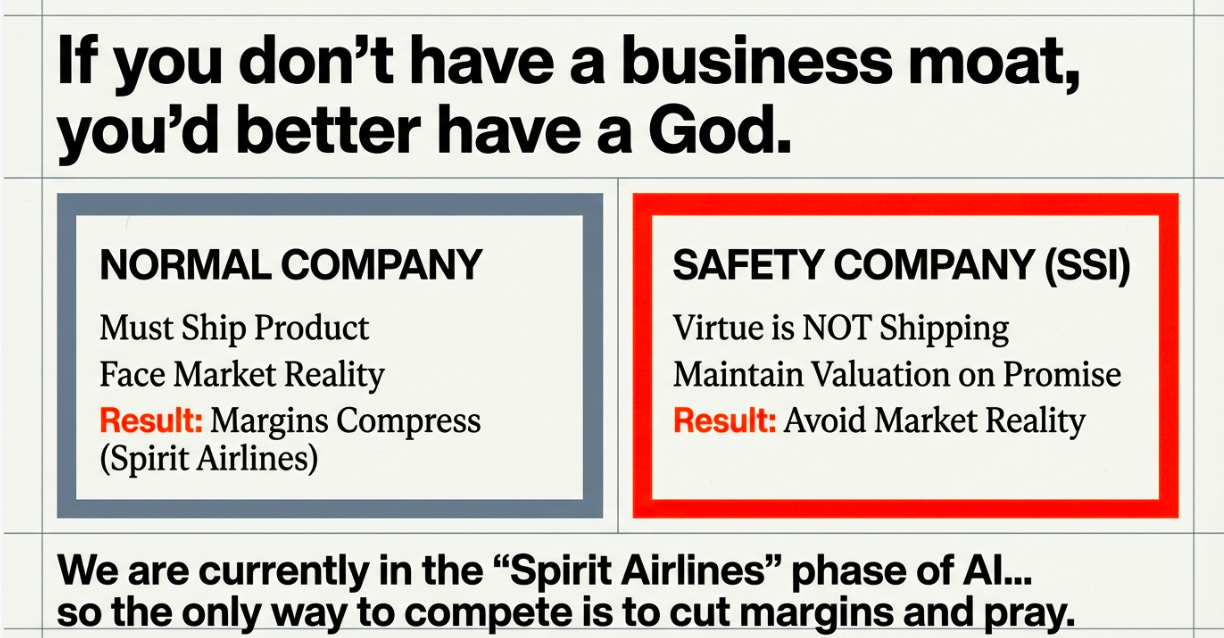

The core problem is that AGI labs are high capex and low margin, making them more like airlines than software companies.

A friend put it best — AGI labs are Spirit Airlines with a Messiah Complex.

They buy the same planes (GPUs) from the same factory (Nvidia). They hire pilots from the same pool. It is a brutal, commodity business. The main difference is that Spirit Airlines doesn’t promise that the plane will eventually turn into an angel and cure death.

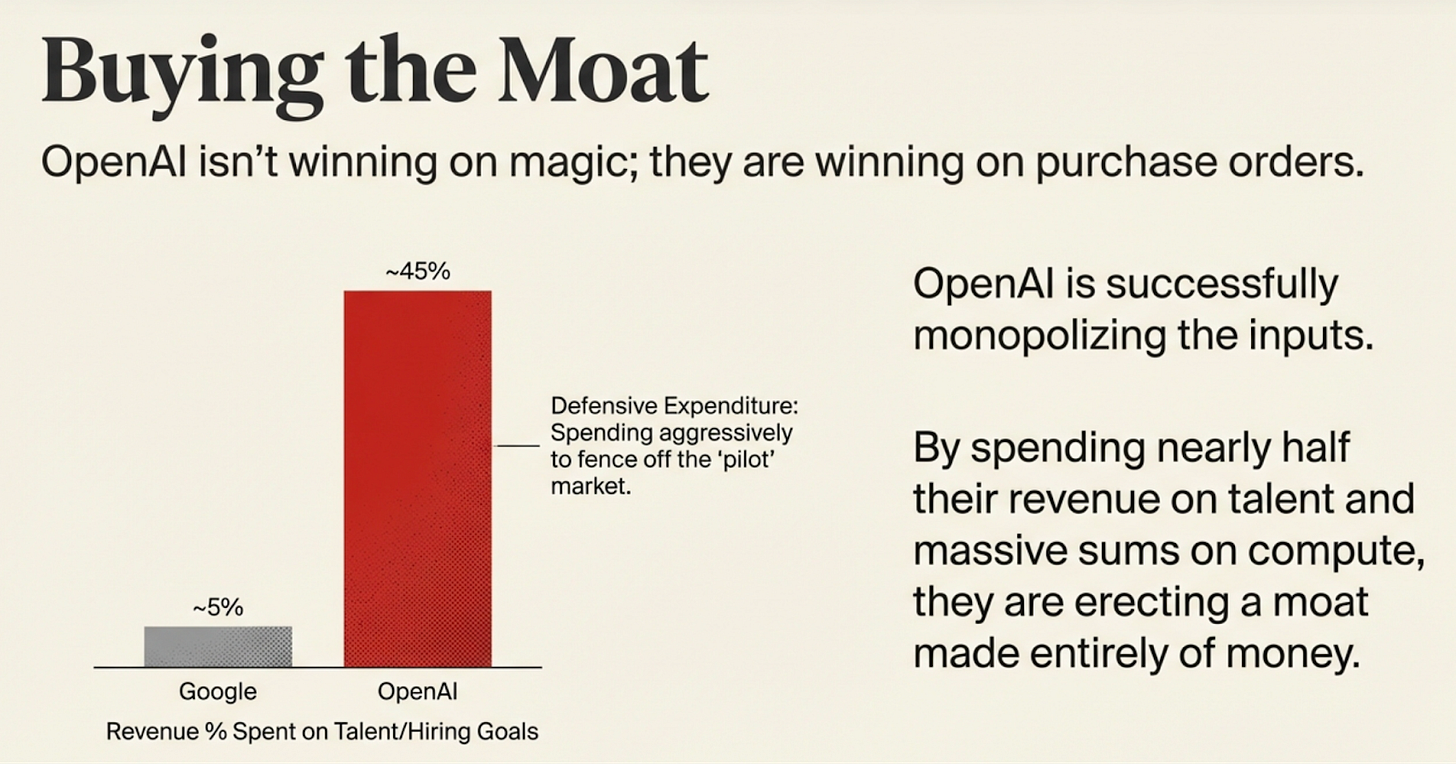

With the launch of GPT-4, OpenAI had a two-year head start to build a significant moat. They didn’t. Credit cards on file form a minor moat, but turning that into a sustainable business requires curtailing growth ambitions.

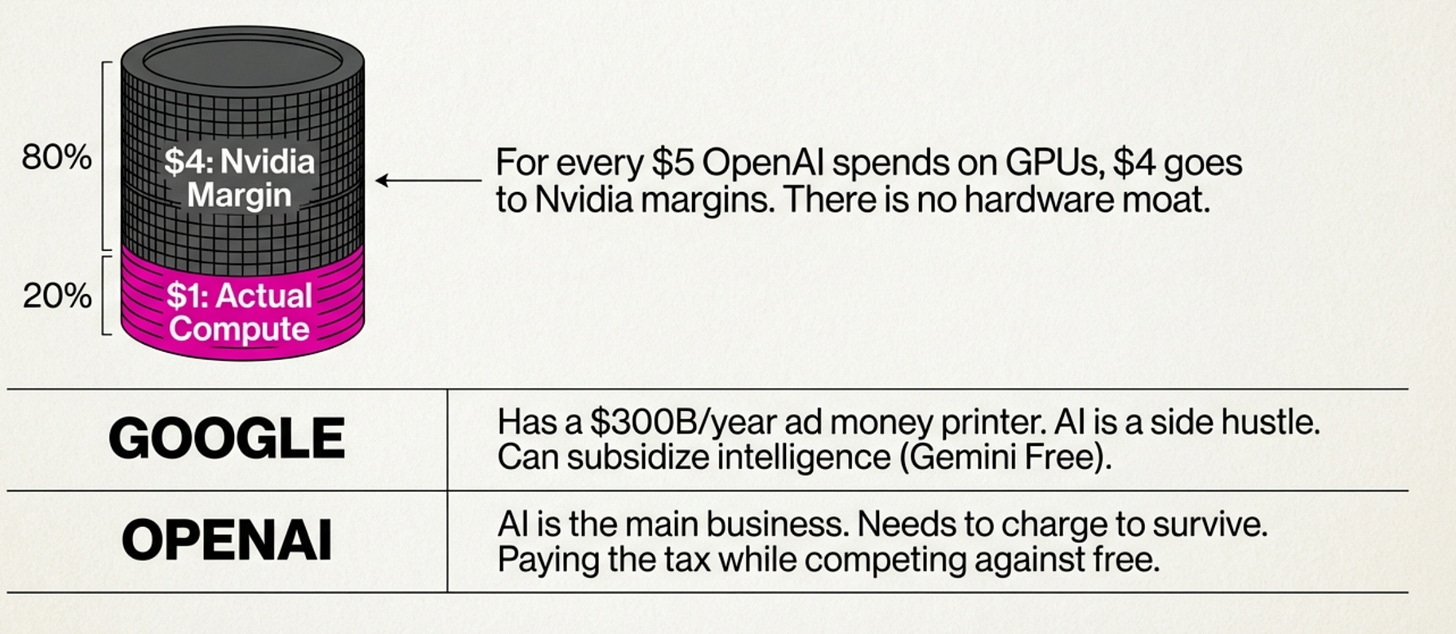

They are now paying the “Nvidia tax” while competing head-to-head against Google, which has its own hardware. Nvidia’s margins are 80%, so for every $5 OpenAI spends on GPUs, they only get $1 worth of compute. The remaining $4 goes to fund an Nvidia employee’s third vacation home in Marin.
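The $5-to-$1 figure is plain gross-margin arithmetic: if the vendor keeps 80% of each dollar as gross margin, only 20% covers its cost of goods, which is the same as paying a 5x markup. A minimal sketch, assuming “worth of compute” means the vendor’s cost of goods (the post’s framing, not a precise accounting definition); the function names are illustrative:

```python
def cost_basis(spend: float, gross_margin: float) -> float:
    """Share of a purchase that covers the vendor's cost of goods.

    Assumes the vendor keeps `gross_margin` of each dollar as gross profit
    and treats the remainder as the "worth" of the hardware (the post's framing).
    """
    if not 0.0 <= gross_margin < 1.0:
        raise ValueError("gross_margin must be in [0, 1)")
    return spend * (1.0 - gross_margin)


def markup(gross_margin: float) -> float:
    """Price divided by cost: an 80% gross margin means paying 5x the cost."""
    return 1.0 / (1.0 - gross_margin)


spend = 5.0    # dollars spent on GPUs (illustrative)
margin = 0.80  # Nvidia gross margin, as stated in the post
cost = cost_basis(spend, margin)
print(f"${spend:.2f} spent -> ${cost:.2f} of cost, ${spend - cost:.2f} of margin")
print(f"markup: {markup(margin):.1f}x")
```

Running it prints `$5.00 spent -> $1.00 of cost, $4.00 of margin` and `markup: 5.0x`. Comparisons in the checks use a small tolerance because `1.0 - 0.80` is not exactly `0.2` in floating point.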

Nvidia is generous with its biggest customer, promising to invest $100B in OpenAI. That is about half of the $207B OpenAI needs to raise by 2030. It also gives Nvidia leverage: they can threaten to pull back if OpenAI starts getting friendly with alternative chipmakers, which helps lock OpenAI into the 80% tax forever.

Google already has a $300B/year ad money-printing machine, so they don’t need to make money on AI. They can keep prices low enough to make sure nobody else makes money.

Currently I’m paying $250/month for Google Ultra because of a family-sharing bundle, but I still use the free version of Gemini for most tasks. The free version is good. Google is willing to hand out top-of-the-line intelligence for free because AI is a side hustle for them. AI labs need to charge for it because it’s their main business.

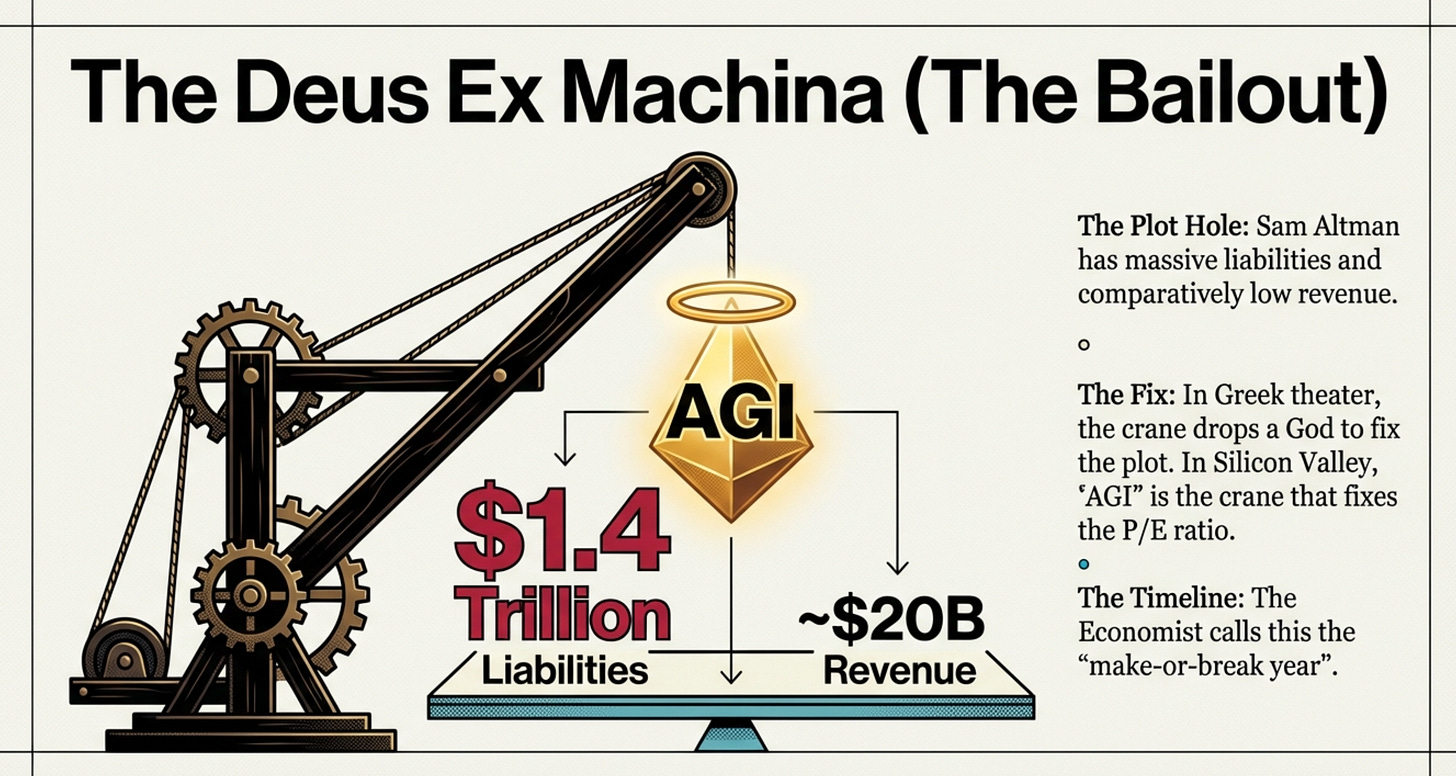

Sam signed up for $1.4 trillion in liabilities while sitting on ~$20B in annual revenue. He originally wanted $7 trillion, but boring people talked him down.

To make the math work, you have to invoke the deus ex machina. In Greek theater, when the plot got stuck, a crane lowered a god onto the stage to fix the ending. In Silicon Valley, AGI is the crane. It balances the spreadsheet. AGI = Account Gap Insurance.

Last November, they even tested the waters for a taxpayer bailout.

The glorious future is 5 years away, and everybody should chip in. I’ve heard that somewhere.

This drew backlash, and the comments were quickly walked back, so it’s currently unclear how OpenAI will get itself out of this pickle. Perhaps an Nvidia bailout?

The Economist called it the “make-or-break year” for OpenAI.

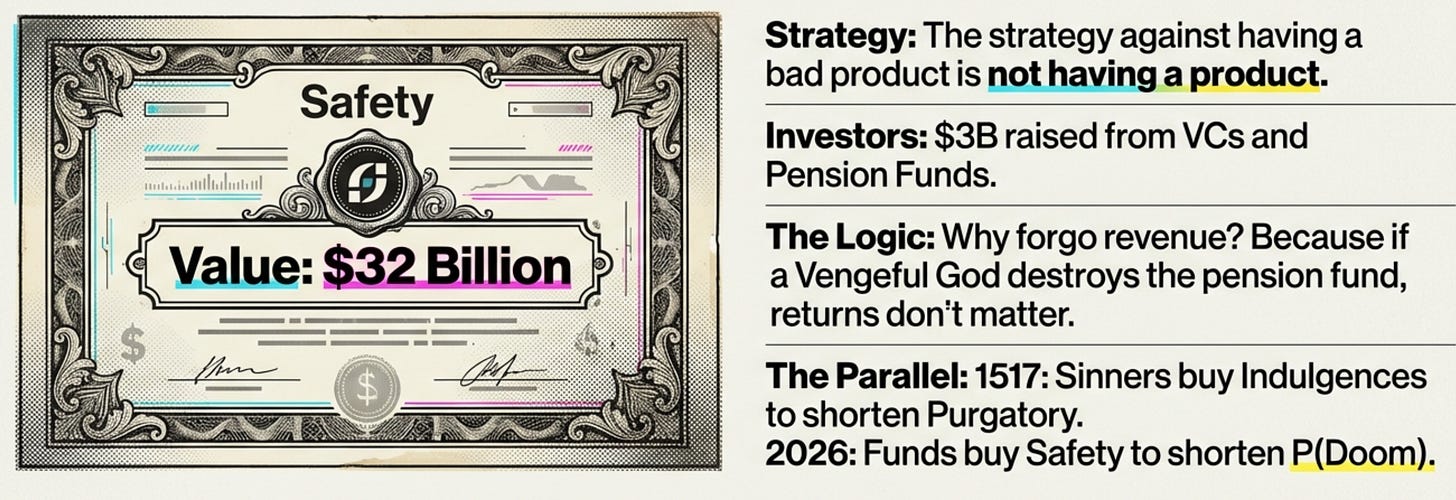

Then there’s Safe Superintelligence Inc. Their strategy against having a bad product is not having a product.

SSI incorporated as a traditional for-profit corporation and took $3B from VCs like Sequoia and Fidelity. These, in turn, manage money for LPs like the California State Teachers’ Retirement System.

VCs owe their LPs a fiduciary duty to pursue returns. A typical VC target is a 10x multiple. SSI is currently valued at $32B. Do they have a plan to grow to $320B?
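The $320B figure is just the 10x multiple applied to the current $32B valuation. A minimal sketch, assuming (hypothetically) that an investor enters at the current valuation and is not diluted before exit; investors who came in earlier at a lower price would need a smaller exit, while later dilution would push the required exit higher. The function name is illustrative:

```python
def required_exit(entry_valuation_bn: float, target_multiple: float) -> float:
    """Exit valuation (in $B) an investor needs to hit `target_multiple`.

    Assumes the investor's ownership stake is unchanged between entry and exit
    (no dilution from later rounds).
    """
    return entry_valuation_bn * target_multiple


ssi_valuation_bn = 32  # current SSI valuation from the post, in $B
typical_multiple = 10  # "typical VC multiple" from the post
print(f"Required exit: ${required_exit(ssi_valuation_bn, typical_multiple):.0f}B")
```

Running it prints `Required exit: $320B`.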

Ilya is not saying.

Why would the head of a for-profit corporation act like a leader of a monastic lab? AGI.

There is no point in financial returns if a Vengeful God destroys the pension fund. In 1517, sinners bought “Indulgences” from the Pope to shorten their time in Purgatory. In 2026, Pension Funds buy “Safety” from Ilya to shorten their P(Doom). It is a carbon offset for the apocalypse. As long as the Shield holds, we have Type 2 AGI.

When Gemini 3 came out, the jump in performance was so significant that some people declared it AGI.

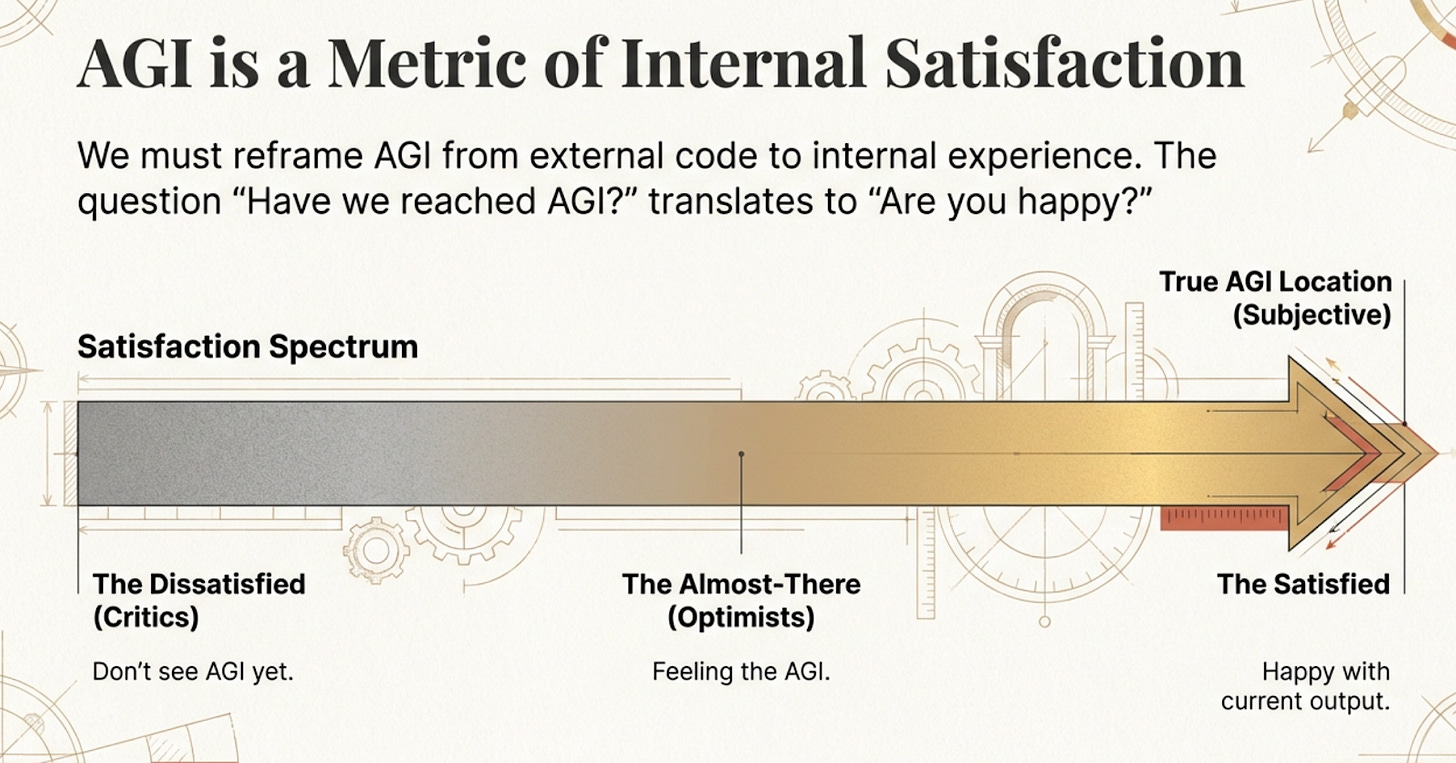

This is the third type: AGI is just the computer giving us what we want. It is a personal metric. When we get what we want, we have AGI. When we get used to it, we don’t.

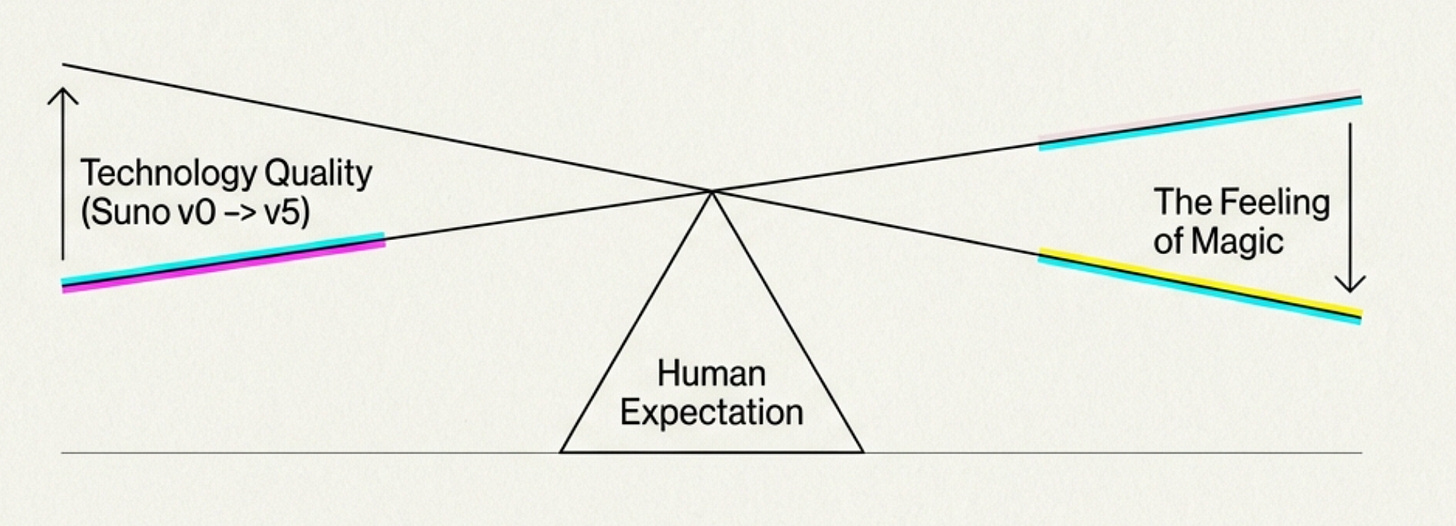

I personally had a “feel the AGI” moment when I first tried Suno. Going from “produce your own songs” to Suno v5 is dramatic.

Looking at my RescueTime activity history, I realized I had spent several days regenerating songs and, for a second, thought I had been reward-hacked. But then I considered the social component and decided that it was worth it.

(By the way, please contribute to my Science Radio; I want something to listen to while I make breakfast.)

The number of people who “feel the AGI” increases as tech improves. But as tech improves, our expectations rise, and the number drops again. It is a seesaw.

We may see this back-and-forth on “Type 3” AGI, until we reach the limits of human imagination.