Meta’s next-generation large language model, Avocado, is drawing industry attention even before its official release. According to internal assessments, the model has already surpassed today’s leading open-source AI models, even though it has so far been evaluated only at the pretraining stage.

Avocado is the flagship model under development at Meta Superintelligence Labs, or MSL, the advanced AI research unit launched by Meta last year. Internally, it has been described as the strongest pretrained model the company has ever built.

Competing without post-training

Foreign media reports say Meta completed Avocado’s pretraining in January. Internal documents indicate that the model has not yet undergone reinforcement learning from human feedback or alignment tuning. Even so, Avocado reportedly rivals fully post-trained models in areas such as knowledge reasoning, visual understanding, and multilingual processing.

Meta insiders claim Avocado outperforms the highest-performing open-source models on most benchmarks. This is notable because pretraining typically gives a model broad general knowledge, while instruction following and real-world usability usually emerge only after post-training. Reaching this level of performance at the pretraining stage alone is widely seen as unusual.

A strategic shift after LLaMA 4

Avocado’s emergence reflects a broader shift in Meta’s AI strategy. The company faced setbacks while developing LLaMA 4, which had been positioned as its next major model in 2025. After repeated delays, several of the LLaMA 4 releases that followed fell short of expectations in the developer community, fueling internal concerns.

In response, Meta restructured its AI organization. In June last year, it invested approximately $14.3 billion in data-labeling firm Scale AI and brought in Scale AI’s CEO, Alexandr Wang. Under his leadership, Meta Superintelligence Labs was established, and Avocado represents the first major outcome of this reorganization.

Efficiency becomes the real headline

Beyond raw performance, Avocado is gaining attention for its computational efficiency. Internal materials suggest the model is roughly ten times more efficient than Meta’s previous model, Maverick, on text-based tasks. Compared with Behemoth, the largest and still unreleased model in the LLaMA 4 family, the efficiency gap is said to exceed 100 times.

Meta attributes these gains to higher-quality training data, heavy investment in large-scale infrastructure, and the adoption of deterministic training methods. By tightly controlling training conditions to produce consistent outcomes, Meta aims to reduce both energy consumption and development costs. As compute expenses become a growing concern across the AI industry, this approach signals a strategic pivot away from brute-force scaling.

Cautious optimism ahead of release

Industry observers remain cautious. All performance claims so far are based on internal evaluations, and Avocado’s true competitiveness will only be confirmed after external release. Given Meta’s struggles over the past year, some analysts warn that expectations may be running ahead of validation.

Still, confidence is evident among Meta’s leadership. CTO Andrew Bosworth recently said at the World Economic Forum that Meta’s AI models are technically very strong, while noting that large-scale post-training is still required before consumer deployment.

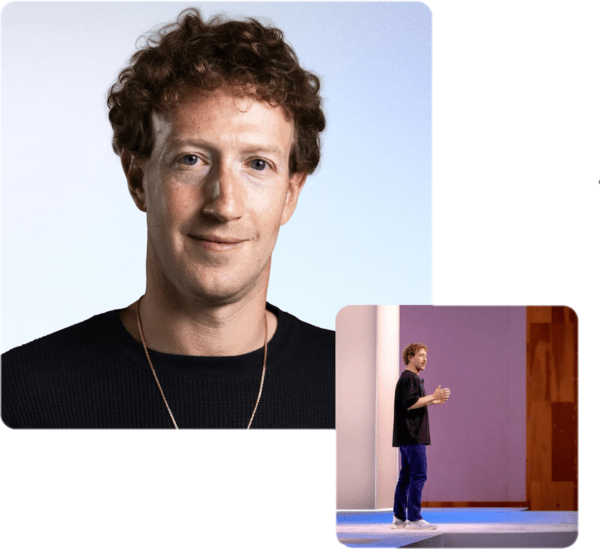

CEO Mark Zuckerberg echoed that sentiment, saying the first models released by MSL would demonstrate how quickly Meta’s AI capabilities are advancing, and signaling a steady rollout of new models this year.

Meta has not announced an official release date for Avocado. However, sources suggest it could debut in the first quarter alongside Mango, an image and video generation model. Whether Avocado can truly prove that pretraining alone is enough to beat top open-source AI models is now shaping up as the next major question in the global AI race.

by Ju-baek Shin | jbshin@kmjournal.net