I am as ASI-pilled as the next guy. I won’t rehash the whole argument; I’ll just say that Leopold Aschenbrenner, if anything, was too pessimistic. Reinforcement learning and inference-time scaling have worked much better than most people thought possible a couple of years ago.

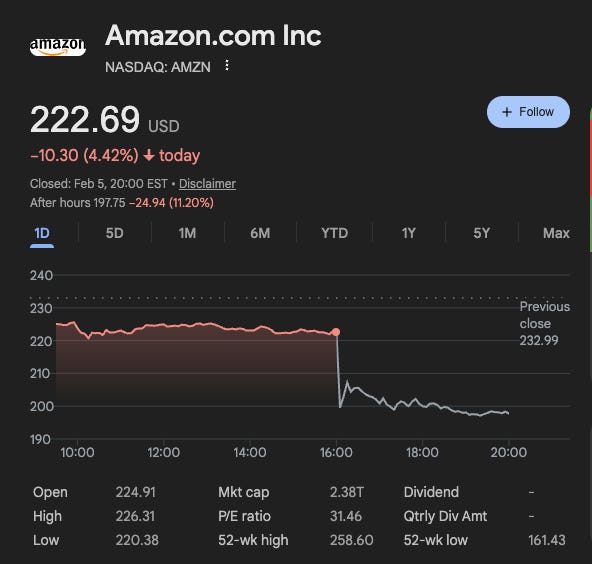

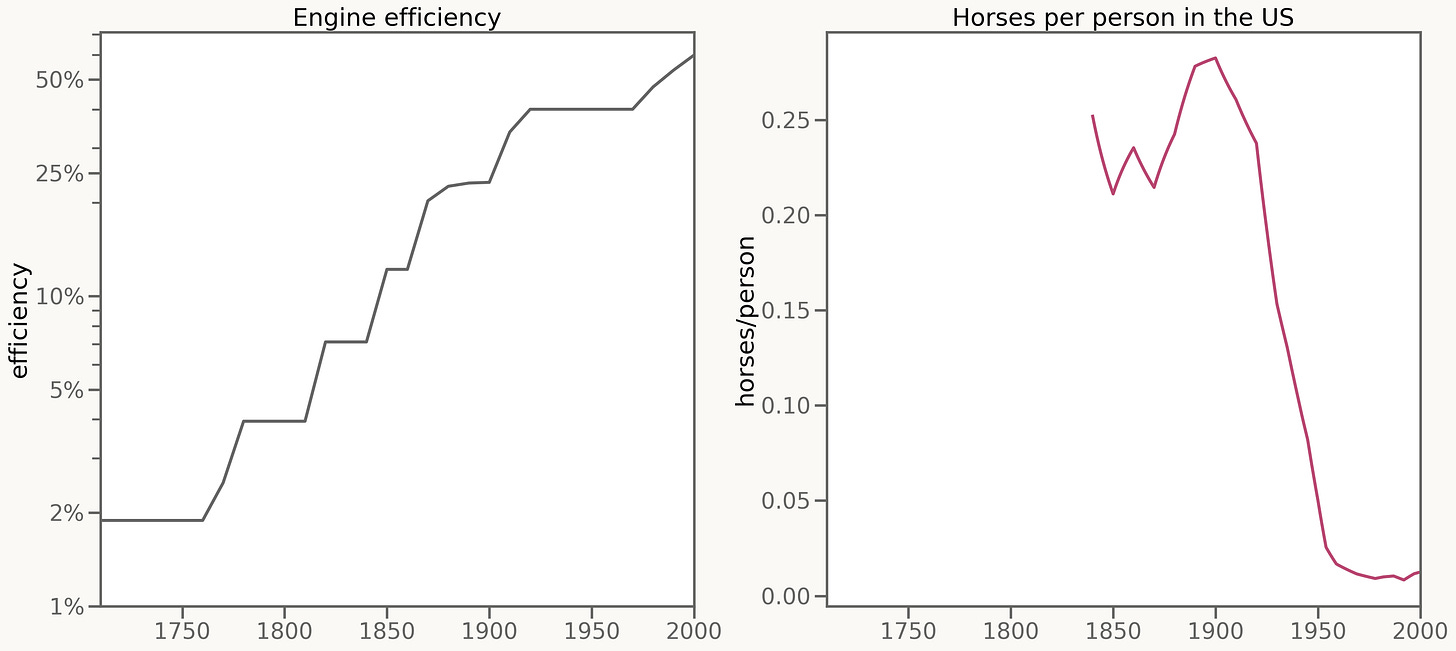

These days, the market seems to have concluded both that AI will disrupt software, services, and data providers, and that it is bad news when big tech companies such as Amazon and Alphabet write $200B capex checks.

To some extent, this is counterparty risk: the market is spooked that OpenAI and Anthropic are money-losing enterprises.

To some extent, this is ROIC risk. Everyone understands that if you are selling H100 compute at retail prices, you earn 25%+ IRRs, but people also understand that if you sell GB200 capacity to OpenAI on 5-year contracts, you’re probably not making a 25% IRR.
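A quick way to see why contract terms matter so much: IRR is the discount rate at which a project’s cash flows net to zero. Below is a minimal sketch that solves for it by bisection. The cash flows are hypothetical placeholders (100 units up front, then five years of flat rental income), not actual H100 or GB200 economics, and the sketch ignores opex, financing, and residual value.

```python
def npv(rate: float, cash_flows: list[float]) -> float:
    """Net present value; cash_flows[0] happens today (t = 0)."""
    return sum(cf / (1.0 + rate) ** t for t, cf in enumerate(cash_flows))


def irr(cash_flows: list[float], lo: float = -0.99, hi: float = 10.0, tol: float = 1e-9) -> float:
    """Internal rate of return by bisection: the rate where NPV crosses zero.

    Assumes a conventional profile (one outflow up front, inflows after),
    so NPV falls as the rate rises and has a single root in [lo, hi].
    """
    f_lo = npv(lo, cash_flows)
    if f_lo * npv(hi, cash_flows) > 0:
        raise ValueError("NPV does not change sign on [lo, hi]")
    while hi - lo > tol:
        mid = (lo + hi) / 2.0
        f_mid = npv(mid, cash_flows)
        if f_lo * f_mid <= 0:
            hi = mid  # root lies in the lower half
        else:
            lo, f_lo = mid, f_mid  # root lies in the upper half
    return (lo + hi) / 2.0


# Hypothetical: same hardware cost, two pricing regimes over a 5-year life.
retail = [-100.0] + [40.0] * 5    # "retail" rental pricing
contract = [-100.0] + [28.0] * 5  # discounted long-term contract

retail_irr = irr(retail)
contract_irr = irr(contract)
print(f"retail IRR: {retail_irr:.1%}, contract IRR: {contract_irr:.1%}")
```

With these made-up numbers, the retail stream clears 25% while the contract stream lands in the low teens: a moderately lower price over the same hardware life cuts the return sharply.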

All the financing-risk talk is noise. No one is concerned that Oracle will have trouble raising financing. People are concerned that Oracle’s contract with OpenAI isn’t a good one, and so isn’t worth financing.

The same goes for all the depreciation talk. Physically, the GPUs will most likely work just fine for 7 years. Companies and investors are only in trouble if we overbuild this decade. Of course, you can frame this as a depreciation question, but the real question is simply: will people still want to buy GB300s in 2031? If we are not in an oversupply of compute, the answer is most likely yes.
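The depreciation question comes down to whether the hardware keeps earning in its later years. A minimal sketch with hypothetical numbers (a cluster costing 100 units, no salvage value, no opex, no discounting): stretching straight-line depreciation from 5 to 7 years lowers the annual charge, but the lifetime economics depend on what customers still pay in years 5 through 7.

```python
CLUSTER_COST = 100.0  # hypothetical up-front cost, arbitrary units


def straight_line(cost: float, life_years: int, salvage: float = 0.0) -> float:
    """Annual depreciation charge under the straight-line method."""
    return (cost - salvage) / life_years


def lifetime_margin(yearly_revenue: list[float], cost: float) -> float:
    """Revenue over the hardware's earning life minus its cost (no opex, no discounting)."""
    return sum(yearly_revenue) - cost


# Hypothetical revenue paths for the same cluster over a 7-year physical life.
tight_market = [25.0] * 7      # demand holds: the hardware still rents in years 5-7
glut = [25.0] * 4 + [5.0] * 3  # overbuild: rental prices collapse from year 5

print(straight_line(CLUSTER_COST, 5))               # 20.0
print(round(straight_line(CLUSTER_COST, 7), 2))     # 14.29
print(lifetime_margin(tight_market, CLUSTER_COST))  # 75.0
print(lifetime_margin(glut, CLUSTER_COST))          # 15.0
```

The accounting life is the same in both scenarios; only the demand assumption differs, which is the point of the paragraph above.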

But the reaction to Google’s capex guidance, which basically signals that it will wipe out its free cash flow, goes further. Only a fraction of Google’s capex will go to bare-metal GPUs and TPUs rented out for its Anthropic mega-deal. The market is telling you:

- I think you are overbuilding compute.
- I don’t think there is room to revise AI Capex numbers higher for ’27.
- To revise AI Capex numbers higher for ’27 and ’28, the AI Labs will need to generate revenue, and they will collectively exit ’26 at a run rate of “just” $110B.
- AI is a commodity, and high gross margins for the labs are a temporary phenomenon.

The market has been consistently and badly wrong about AI over the past six years; it shouldn’t surprise you that it could be wrong again.

The goal of this short post is to raise some questions. Maybe in the future we can debate them in depth. To be clear: I am still in the AI absolutist camp; I am just no longer sure whether I am on the AI bull side. I don’t want to pretend that any of the arguments here are fully worked out.

In my still-unpublished guide to AI investing, I argue that you should track capabilities, not revenue, because AI revenue lags far behind capabilities. And I spend a double-digit percentage of my waking hours tracking capabilities. They have kept increasing dramatically.

But it’s truly annoying how long the graveyard of businesses perceived as dramatically impaired by AI has become: from Chegg and Stack Overflow to the entire software industry, the entire IT services industry, and now the gaming industry! At some point last year, even Google was lumped into that group.

Besides the AI Labs themselves and the companies selling picks and shovels, no one seems to have created something cool with AI that the market rewards with a high valuation. Maybe I am exaggerating; Palantir, AppLovin, Walmart, and J.P. Morgan may be examples that qualify.

My point here is: no company has shown a demo that made the market say, “Oh, cool! You created something with AI, and now you’ll make a lot of money!” At some point, the market thought Microsoft 365 Copilot was that product.

Don’t get me wrong: Boeing and Airbus are successful companies selling their technology to an industry, airlines, that has done little but destroy shareholder value for decades. Maybe OpenAI, Anthropic, and Google DeepMind can be successful while the people buying their tokens don’t create shareholder value.

What’s worse: public market investors can’t buy the Labs, and Google DeepMind is attached to a business that may or may not be getting disrupted, and that already trades at a multiple in the high 20s anyway.

But as we need to start penciling in high-single-digit hundreds of billions of dollars in AI revenue for the early 2030s, it’s hard to see how we get there without success stories.
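To put a number on that gap, here is a minimal sketch of the compound annual growth rate needed to go from the roughly $110B run rate the labs exit ’26 with to a hypothetical $700B, $800B, or $900B. The five-year window (end of 2026 to end of 2031) and the targets are assumptions chosen to stand in for “high-single-digit hundreds of billions in the early 2030s”.

```python
def required_cagr(start: float, end: float, years: float) -> float:
    """Constant annual growth rate that turns `start` into `end` over `years` years."""
    if start <= 0 or end <= 0 or years <= 0:
        raise ValueError("start, end and years must be positive")
    return (end / start) ** (1.0 / years) - 1.0


EXIT_2026_RUN_RATE_B = 110.0  # from the text: ~$110B collective run rate exiting '26

# Hypothetical targets for the early 2030s, in billions of dollars.
for target_b in (700.0, 800.0, 900.0):
    g = required_cagr(EXIT_2026_RUN_RATE_B, target_b, years=5)  # assumed end-2026 -> end-2031
    print(f"${target_b:.0f}B by 2031 needs ~{g:.0%} growth per year")
```

Under these assumptions, the labs would need to compound revenue at roughly 45% to 52% a year for five straight years.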

2025 was the year of the DeepSeek moment and the year of the Claude Opus 4.5 moment.

Most people think the success of U.S. state-of-the-art (SOTA) models, in particular Anthropic’s Claude Code and OpenAI’s Codex family, is a verdict against open source.

I don’t plan to stop using U.S. SOTA models anytime soon, but, being honest with ourselves, if current trends hold, most people won’t have needs that require the models launched in late 2027.

We already see this at OpenAI, which uses a different family of models for coding than for serving general audiences. For 90% of the public, ChatGPT and Gemini are as good as they’ll ever need them to be. At some point, 90% of vibe coders will have models that are as good as they’ll ever need them to be.

At some point, you’ll be able to buy open-source models that solve your problems for a fraction of the price of Claude Opus 8. And when that happens, and it might happen quickly, AI Labs that never turned a penny of profit selling tokens will need to find new business models.

In some sense, this is an open secret in San Francisco: everyone understands you won’t be able to turn a profit by selling tokens. Alphabet’s Isomorphic Labs is the blueprint of the future: you have some private AI/ML that only you have access to, and you use it to create an insane medicine only you can create.

I like to joke that Anthropic’s business model is:

- Focus all your scarce resources on making an LLM good at coding.
- Sell that coding LLM to others to make some money and get some data.
- Create a proto-AGI LLM that can trigger a recursive self-improvement loop.
- Create a superhuman programmer.
- Ask it to solve humanoid robotics.
- Fabricate an army of humanoid robots.
- Charge you for the robot that cuts your hair.
- Profit.

This is extremely tongue-in-cheek. But if you listen to Leopold Aschenbrenner, Demis Hassabis, or Elon Musk, they all see the labs becoming something very different.

Of course, this might happen. Maybe you can, in fact, create Claude Opus 7, ask it to solve humanoid robotics, it does solve humanoid robotics, and you turn a profit.

In reality, the business-model shift will require an immense amount of execution to transition gracefully from one revenue stream to another. If you thought the market had a hard time with the transition from perpetual software licenses to SaaS, imagine it being asked to believe that Isomorphic Labs can create trillions of dollars of shareholder value, but only once its great patented drugs make it through the 10-year FDA approval cycle.

The big theme of this post is that the timing of AI’s benefits can and will be mismatched with the timing of its costs.

If we get a software-only singularity (a superhuman programmer), it will have immense effects on white-collar employment. And it could arrive a decade or more before the home robot that can clean our rooms, or the magic pills that will make us healthier and happier and help us live longer.

If that’s the world we are living in, you could see a recession and a big hit to consumer spending, which can and will affect the cash-cow businesses of all the big techs.

Not only that: as the exponential gets clearer, we could even see savings rates go up as more people get scared of AI.

Another point: once we have automated our stuff, we will ultimately build software that runs deterministically on cheap hardware. Maybe for a few years we will go through a lot of one-off AI spending to automate things, but by year 5, people won’t have many things left to vibe code.


Maybe Gary Marcus is right. Maybe 2026 is the year that deep learning, finally, hits a wall.

I am joking, AI won’t hit a wall in 2026.

But maybe you should entertain the idea that the gains of inference-time scaling and reinforcement learning with verifiable rewards can’t carry us much longer.

Honestly, I don’t believe this matters much. Even if we are still stuck with a proto-AGI with jagged intelligence, if current trends hold for the next 12-18 months, the world will have AI systems so much better at coding and AI research that computer science will be a solved problem.

But this might be investment-relevant. The labs spend the majority of their compute budget on training. As compute-hungry as we might be, it could be bad for the AI complex if the labs conclude they don’t need to keep increasing their R&D compute capacity.

Toby Ord asks: Are the Costs of AI Agents Also Rising Exponentially? It’s a great post, and I won’t rewrite it here.

There’s moderate evidence that, yes, a model capable of doing 4-hour tasks on the METR benchmark costs substantially more than 4x the price of a model capable of doing 1-hour tasks.

What I can say is that my own spending has been roughly 10x-ing per year since 2022: from $2/mo in 2022 to $20/mo in 2023, $200/mo in 2024, and $1,500/mo in 2025. I think I got more productive, but I doubt my employer will be willing to let me spend $10,000/mo on LLMs.
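The arithmetic behind that trajectory, using only the monthly figures above: the year-over-year multiples, the implied compound multiple, and a naive extrapolation to 2026 if the trend simply continued (an assumption for illustration, not a forecast).

```python
# Monthly LLM spend quoted in the text, in USD.
monthly_spend = {2022: 2, 2023: 20, 2024: 200, 2025: 1_500}

years = sorted(monthly_spend)
# Year-over-year growth multiples.
yoy = [monthly_spend[b] / monthly_spend[a] for a, b in zip(years, years[1:])]
# Compound annual multiple over the whole period.
span = years[-1] - years[0]
cagr_multiple = (monthly_spend[years[-1]] / monthly_spend[years[0]]) ** (1 / span)
# Hypothetical: the trend simply continues for one more year.
naive_2026 = monthly_spend[2025] * cagr_multiple

print(yoy)                      # [10.0, 10.0, 7.5]
print(round(cagr_multiple, 2))  # 9.09
print(round(naive_2026))
```

The compound multiple works out to roughly 9x a year, which would put a 2026 bill above the $10,000/mo mark.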

That’s not a problem in itself. The cost of AI at a fixed level of intelligence declines 90% per year. So while I might hit the limit of what I can spend by the end of 2026, by the end of 2027 I’ll be able to afford intelligence that was SOTA at the end of 2026, and by the end of 2029, 2028’s SOTA.
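Here is that 90%-per-year decline as a minimal sketch. The decline rate is the one stated above, applied as a constant; the $10,000/mo cost of using end-2026 SOTA and the flat $1,500/mo budget are hypothetical.

```python
ANNUAL_DECLINE = 0.90  # from the text: cost of a fixed level of intelligence falls 90%/year


def cost_after(initial_cost: float, years: int, decline: float = ANNUAL_DECLINE) -> float:
    """Cost of a fixed capability level `years` after it was state of the art."""
    return initial_cost * (1.0 - decline) ** years


def years_until_affordable(initial_cost: float, budget: float, decline: float = ANNUAL_DECLINE) -> int:
    """Smallest whole number of years until that capability fits within `budget`."""
    if budget <= 0 or not 0.0 < decline < 1.0:
        raise ValueError("need budget > 0 and 0 < decline < 1")
    years = 0
    while cost_after(initial_cost, years, decline) > budget:
        years += 1
    return years


# Hypothetical: end-2026 SOTA usage would cost $10,000/mo; the budget stays at $1,500/mo.
print(round(cost_after(10_000, 1)))          # 1000
print(years_until_affordable(10_000, 1_500))  # 1
```

A one-year lag behind the frontier is enough to bring a hypothetical $10,000/mo workload under a $1,500/mo budget.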

Obviously, only a fraction of people spend thousands of dollars per month on AI. But if costs keep rising like this, then even if we don’t hit a wall, most people won’t be able to afford the frontier models.

(This is more complex than it sounds. The labs can offer you the right mix of intelligence and cost so that they stay competitive with open source. But that is a difficult needle for them to thread, and just another execution challenge they might face.)

This is too much uncertainty! I recommend everyone read Julian Schrittwieser’s short essay Failing to Understand the Exponential, Again. Many people feel like it is February 2020 again, and the public has not woken up. Obviously, financial markets know better. They might demand higher equity risk premiums and de-rate everything whose economics we can’t see clearly five years out.

If the balance of risks seems to point toward deflation and unemployment, why wouldn’t you park your money in fixed income and wait? Maybe you hedge some of the inflationary risks (a Taiwan Strait crisis, a Greenland crisis), maybe you buy some OpenAI/Anthropic equity on the secondary market, and you wait for the uncertainty to clear.

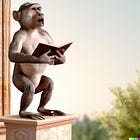

This essay, Horses, by Andy Jones, nails the point:

> Engines, steam engines, were invented in 1700.
>
> And what followed was 200 years of steady improvement, with engines getting 20% better a decade.
>
> For the first 120 years of that steady improvement, horses didn’t notice at all.
>
> Then, between 1930 and 1950, 90% of the horses in the US disappeared.
>
> Progress in engines was steady. Equivalence to horses was sudden.
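The compounding in Andy Jones’s numbers is easy to check: 20% per decade is under 2% per year, yet over the 200 years from 1700 to 1900 it multiplies to roughly 38x. The sketch also counts how many decades such steady progress takes to cross a fixed bar; the 10x threshold is an arbitrary stand-in for “as good as a horse”, not a figure from the essay.

```python
import math

GAIN_PER_DECADE = 0.20  # from the quote: engines got ~20% better per decade
DECADES = 20            # 1700 to 1900, i.e. 200 years

# Total improvement after 20 decades of steady compounding.
total_multiple = (1.0 + GAIN_PER_DECADE) ** DECADES
# Equivalent constant annual improvement rate.
annual_rate = (1.0 + GAIN_PER_DECADE) ** (1 / 10) - 1.0
# Decades until engines reach 10x their 1700 level (arbitrary illustrative threshold).
decades_to_10x = math.ceil(math.log(10) / math.log(1.0 + GAIN_PER_DECADE))

print(f"{annual_rate:.2%} per year compounds to {total_multiple:.1f}x over 200 years")
# prints: 1.84% per year compounds to 38.3x over 200 years
print(decades_to_10x)  # 13
```

Nothing about the curve changes at the crossing point; only its relationship to the fixed bar does.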

We are exactly at the point where 6-75 years of steady exponential progress in computing and machine learning are making computers considerably better than humans at a wide range of tasks.

If you want to take risk, ask yourself: can you pinpoint the future?

Now that the big techs are finally spending 100% of their operating cash flow on AI Capex, further upward revisions can only happen if the rest of the economy’s AI spending materializes. The bar is now much higher: you no longer just need to convince Larry Page, Mark Zuckerberg, Satya Nadella, and Masayoshi Son. You need to convince the entire world to send tens, and soon hundreds, of billions of dollars per year to Google DeepMind, Anthropic, and OpenAI so they can keep increasing their capex.

Visibility over capabilities seems clear. Visibility over the economy, not so much. Good luck out there!