Real Workflows People Are Running

The pattern I keep seeing is developers using Amp for work they know how to do, but don’t want to spend time on. Refactoring a component architecture across 11 files. Implementing an eval collection mechanism across 44 files. Migrating from one library to another. These are tasks where the hard part is keeping all the moving pieces consistent, not figuring out what needs to happen.

I have started small. Deep work is great for thorough investigations, like a full SEO code review to see where we can improve. Or check whether we have any security issues, such as XSS.

The Walkthroughs Feature Actually Makes Sense

Amp generates shareable walkthroughs that show exactly what changed and why. These aren’t just git diffs with comments. They’re interactive diagrams showing the flow of changes through your codebase. When an agent makes 50 file changes, you need a better way to review than scrolling through a massive diff. The walkthrough shows you the logic flow, which files connect to which, and why each change was necessary.

This is particularly useful for code review. Instead of asking your teammate to understand 1,200 lines of changes across 47 files, you send them the walkthrough that explains the migration strategy and shows the dependency chain. They can drill into specific files when they need detail, but the high-level view makes the review actually tractable.

What They Got Wrong And Fixed

Amp launched with a Tab feature that autocompleted code as you typed, similar to Copilot. They killed it in January, which is the right call. Autocomplete is a solved problem. Every editor has it. The differentiation isn’t in suggesting the next line of code, it’s in the agent taking over entire tasks so you don’t have to think about implementation details.

They also removed custom commands in favor of skills, which appears to be their plugin system. This is probably the right architectural choice even though it breaks existing workflows. The trend across all these AI coding tools is moving from imperative commands toward declarative goals. Instead of “run this script, then do that thing,” you tell the agent what you want, and it figures out the steps.

The Pricing Model Is Clever

The free tier gives you 10 dollars of API credits per day, ad-supported. That’s enough to do serious work. Some users were exploiting this, so now you have to request it. When you hit the limit, you pay as you go with no markup on the API calls for individual developers. This is smarter than Cursor’s subscription model because it aligns cost with usage. Heavy users pay more, light users pay less, and the free tier is generous enough that hobbyists can build real projects without having to pull out a credit card.

The ad-supported free tier is unusual for developer tools. I haven’t seen the ads yet, so I can’t comment on how intrusive they are. If they’re contextual and relevant to what I’m building, fine. If they’re shoving crypto scams in my face while I’m debugging auth flows, that’s a different conversation.

What This Means For How We Write Code

The workflow change is subtle but important. I’m not using Amp to write code faster. I’m using it to batch boring work so I can focus on architecture decisions and edge cases. When I need to refactor a component that’s used in 30 places, I describe the new pattern and let Amp update all the call sites. When I need to migrate from one API to another, I spec out the mapping and let Amp handle the mechanical translation.

This only works if you trust the agent enough to run unsupervised. That requires good tooling for reviewing changes, rolling back mistakes, and understanding what the agent actually did. Amp’s walkthrough feature and file-by-file change tracking make that feasible. I still review everything, but I focus on intent and correctness, not syntax or boilerplate.

The agents are getting good enough that the bottleneck is no longer code generation. The bottleneck is communicating what you want clearly enough for the agent to build the right thing. That’s a different skill from writing code, and it’s not obvious that being a great programmer makes you great at prompting agents. We’re all figuring this out in real time. Unfortunately, all of this is still far from deterministic, so no agent can run without some serious moderation.

The Security Implications Nobody Mentions

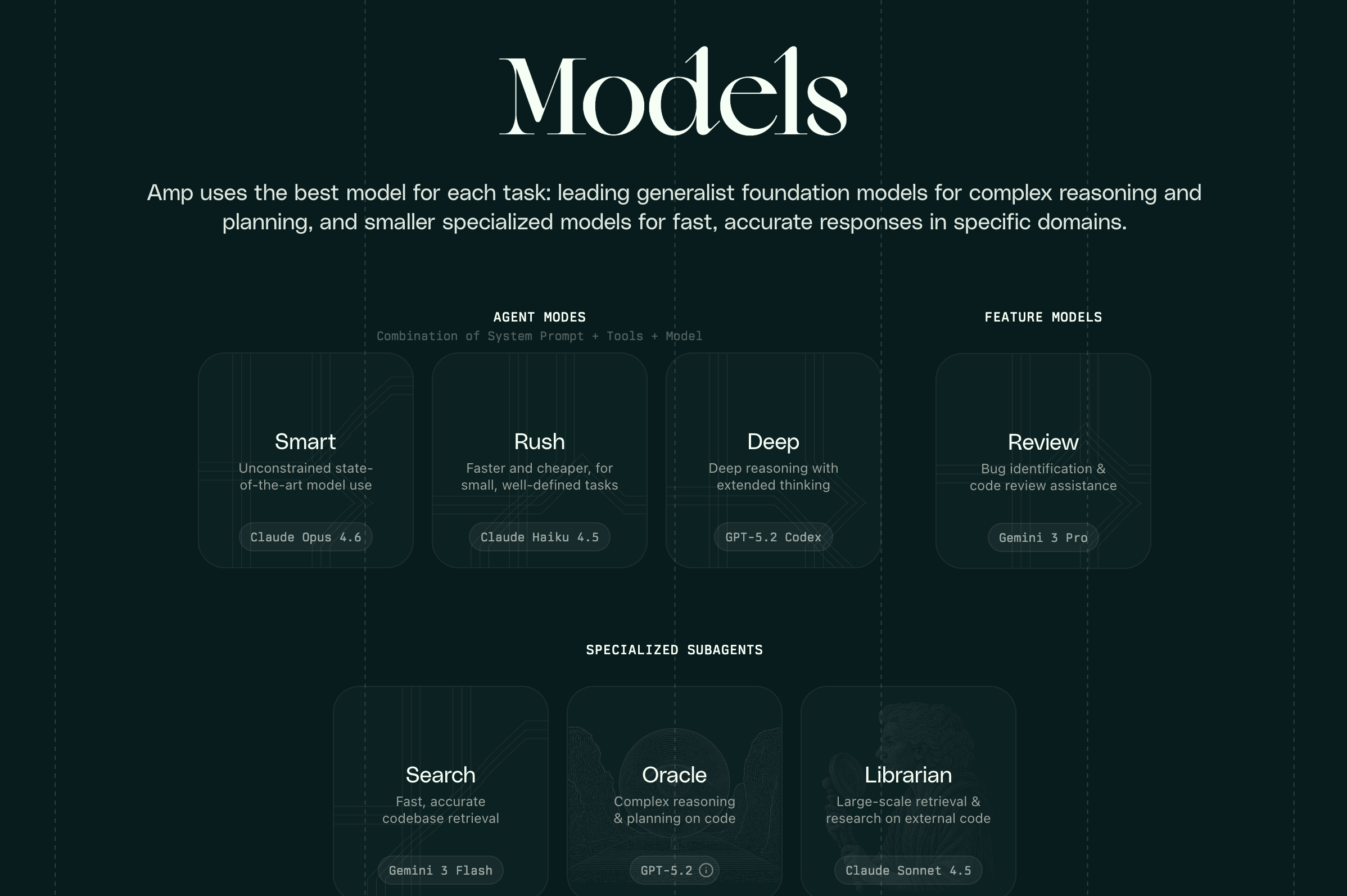

Amp has access to your entire codebase, your git history, and in some configurations, your terminal environment variables. If you’re working on proprietary code or handling sensitive data, you need to think about what’s being sent to which AI providers. Their docs mention support for multiple models, including GPT-5 and Sonnet 4, which means your code may touch multiple third-party APIs depending on the agent mode you’re using.

The testimonials mention people appreciating the “level of polish” compared to other tools, suggesting that Amp is doing something different in how it orchestrates model calls. But the underlying trust model is the same. You’re giving an AI agent write access to your repository and hoping it doesn’t do something catastrophic. The risk isn’t malicious behavior, it’s that the agent misunderstands requirements and makes changes that look right but break subtle invariants.

I’m running Amp on a dedicated branch and reviewing every commit before merging to main. That’s the responsible approach for production code. But I’ve seen people on Twitter running it directly on their main branch, which is either brave or reckless depending on how good their test coverage is.

What Makes This Different From Cursor

I’ve been using both Cursor and Amp for the past month. Cursor is great for inline suggestions and chat-based coding. You’re still driving, Claude or GPT is suggesting. Amp inverts that. The agent drives, and you review. For small changes and exploratory work, Cursor’s approach works better. For large refactorings and systematic changes, Amp’s approach is more productive.

The multi-agent coordination is Amp’s real differentiator. Cursor has a single model helping you. Amp can bring in specialized agents for different subtasks. When I’m migrating a database schema, Librarian searches the docs for migration best practices while Oracle plans out the schema changes. That coordination reduces the number of times I need to interrupt the agent to provide context.

The trade-off is that Amp has a steeper learning curve. You need to understand which agent to use for each task, how handoffs work, and when to use Deep mode versus Standard mode. Cursor is just “talk to Claude in your editor.” That simplicity has value, especially for developers who don’t want to think about agent orchestration.

I’m using both. Cursor for quick edits and pair programming sessions. Amp for refactorings and architectural changes that touch many files. The right tool depends on whether I want to maintain tight control or delegate the entire task.

The Bottom Line

Amp is production-ready for developers who are comfortable reviewing AI-generated code and working on feature branches. The ability to hand off entire refactorings to specialized agents is a real productivity gain for the right kind of work. But it requires trusting an AI to make architectural decisions, and not everyone is ready for that yet. I’m cautiously optimistic that this is where coding tools are headed. We’ll see if I still feel that way after the agent inevitably breaks something important.