Disclaimer: I shamelessly borrowed the title of this post from the insightful post by @sofialomarts discussed below. @Sofia, if you end up reading this, thank you, and I hope you don’t mind. Let me know if you do and I’ll edit the post accordingly. I would also love your feedback. Cheers!

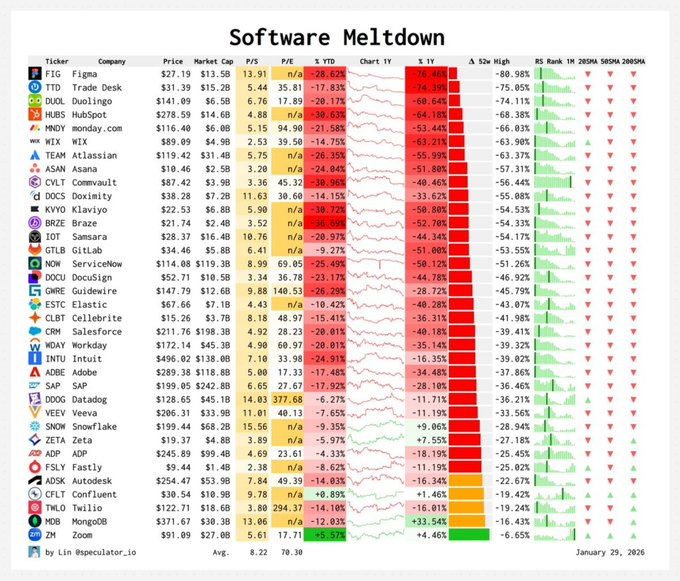

In the past few weeks, there’s been a lot of fuss about renowned software engineers no longer writing a single line of code (including the creator of Claude Code), and some people have proclaimed the “SaaSocalypse” after the meltdown in SaaS stocks.

All of this fuss got me thinking about the future of software engineering, and of knowledge work in general, and what it will look like as AIs become more and more capable. Is this all a big bag of hype, or is it really our fate to be jobless in the near future?

Fortunately, as I was being flooded with all of this doomerism, I also came across a few really interesting and insightful posts from people smarter than me who helped me form an informed opinion about what is happening in the field. Is there truth in all this hype? Let’s jump right into it!

If you are one of those people constantly scared of how AI is evolving, have you thought deeply about what makes you so anxious? Some may mention existential risk, others economic and societal turmoil, but I feel that what we really worry about in the short term is irrelevance.

This post perfectly frames the complexity of our current fear of AI, especially if you work in the field of AI yourself.

“I’m scared of […] losing the momentum and becoming outdated and irrelevant in real-time. That I spent so much time trying to appear knowledgeable that I forgot to actually learn anything.

While everyone around me is building, shipping, making absurd money from glorified wrappers and vibe-coded MVPs, I’ll still be here overthinking, consuming content about AI instead of actually using it, watching my window close in real-time.”

Who hasn’t felt like that at some point in the past few months? When you open your Twitter feed, it immediately hits you with a bombardment of news about AI achievements, absurd fundraising rounds, or yet another model crushing the previous leader on some benchmark. There is no way to keep up unless we are connected 24/7.

But is this actually true? Think about the people around you (unless you are in SF, in which case you may not be a good example). Does anyone outside of your X feed know about transformers, RLVR, or the craziness around moltbook? Why aren’t they scared about the pace of AI progress? My wife keeps hearing me talk about all of these things, and I don’t see the slightest concern in her eyes.

Is this because we will be the first ones hit by AI progress and the upcoming automation, while it will take longer for ordinary mortals to be affected (hence our worry and their ignorance)? Are we just overreacting to the most recent events? Or do we really know better than them?

“You’re scared of AI, but really, you’re scared of irrelevance, […] or becoming obsolete.”

Sadly, under this mindset, family, friends, hobbies… all become distractions if you want to stay relevant.

“Because in most industries, you can learn something once and coast on that knowledge for years. But in AI? The half-life of knowledge is measured in weeks, sometimes days”.

This excerpt from the post should make you feel a bit better. Spoiler alert: we all feel the same way.

“The treadmill is accelerating (and you can’t step off)”, a “crushing awareness that no matter how hard you work, you will never catch up […]. This creates a psychological trap. You know you need to keep learning, but the volume of things to learn is genuinely impossible to absorb.”

“Everyone is faking it (and that’s actually fine). The imposter syndrome in AI is universal. The field is moving so fast that expertise in one area doesn’t translate to expertise in another, and the moment you feel confident about something, it’s probably already changing. I realized this when I stopped trying to know everything and started getting comfortable with knowing enough.”

I’ll share my recipe for overcoming this in more depth at the end of this post, but the way I personally deal with this anxiety is by focusing on first principles: understanding the foundations well enough to derive new advancements from them. I try to build my mind like a set of Lego bricks made of fundamental principles that I can compose into a deeper understanding.

And if you think about it, this makes total sense. Look at the most recent developments and achievements in AI: most of them build on the transformer architecture. If you have solid ML fundamentals and understand the transformer architecture, you can readily move on to the fancier concepts of MoE, RLVR, RLHF, sparse attention, KV caches, and inference-time compute.

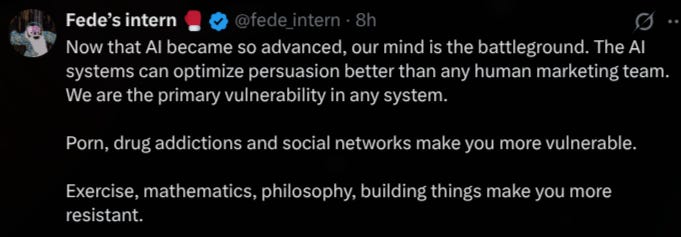

“Your edge isn’t technical knowledge. It’s taste. The skill of knowing when to use AI and when not to, when to trust the output and when to override it, when a capability matters and when it’s just impressive-sounding noise.”

“The fear never goes away. You just get better at using it.”

This taste is built by reading difficult books. Poetry. Watching films that don’t resolve neatly. Documentaries that connect you with experiences and cultures very different from your own. Leaving your phone at home. Having philosophical conversations that go nowhere. Going to museums. Standing in front of photography you don’t immediately get. The things that feel unproductive by 21st-century standards are exactly what make you better at this.

In this fight against irrelevance, many of us are losing the ability (and the opportunity) to think hard about problems and solutions.

“Vibe coding satisfies the Builder. It feels great to pass from idea to reality in a fraction of the time it would take otherwise. But it has drastically cut the times I need to come up with creative solutions for technical problems. I know many people who are purely Builders, for them this era is the best thing that ever happened. But for me, something is missing.

I’m not sure if my two halves can be satisfied by coding anymore. You can always aim for harder projects, hoping to find problems where AI fails completely. I still encounter those occasionally, but the number of problems requiring deep creative solutions feels like it is diminishing rapidly.”

Reading this prompted me to think about the last time I really thought deeply: a hard problem that pushed me to a whiteboard and demanded a few hours of uninterrupted quiet time.

And you know what I realised? It has always happened when I got obsessed with a problem, and with the immediacy of everything, that happens less and less these days. Which is sad.

Deep thinking comes from figuring out how to solve real problems with real solutions. While AI will be able to build the solution, you still need to think deeply about how that solution fits the problem and whether there are better approaches, and then steer it toward a solution that satisfies what you want.

Let’s get this out of the way: I don’t think software engineering or computer science as a discipline will disappear any time soon, but those in the industry will definitely need to adapt. AI has introduced a higher level of abstraction to software engineering. Traditionally, the software engineering role involved writing the code for the system being implemented, and that may no longer be the case.

Instead of using a programming language to describe the system’s logic, we use plain English.

The skills required to produce that software will change. We will all need to think like systems engineers and product managers, treating software modules and algorithms as black boxes that need to be assembled the right way. You won’t need to know how to implement merge sort, but you will need to reason about its complexity and whether it fits the problem at hand.

I come from an electrical engineering background, where this has been the case for some time. I wouldn’t be able to implement a shift register myself (well, actually, maybe I could), but I know how it works and when to use it. We treat electronic components as black boxes. This is the shift that software engineering is now undergoing.

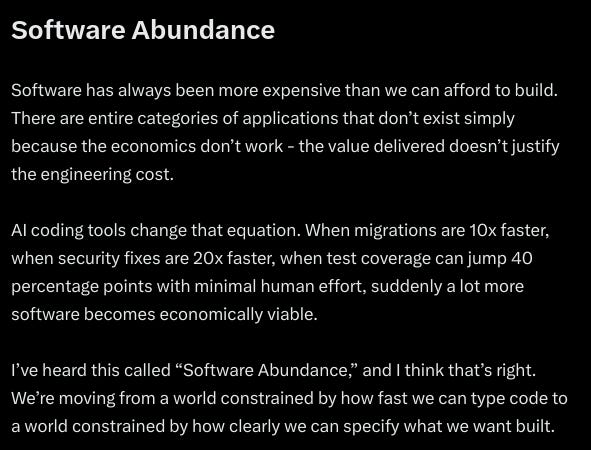

“Every abstraction layer – from assembly to C to Python to frameworks to low-code – followed the same pattern. Each one was supposed to mean we’d need fewer developers. Each one instead enabled us to build more software.

“The barrier being lowered isn’t just about writing code faster. It’s about the types of problems that become economically viable to solve with software.”

“The pattern is so consistent that the burden of proof should shift. Instead of asking ‘will AI agents reduce the need for human knowledge workers?’ we should be asking ‘what orders of magnitude increase in knowledge work output are we about to see?’”

“The paradox isn’t that efficiency creates abundance. The paradox is that we keep being surprised by it.”

I think we can all agree by now that software is not dead.

“The lesson here is that whatever the world thought would end just ended up being vastly larger than anyone thought. And the thing that people thought would forever be replaced was not simply legacy but ended up being a key enabler.”

The Internet was supposed to kill retailers, yet look how healthy Costco’s and Walmart’s businesses are today. Local stores definitely took a hit, a brutal one, but not an existential one: it forced them to adapt to what the Internet entailed. This is what I think is going to happen with AI, software engineers, and software companies.

“AI-enabled or AI-centric software is simply moving up the stack of what a product is”. Think of how finance has changed since the early ’90s and what you can do now thanks to the Internet.

Domain experience will become far more important than it is today, because every domain will become vastly more sophisticated. I’ve been saying it for some time now (and have seen others say it too): context is king in the age of AI, and this applies to models and people alike.

We’ll need to adapt, and if we are building for the future (note to founders, myself included), we should escape the hype by focusing on the things that won’t change in the coming decades. People will still need to eat, pay for stuff, and move things around.

If we don’t want to become irrelevant, we need to avoid delegating all our skills and knowledge work to AIs. This is what the team at fast.ai advises in this post on the dark side of vibe coding.

“The results of vibe coding have been far from what early enthusiasts promised. Well-known software developer Armin Ronacher powerfully described some of the issues with AI coding agents. ‘When [I first got] hooked on Claude, I did not sleep. I spent two months excessively prompting the thing and wasting tokens. I ended up building and building and creating a ton of tools I did not end up using much… Quite a few of the tools I built I felt really great about, just to realize that I did not actually use them or they did not end up working as I thought they would.’”

They even draw a great analogy between vibe coding and gambling:

- Vibe coding does not provide clear clues of how well one is performing (and even provides misleading losses disguised as wins).
- The match between challenge level and skill level is murky.
- It provides a false sense of control in which people think they are influencing outcomes more than they are.

The US offshored much of its manufacturing to China around the turn of the century and is now trying to recover from the second-order consequences. In the same way, we should be wary of delegating everything to AIs.

People who go all in on AI agents now are guaranteeing their obsolescence. If you outsource all your thinking to computers, you stop upskilling, learning, and becoming more competent.

If we accept that software isn’t dying but evolving, the question becomes: how do we evolve with it? Here is the recipe I’m using to keep fear at bay and stay relevant:

1. Double down on first principles and become a generalist. Understanding how things work, why they work, and the inevitable trade-offs involved is now more important than ever. If you understand the underlying principles, architecture, and core technology, the specific tool you use to build with matters less.

Additionally, I think people who know the foundations of several fields well will be able to come up with more creative ideas and thrive in a world of narrow thinking.

2. Reclaim the time to think deeply. Short-form video content, and the instant dopamine boosts we now get from tools like Claude Code, might be some of the worst inventions of the 21st century for our cognitive health. They train us to expect immediate resolution.

To counter this, I am intentionally slowing down. Reading long, difficult books. Having hard conversations that don’t have clear answers. Dumping raw thoughts into a physical notebook. Walking in nature, meditating, or simply sitting with a problem without reaching for a device. These habits, which feel “unproductive” by modern standards, are exactly what build the mental resilience required to solve complex problems.

3. Aggressively invest in your own adaptability. Technologies become obsolete in months (sometimes weeks), but the ability to learn and understand new ones won’t. You’ve probably heard this already.

Going all-in on AI agents to avoid the hard work of learning is a shortcut to obsolescence. Use AI to move faster, not to skip the gym. This is why learning the core principles is more important than ever: they are the foundation you build your knowledge and understanding from.

4. Cultivate taste over speed. We must identify real problems. In a world where building is cheap, deciding what to build and where to spend your time is the premium skill. Aesthetics will become increasingly relevant: as AIs produce more and more look-alike outputs, the beautiful and sloppy human touch will be highly appreciated (take this sloppy post as an example 🙂).

5. And have fun! Always have fun with what you do, and if you are miserable, try to find something else. We spend half of our lives working, so you’d better turn it into something you enjoy as much as a hobby.

As a good friend of mine always tells me: “we live in interesting times”. The future belongs to those who can use AI to build, but rely on their own deep human experience to decide what is worth building.