This week’s newsletter is sponsored by Buf.

Stop Breaking Your APIs: Workshop on Protobuf API Governance

As organizations grow, the cost of a single “minor” API change can lead to hours of cross-team debugging. Developers often hit a wall with JSON: it’s slow, bulky, and lacks the strict types needed for reliable microservices.

Protocol Buffers (Protobuf) is the industry standard for type-safe, compact data handling, but managing it across teams can be complex. Buf makes it easy.

Join Buf’s live online workshop on Feb 19 to learn how to:

- Standardize APIs: Eliminate the friction between your backend, web, and mobile teams.
- Prevent Breaking Changes: Catch errors before they hit production.
- Boost Velocity: Auto-generate code and documentation so your engineers can focus on shipping features.

The session includes a live Q&A throughout to help you rethink your API strategy for 2026.

Thanks to Buf for sponsoring this newsletter. Let’s get back to this week’s thought!

With the speed of writing code increasing due to AI coding tools, skills like critical thinking, pure problem-solving, and being good at reviewing/verifying are more important than ever.

But what’s alarming is that a lot of engineers increase their raw output without verifying that it is actually of the right quality.

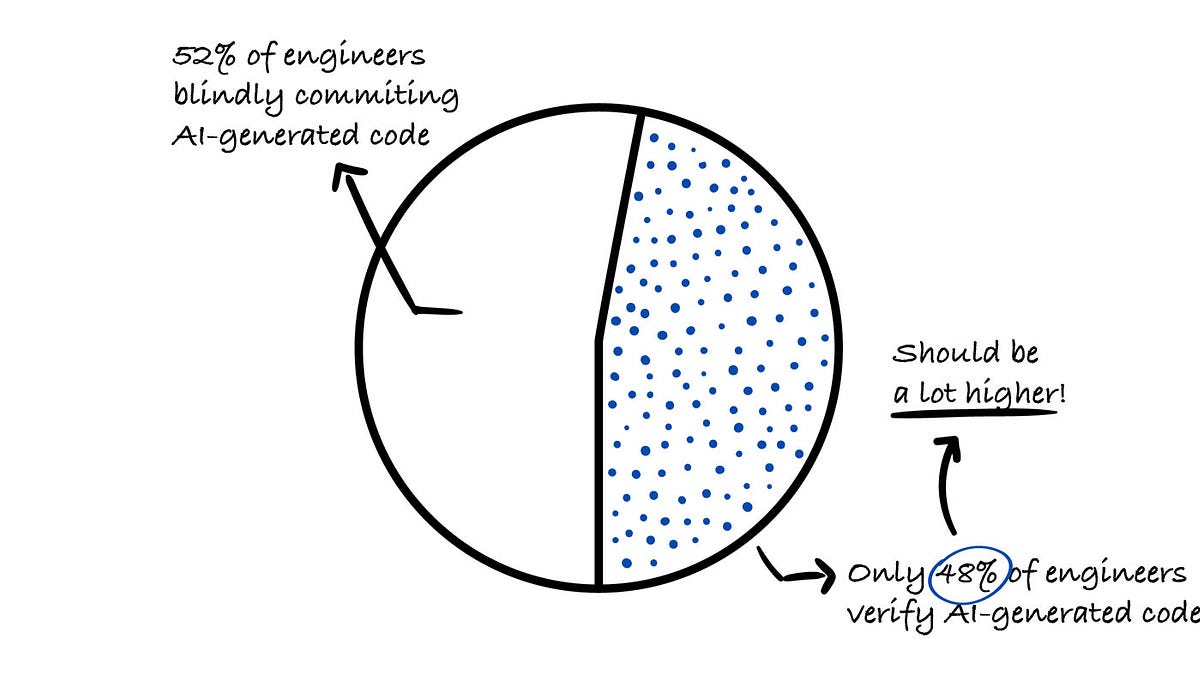

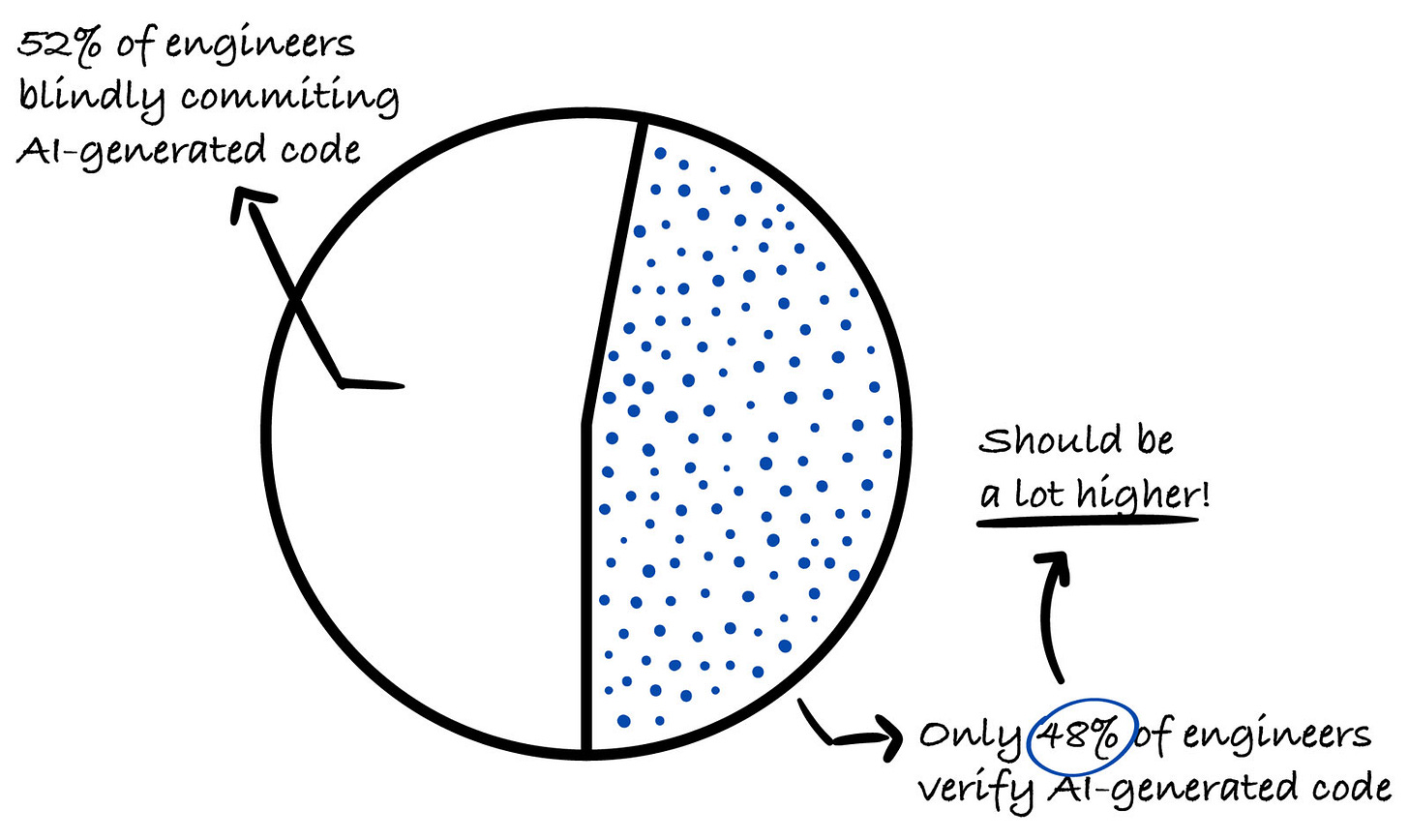

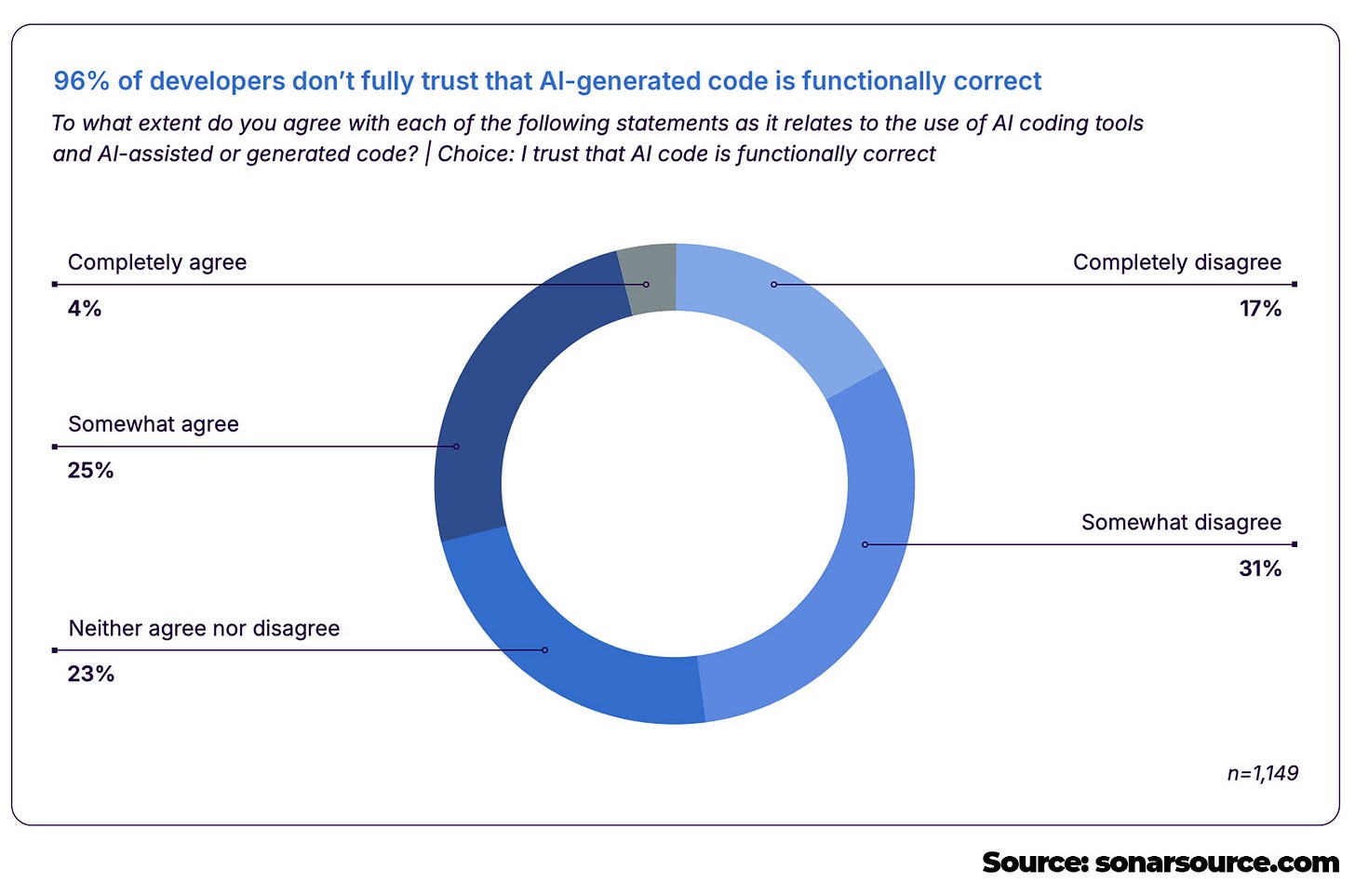

Based on the recent State of Code Developer Survey Report from Sonar, a lot of engineers (96%) don’t trust the output, which is a decent number, but only 48% always verify it, which is alarming in my opinion.

Some other interesting data from the report:

-

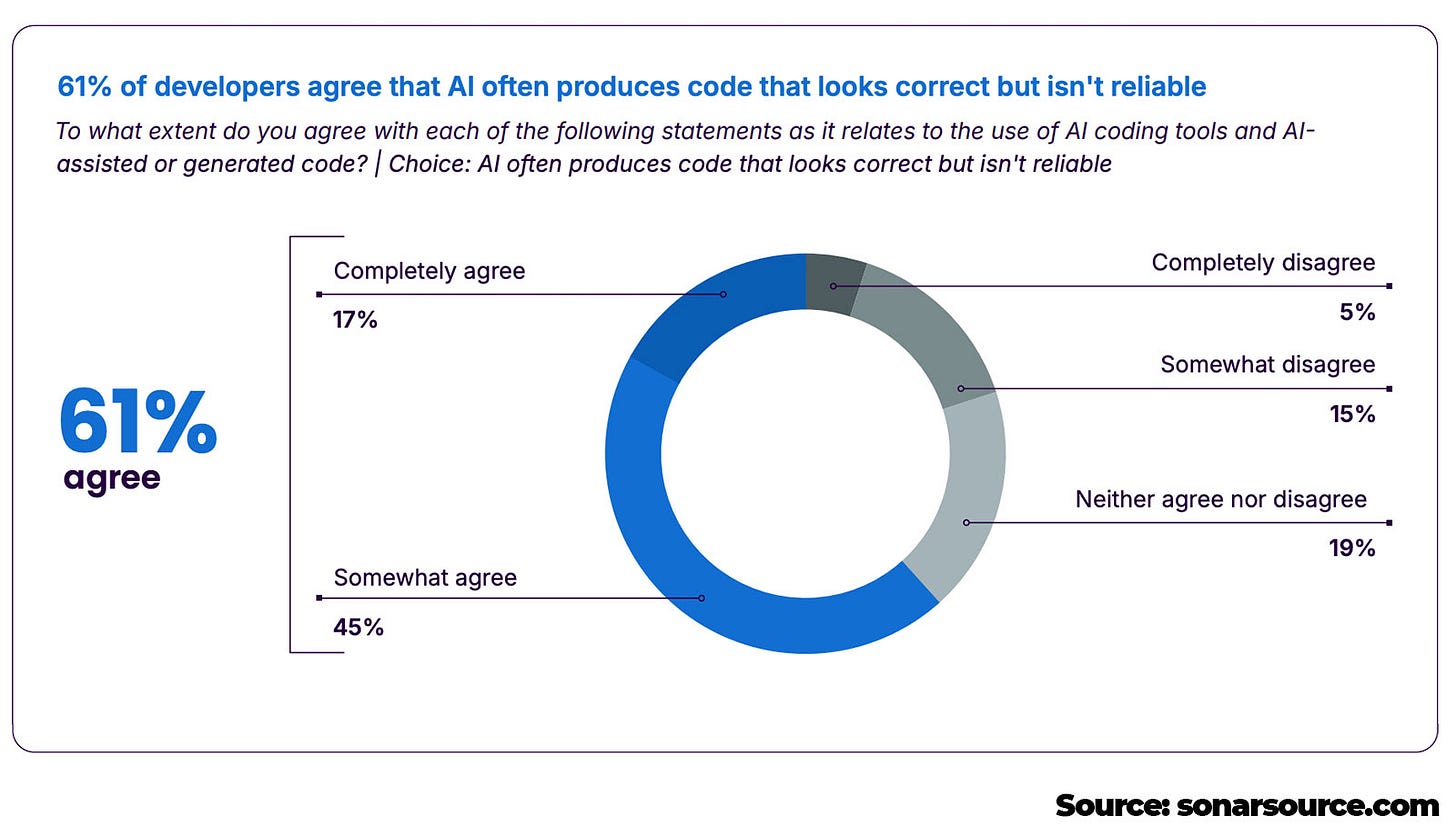

61% agree that AI often produces code that looks correct but isn’t reliable

-

57% of engineers worry that using AI risks exposing sensitive company or customer data

-

35% of engineers use AI via personal accounts

I’ve taken a close look at the report, and in this article, I am sharing the data from its most important parts, along with my thoughts on them.

Let’s start!

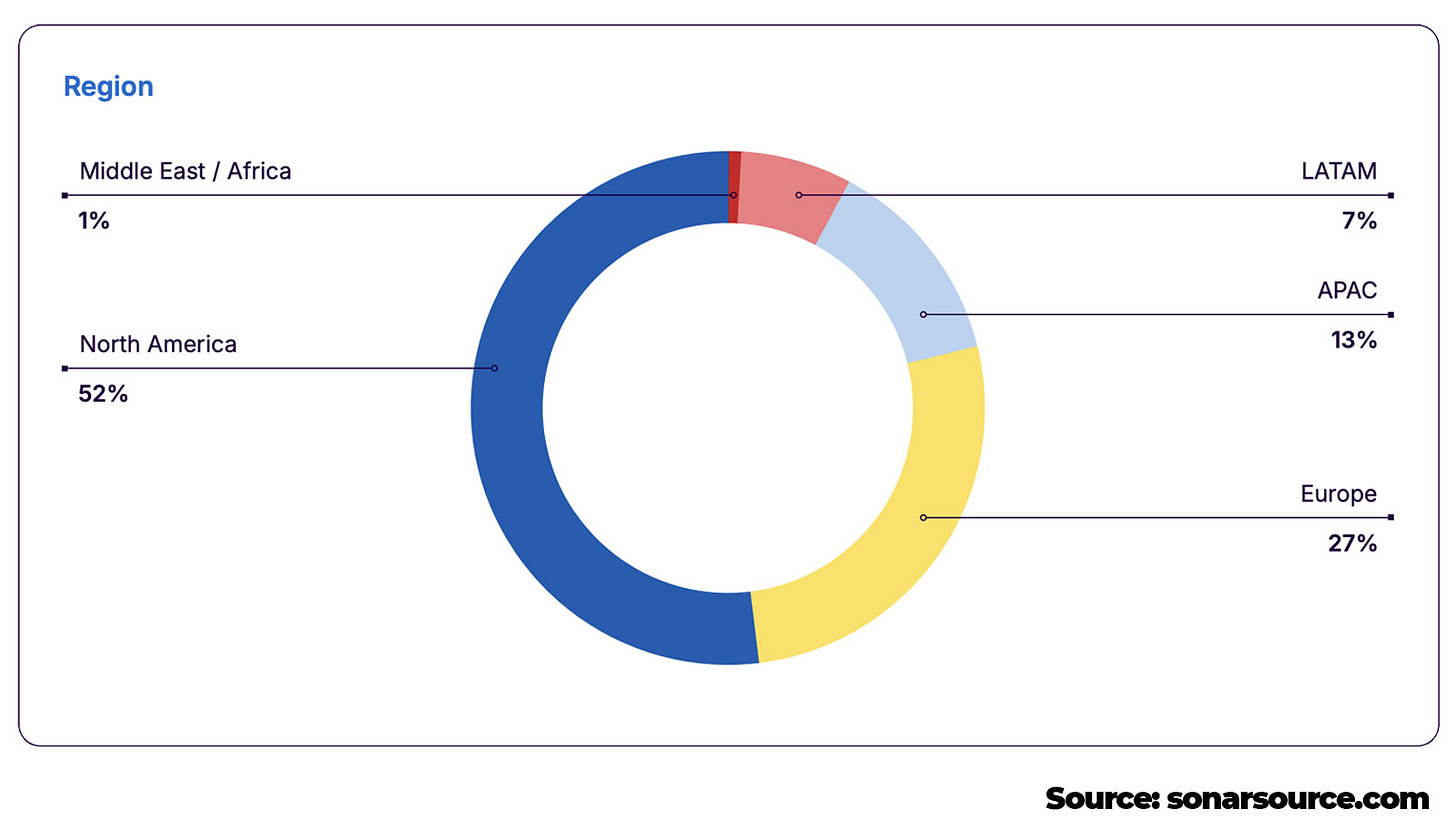

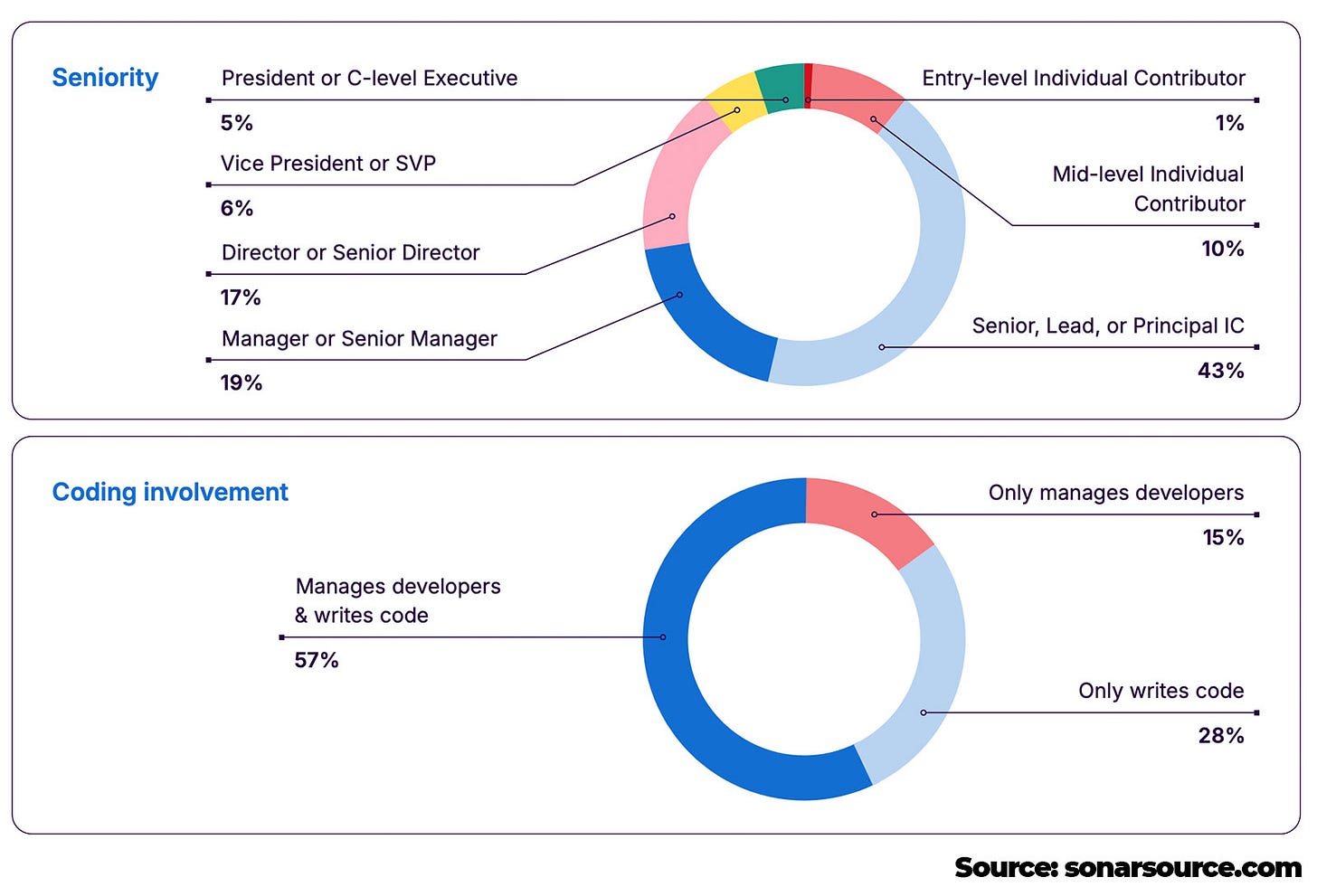

The survey is based on 1,149 respondents, either full-time employed or self-employed in a tech role, with the majority working in software engineering.

All of the respondents either write code or manage engineers and had used AI as part of their job during 2025. The survey was conducted in October 2025.

Let’s start with how engineers are using AI in current times.

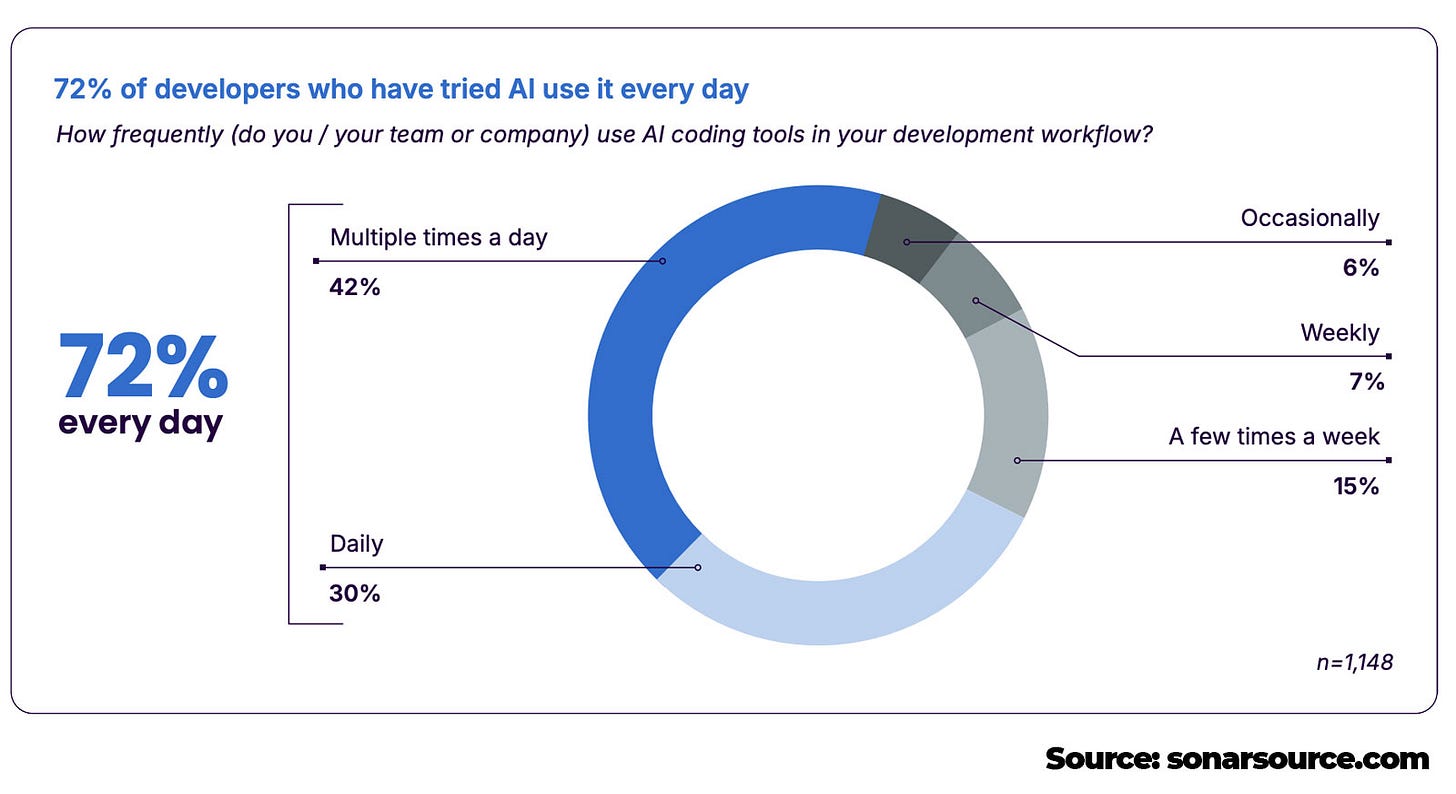

There is no surprise here, except I’d even expect the number to be higher. AI-assisted engineering is the best way to build software these days, and I definitely recommend that everyone use it daily.

This data also confirms my thoughts that I mentioned above.

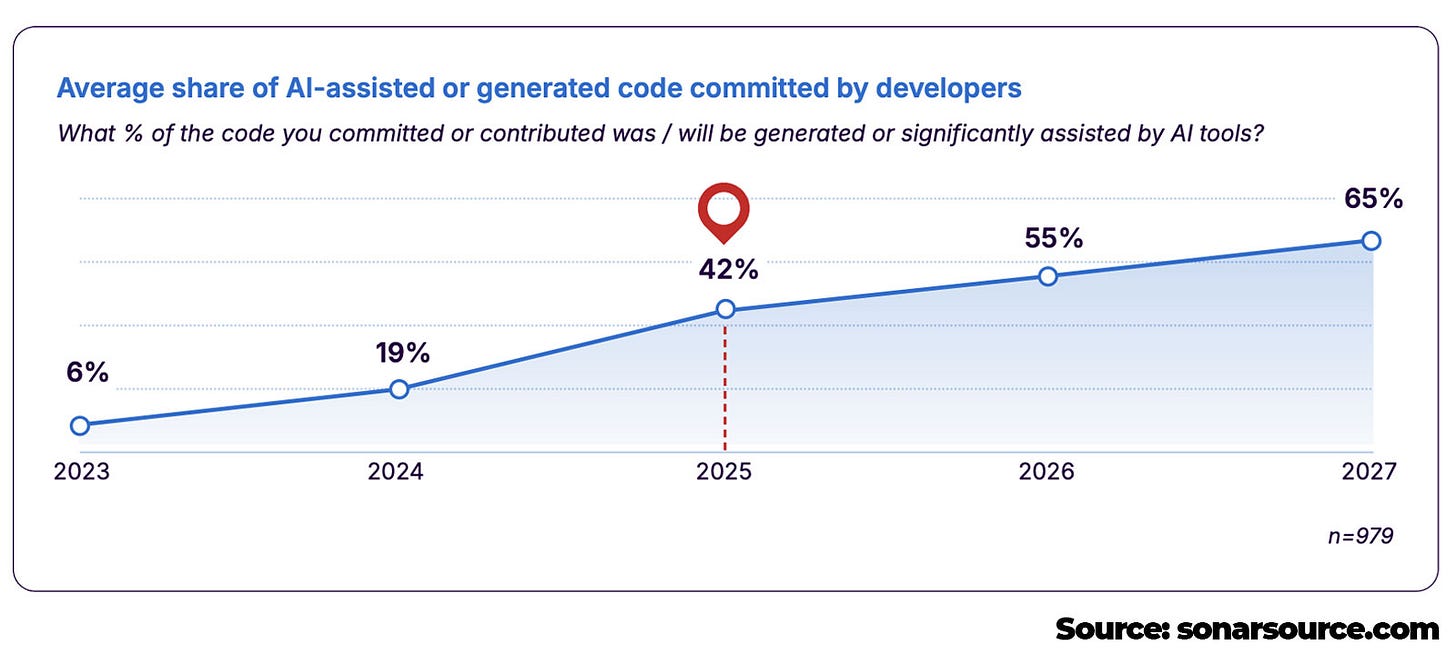

AI-assisted engineering is only going to become more important, and as we can see from the data, the engineers surveyed expect the share of AI-generated code to rise from 42% today to 65% in 2027.

I agree with that, and it matches what I am hearing in discussions with many engineers and engineering leaders across the industry, especially in a recent discussion with the Engineering Lead from the OpenAI Codex team (deep dive coming soon).
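To put the expected jump in perspective, here is a quick back-of-the-envelope calculation. It uses only the 42% and 65% figures quoted above; the function name is just an illustrative choice, not something from the report.

```python
def share_change(current_pct: float, expected_pct: float) -> tuple[float, float]:
    """Return (change in percentage points, relative change in percent)."""
    point_change = expected_pct - current_pct
    relative_change = point_change / current_pct * 100
    return point_change, relative_change


# Figures quoted above: 42% of code is AI-generated today, 65% expected in 2027.
points, relative = share_change(42, 65)
print(f"+{points:.0f} percentage points, about {relative:.0f}% relative growth")
# prints "+23 percentage points, about 55% relative growth"
```

In other words, the surveyed engineers expect the AI-generated share of their code to grow by roughly half again in about two years, which also means roughly half again as much code that needs to be reviewed and verified.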

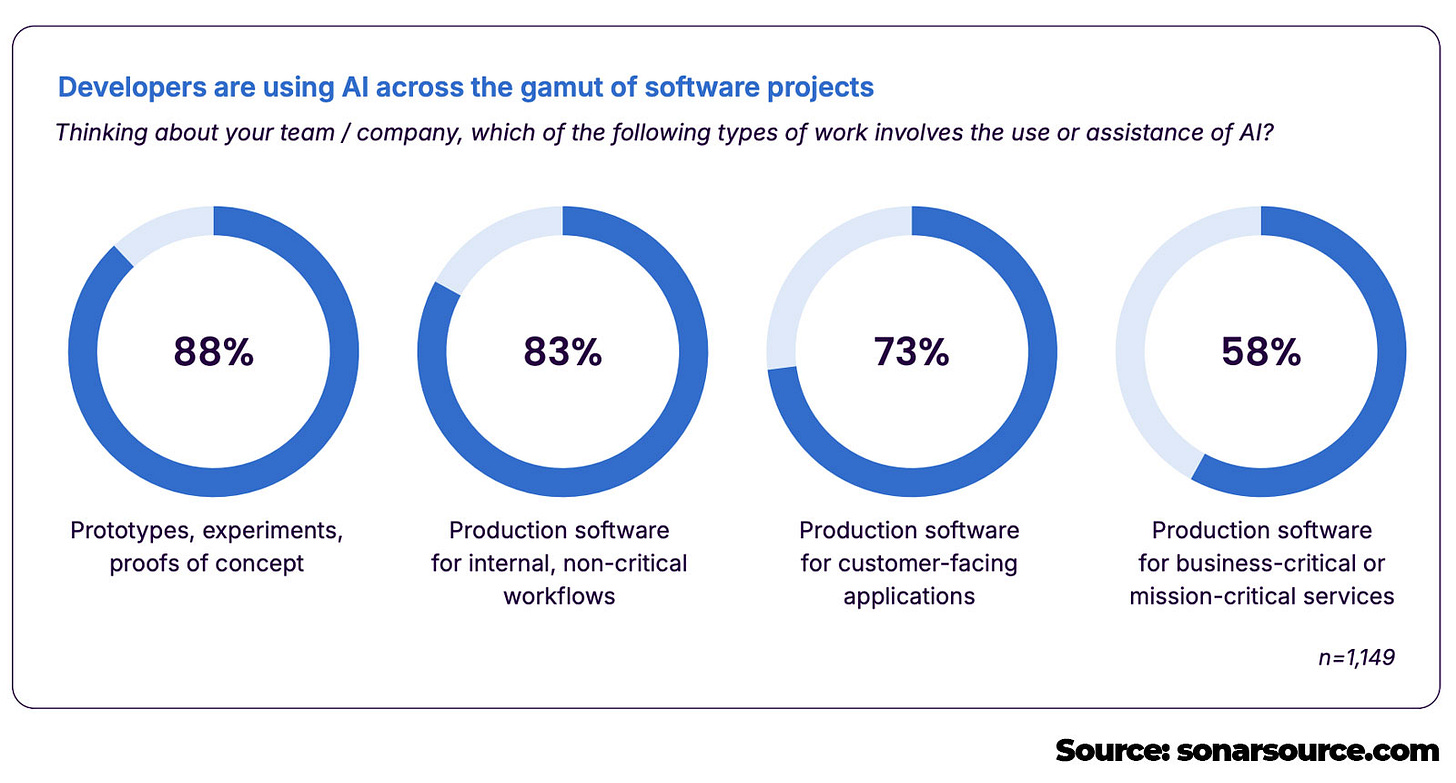

Prototypes and production software for non-critical workflows are where AI is used the most. Customer-facing apps and business-critical services are not far behind.

What I am hearing a lot from different engineering leaders is that building internal tools that make everyone more productive is a really great use case for AI.

You can quickly build an internal tool without focusing on quality as much, since it might no longer be needed in a couple of months.

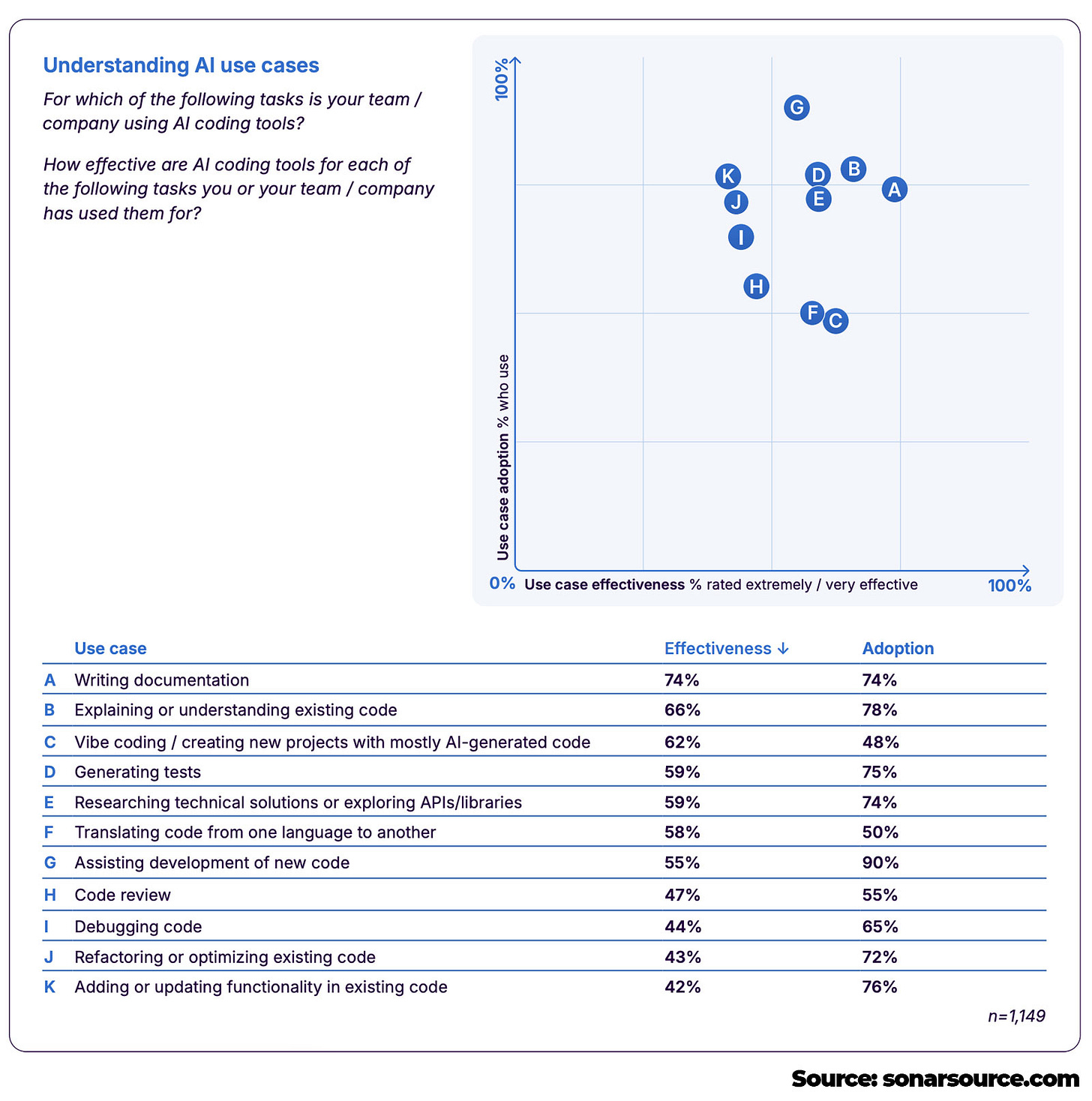

Writing documentation is the #1 use case, followed by explaining and understanding existing code.

I guess the options to choose from were more engineering-related, since note-taking in meetings and summarization of threads or messages are missing from the list.

Those 2 use cases have been mentioned to me by a lot of engineers and engineering leaders. To find out more use cases, I definitely recommend reading these 2 articles:

Let’s now go to a very important part of the report, and that is: how trustworthy is AI’s output.

I would like to see this number (the 96% who don’t trust the output) be 100%, as you should never fully trust AI-generated code, at least not at this time. You should always check that the generated code does what is expected, and not just blindly trust it.

I’ve seen too many engineers blindly creating PRs with poor AI-generated code, which can then be quite problematic for reviewers.

The accountability should always be on the engineer who is using AI and not on AI. That’s very important to understand.

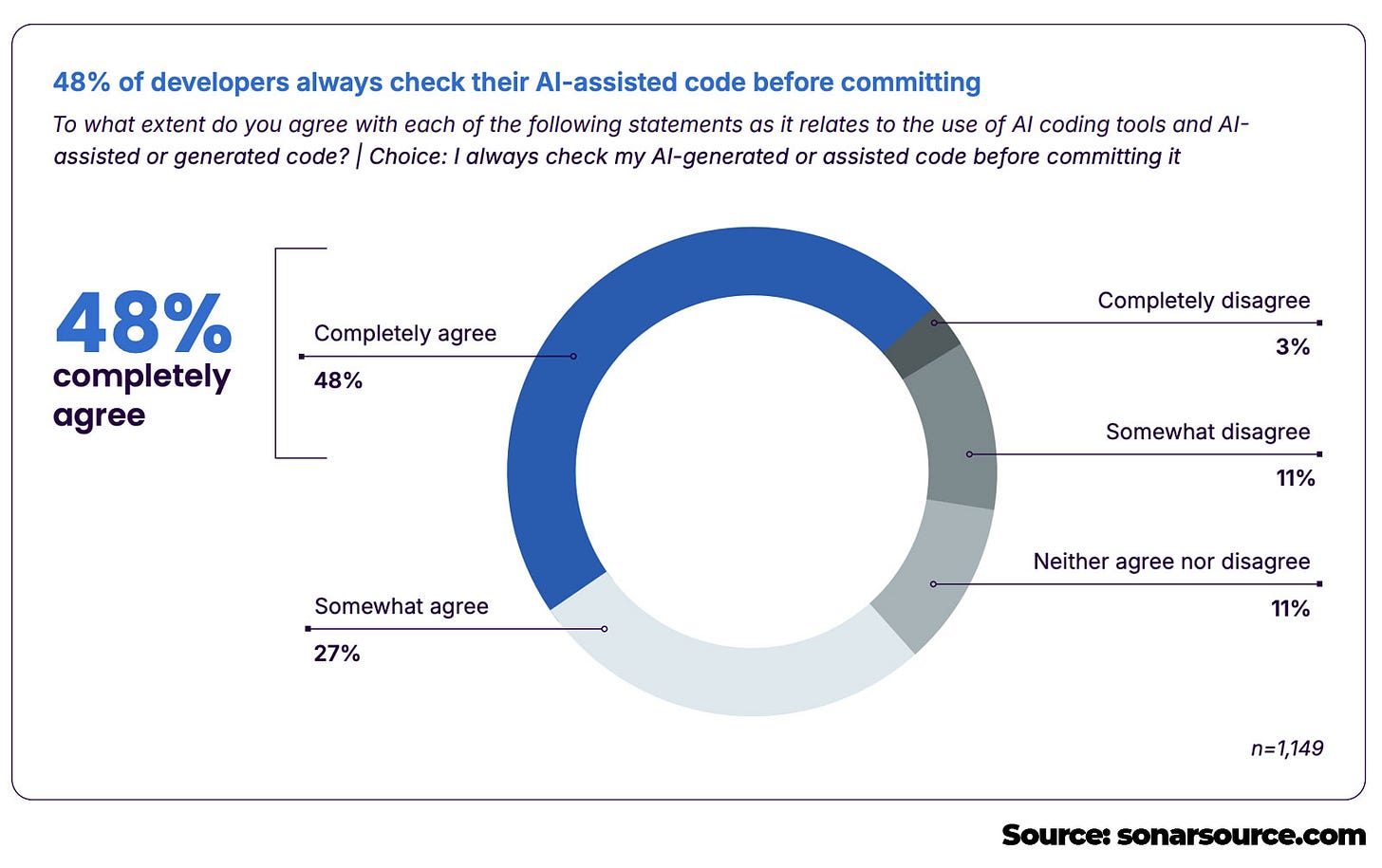

Now, this is the most alarming data from the report. Only 48% of engineers always check their AI-generated code before committing.

This is the exact reason why there are so many bad PRs being opened and why many engineers may hate AI in general. A lot of engineers use AI as a source of truth and do not take full accountability for the code.

Rather, they just submit a PR with poor-quality code and let the reviewers do the heavy lifting of checking all of it. From talking with many engineers, and also from my experience, this is really frustrating.

So, make sure to check your code before submitting a PR. If you submit a PR full of AI-generated slop, you’ll lose a lot of credibility in my eyes.

Again, I would love to see this percentage a bit higher, as it’s really important to ensure that the code is going to be reliable.

You do that by writing more accurate prompts and by adding context, constraints, and guardrails. And of course, check the output yourself and make sure it’s on point.
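As a rough sketch of what “context, constraints, and guardrails” can look like in practice, the snippet below assembles a structured prompt from those parts. Everything in it is an assumption for illustration: the `CodingPrompt` class, its field names, and the example task are hypothetical and not taken from the report or from any specific tool.

```python
from dataclasses import dataclass, field


@dataclass
class CodingPrompt:
    """Hypothetical helper that keeps the parts of a coding prompt explicit."""

    task: str
    context: list[str] = field(default_factory=list)
    constraints: list[str] = field(default_factory=list)
    guardrails: list[str] = field(default_factory=list)

    def render(self) -> str:
        """Build the prompt text, skipping any section that has no items."""
        sections = [f"Task:\n{self.task}"]
        for title, items in (
            ("Context", self.context),
            ("Constraints", self.constraints),
            ("Guardrails", self.guardrails),
        ):
            if items:
                bullets = "\n".join(f"- {item}" for item in items)
                sections.append(f"{title}:\n{bullets}")
        return "\n\n".join(sections)


# Example values are made up purely for illustration.
prompt = CodingPrompt(
    task="Add pagination to the orders list endpoint.",
    context=["The endpoint currently returns every order in one response."],
    constraints=["Follow the existing controller and naming patterns."],
    guardrails=["Do not change the response shape of other endpoints."],
)
print(prompt.render())
```

Writing the prompt this way does not replace the review step. It only makes it easier to see which context or constraints were missing when the output turns out to be off.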

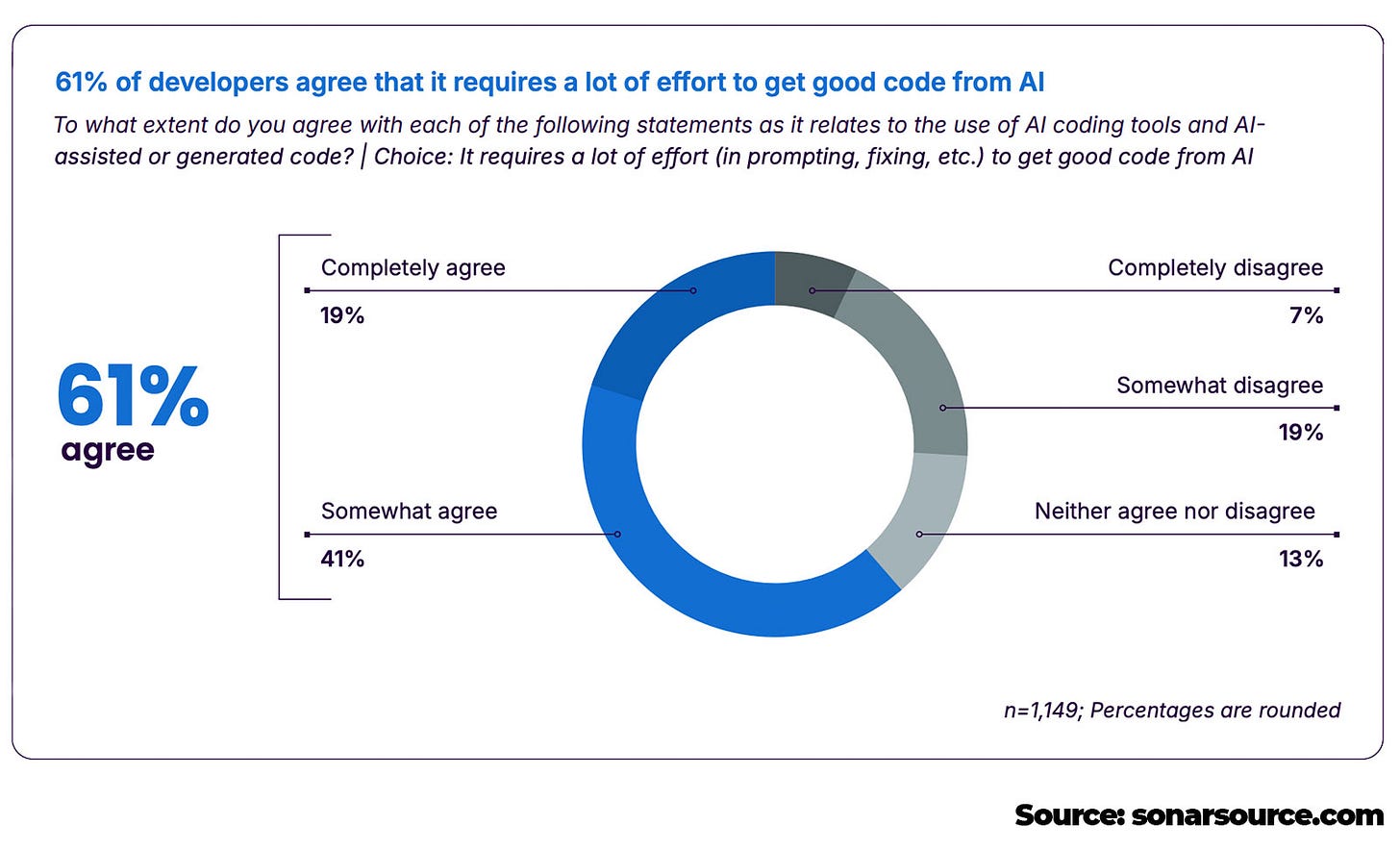

I agree with this. It depends a lot on which technologies you use and on how complex your codebase is. The choice of technology matters a lot.

A good example I am hearing is that a lot of startups these days decide to go with the Ruby on Rails framework to build their MVP.

The reason for this is that it’s a very opinionated framework, with clear guidelines and design patterns, while most JS frameworks leave you a lot more flexibility.

AI’s output works best when you have as many guardrails and constraints as you can. Angular, another opinionated framework, works well too.

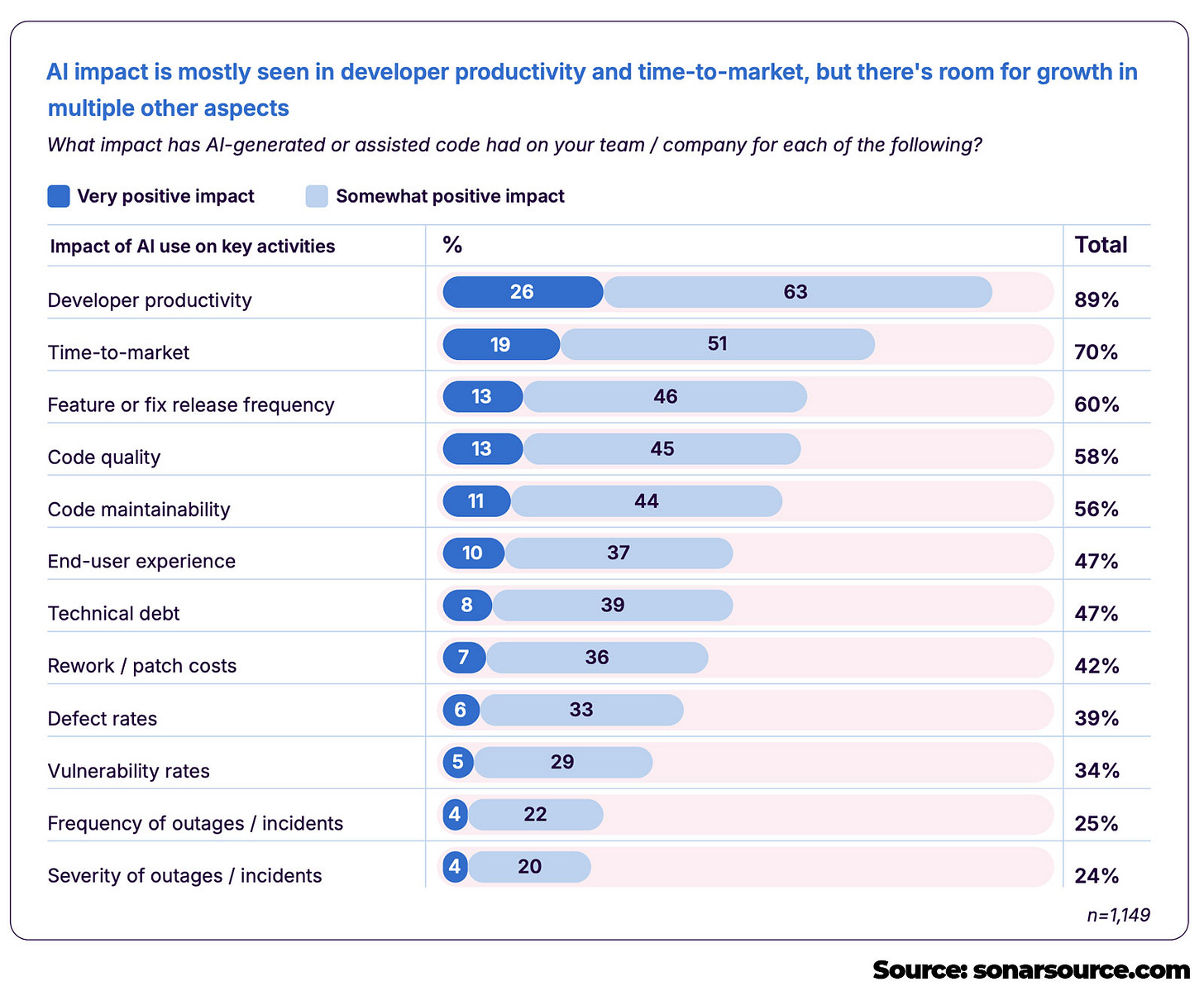

I would love to see an increase in feature or fix release frequency, code quality, and code maintainability as well! And I think those numbers are going to be higher next year.

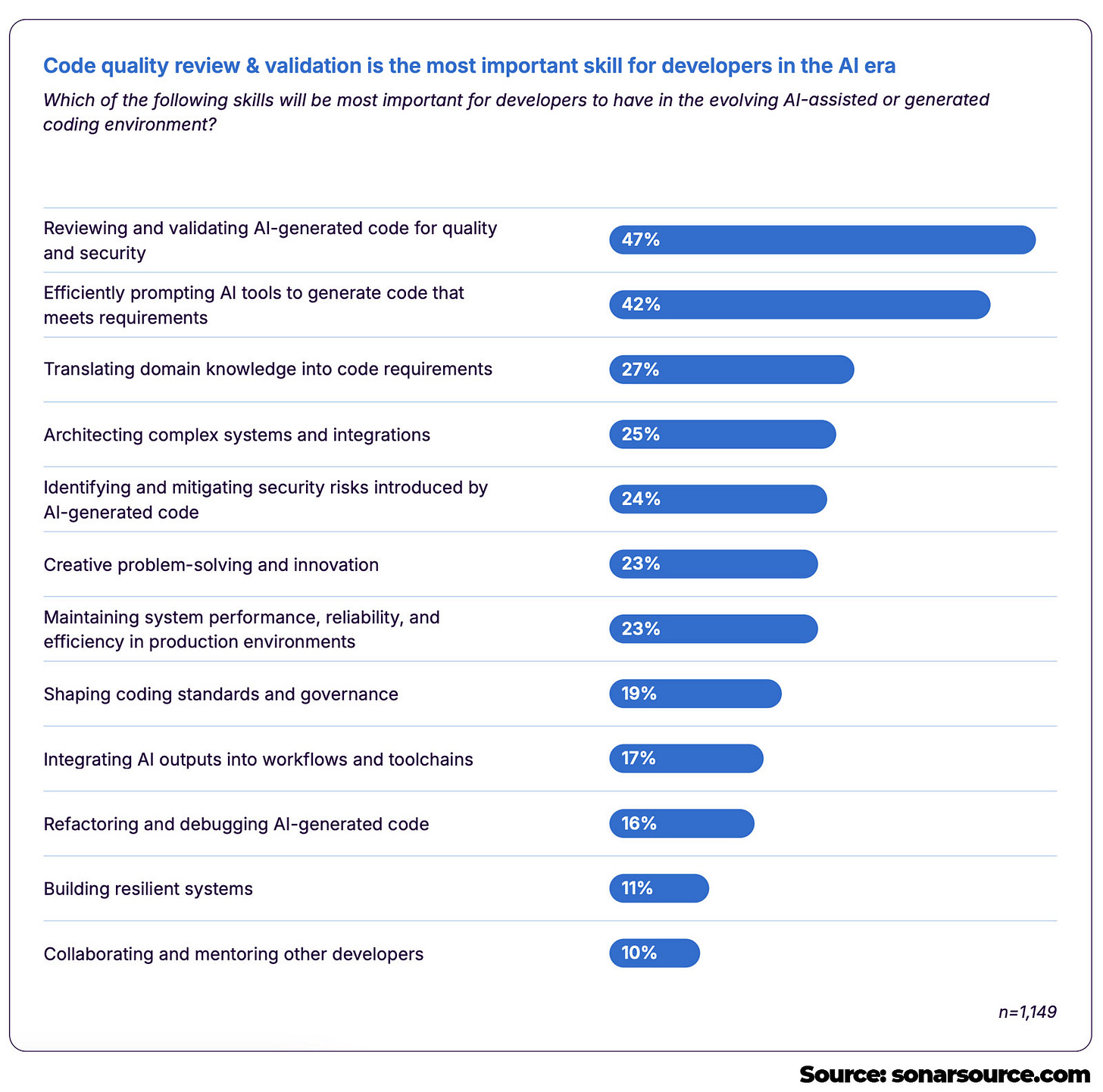

Based on the data, we can see that reviewing and validating code is the #1 most important skill, with efficient prompting also being very important.

To learn how to prompt well, read this extended guide I did together with OpenAI’s Product Lead Miqdad Jaffer:

And last, but not least, let’s take a look at the top AI tools and how engineers are using them.

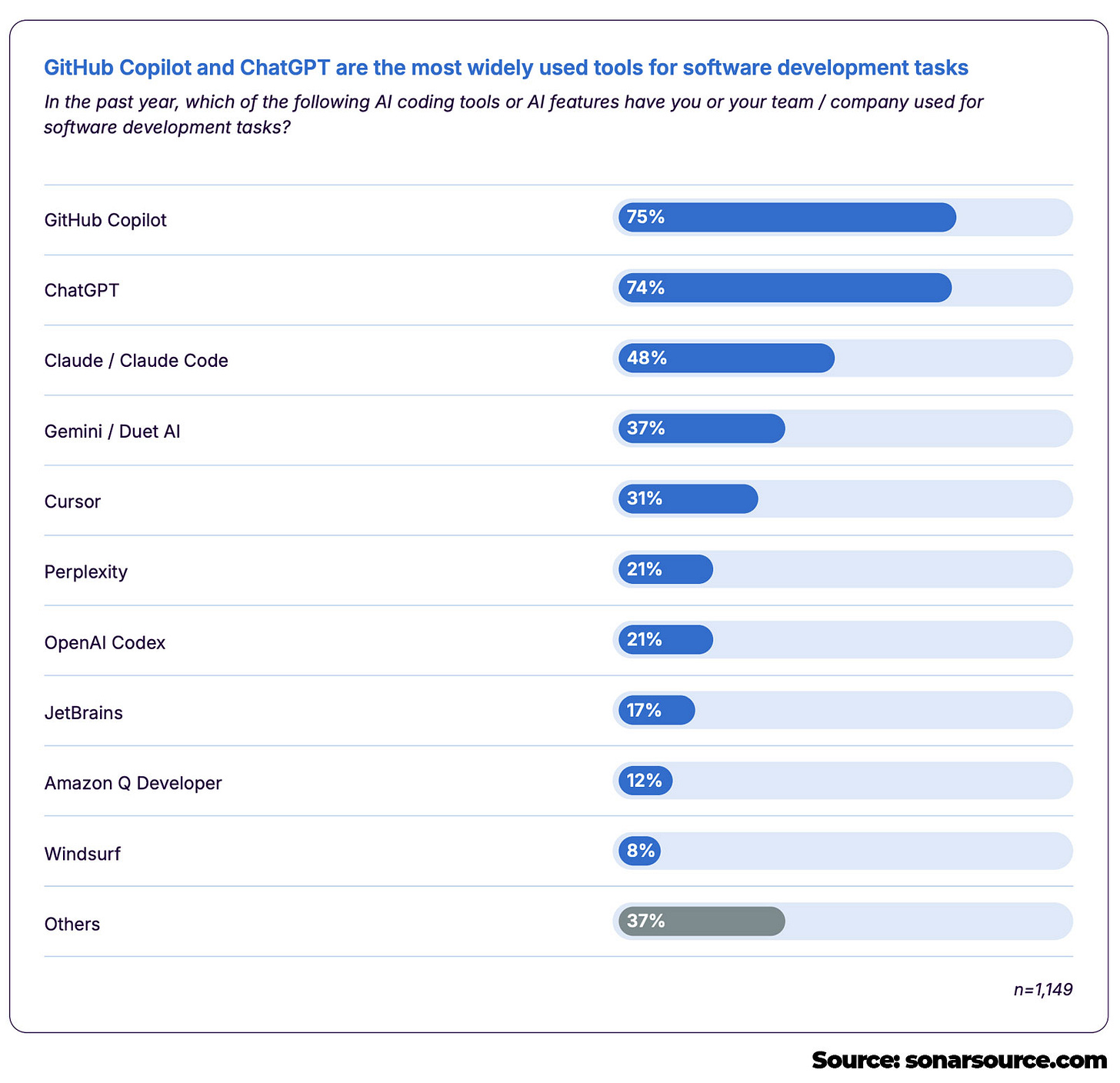

I’m a bit surprised that GitHub Copilot is on top, but I get it: it was one of the first AI coding tools available, and it’s used heavily among VS Code users.

ChatGPT in second place is, of course, no surprise. It is followed by Claude Code, Gemini, and Cursor. I think Claude Code would be a bit higher today, and OpenAI Codex would be higher as well.

There has been a lot of good feedback on Codex from engineers I talked to, and I tried it as well and liked it. The Codex app doesn’t give you the full IDE (which I miss), but overall, it gives you so many possibilities that you can use it for literally any task you are working on.

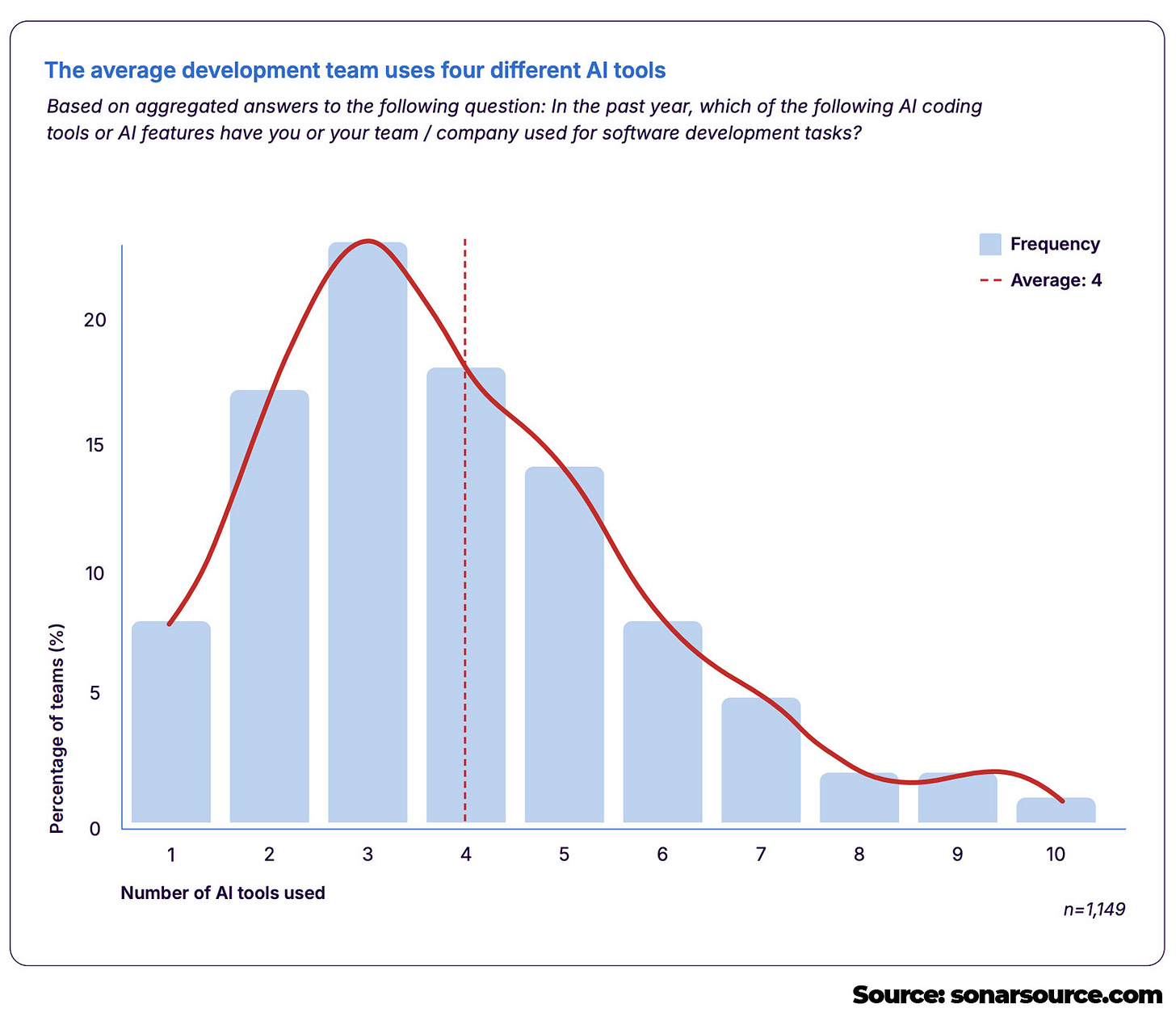

It seems that teams most commonly use 3 AI tools, while the average is 4. From my experience, 2-5 tools is a good range, and the data confirms it.

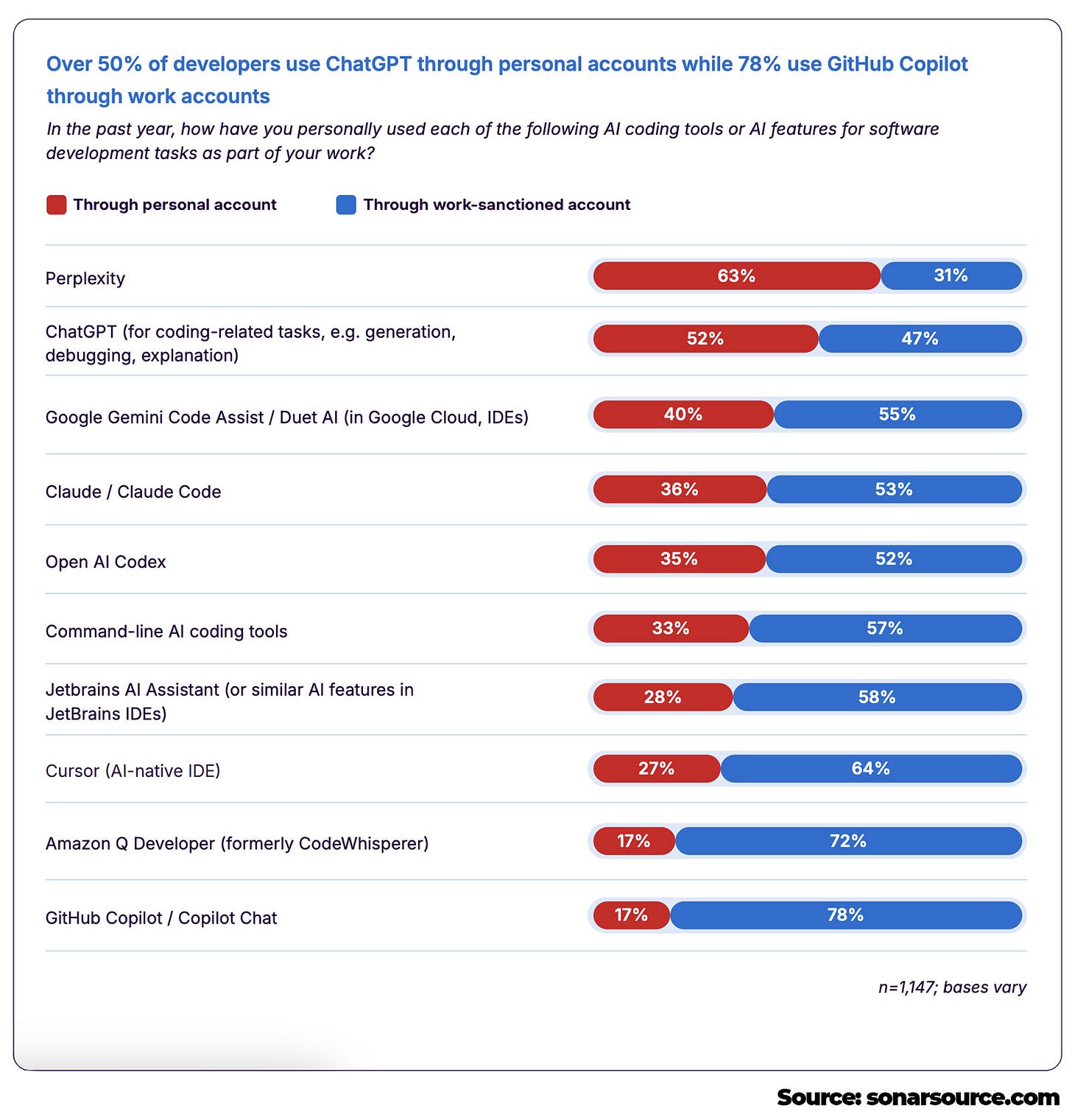

This is something that companies need to do better. It’s crucial to give your people the tools to be successful. Over 50% of engineers use ChatGPT through their personal account, and the percentage is high for other tools as well.

If you are an engineering leader reading this article, make sure to focus on giving your engineers the tools they need. Of course, my recommendation is not to try to provide ALL the tools, but to provide what the majority is asking for.

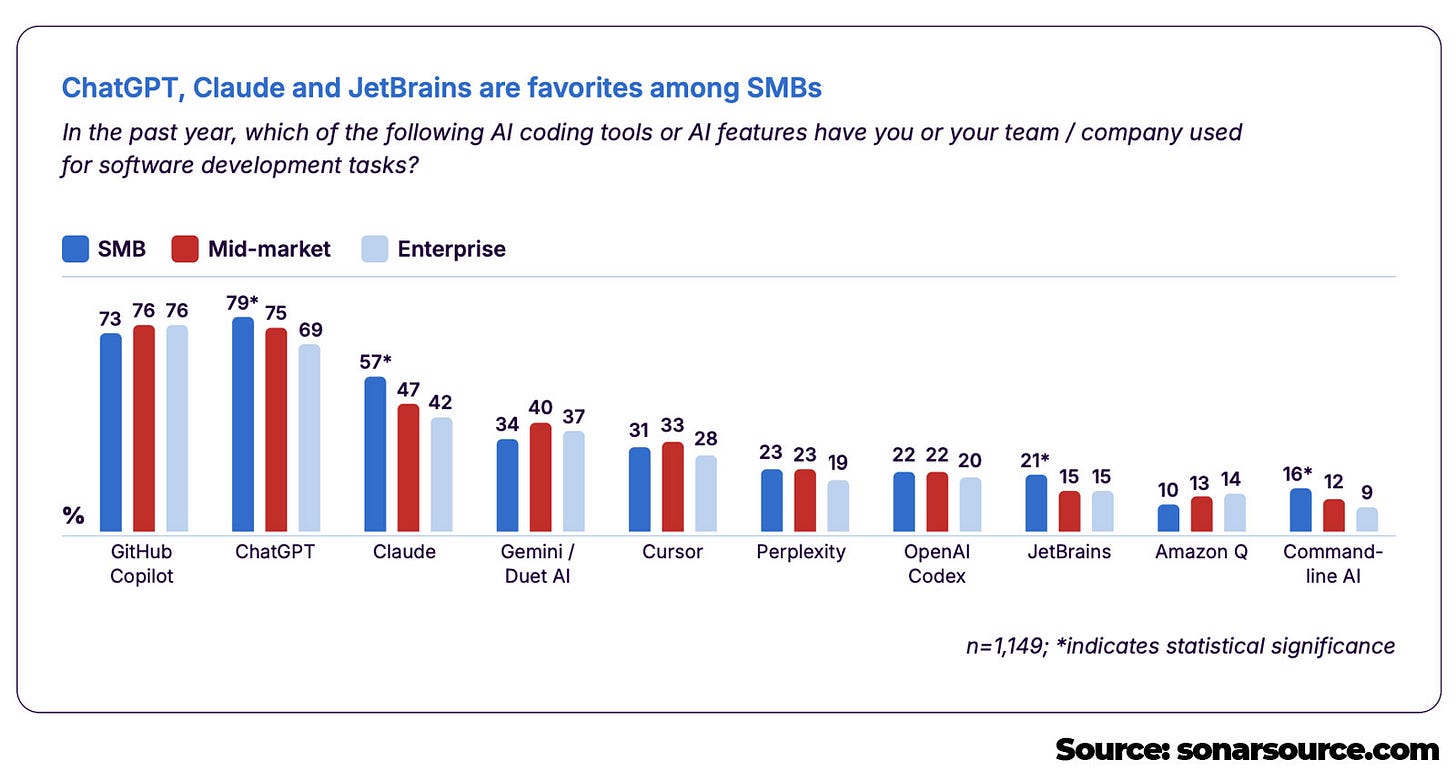

This is interesting data, especially regarding ChatGPT: the larger the company, the less it is used.

We can also see that Claude Code is used the most in smaller companies.

There is a lot of interesting data in this report, and one thing is certain:

Only 48% of engineers checking AI-generated code before committing is not enough!

We need to do better than this in our industry. Let’s end this article with the following:

The fastest way to lose your credibility is to blindly commit AI-generated slop, and it’s even worse if you blame AI for it.

The overall accountability should always be on you.

You got this!

You can find me on LinkedIn, X, YouTube, Bluesky, Instagram or Threads.

If you wish to request a particular topic you would like to read about, you can send an email to info@gregorojstersek.com.

This newsletter is funded by paid subscriptions from readers like yourself.

If you aren’t already, consider becoming a paid subscriber to receive the full experience!

You are more than welcome to find whatever interests you here and try it out in your particular case. Let me know how it went! Topics are normally about all things engineering-related, leadership, management, developing scalable products, building teams, etc.