So who wins the agent economy?

Wrong question.

Not who wins. Where does the mass concentrate? Mass attracts. Mass warps the field around it. And in every technology stack ever built, someone ends up fat and everyone else ends up thin. The question is which layer.

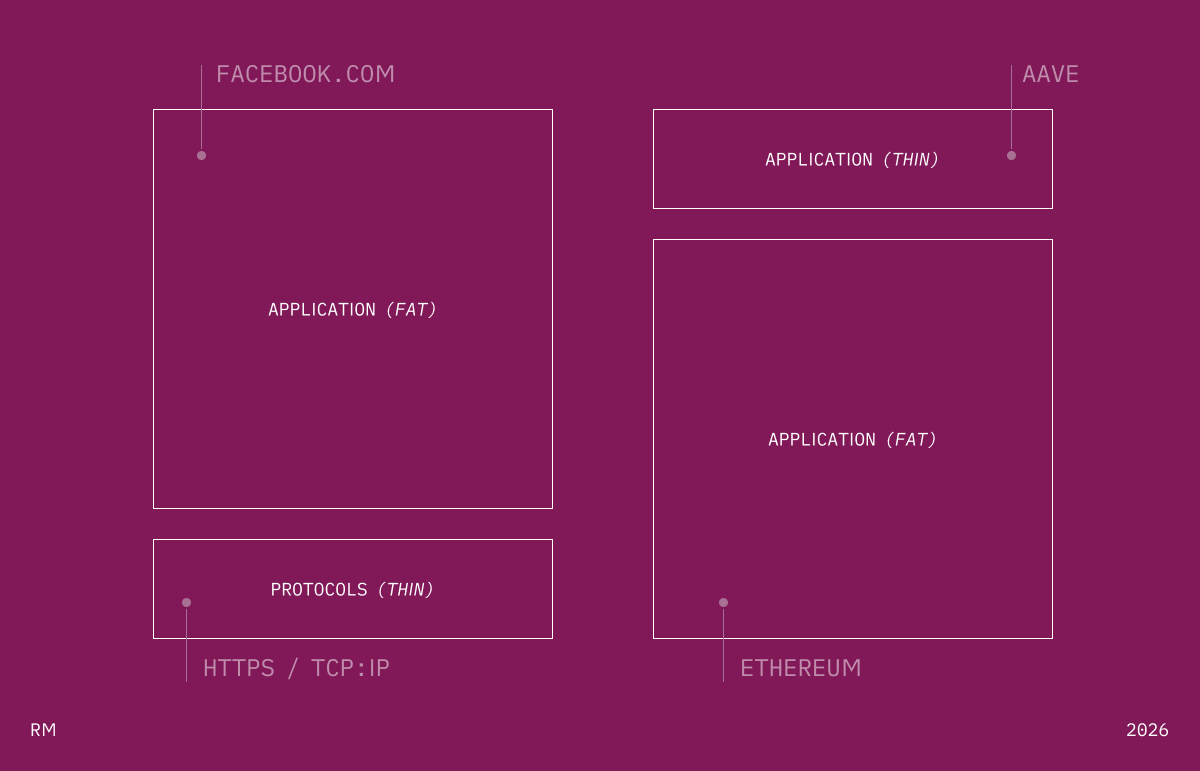

Joel Monegro tried to figure this out for crypto in 2016. The internet had thin protocols, fat applications. TCP/IP created immeasurable value; Google and Facebook captured it. The blockchain flipped it. Fat protocols, thin applications. One essay. One diagram. Somehow that shaped how an entire generation of investors deployed capital.

The thesis looked like this:

Simple. Powerful. And it raised an obvious next question.

The agent stack is forming right now. And it has the same structural question sitting at its center, unanswered.

Two lenses.

Codex (OpenAI), Claude (Anthropic), Gemini (Google). Siri (Apple, lol). One model that does everything. That’s the thesis.

You talk to one agent. It writes your code, manages your calendar, researches your competitors, drafts your contracts, books your flights. It holds your context. It remembers your preferences. It has access to your email, your files, your bank. One relationship. One surface. One throat to choke.

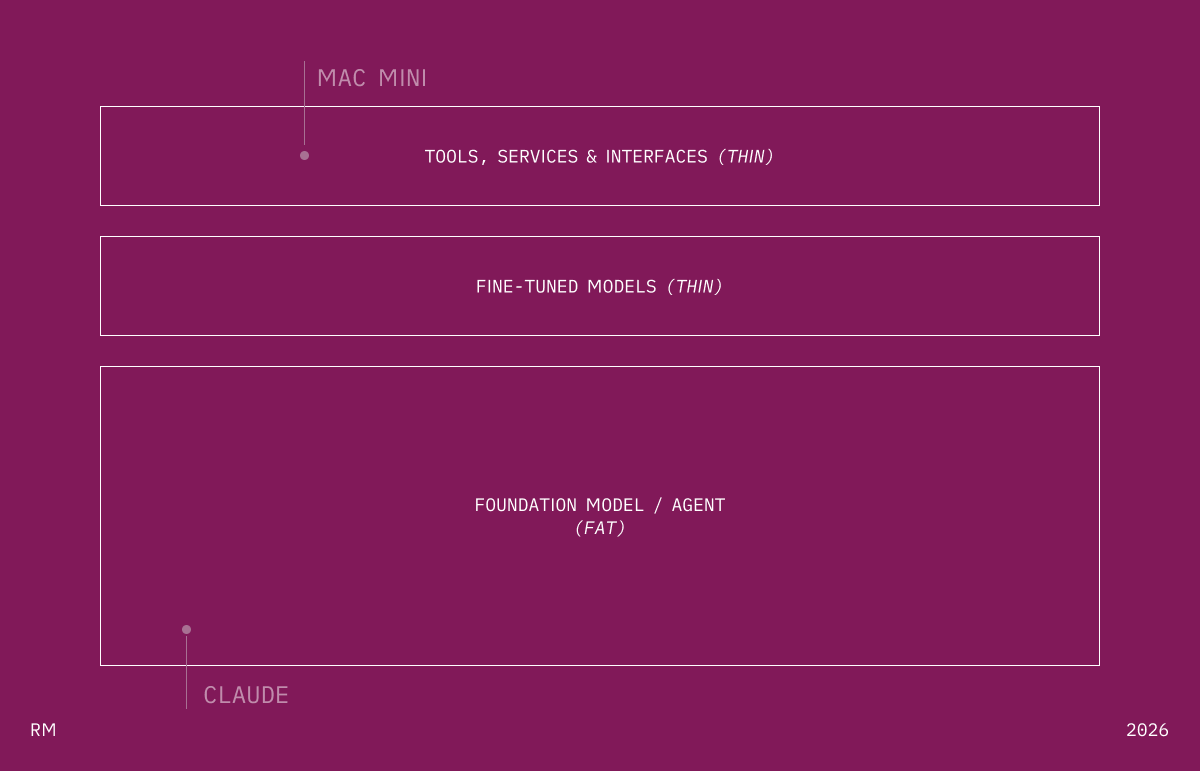

The foundation model provider is the platform.

The value stack looks like this:

Everything beneath the agent gets commoditized.

Tools, services, specialized models — interchangeable backends.

Swappable.

Disposable.

The agent decides what to use and when. The user never knows. Never cares.

Every capability gets absorbed. Six months ago, code generation was a startup. Image generation was a startup. Search was a startup. Deep research was a startup. Now they’re checkboxes in a model release blog post. Feature, feature, feature, acquired, deprecated, built-in.

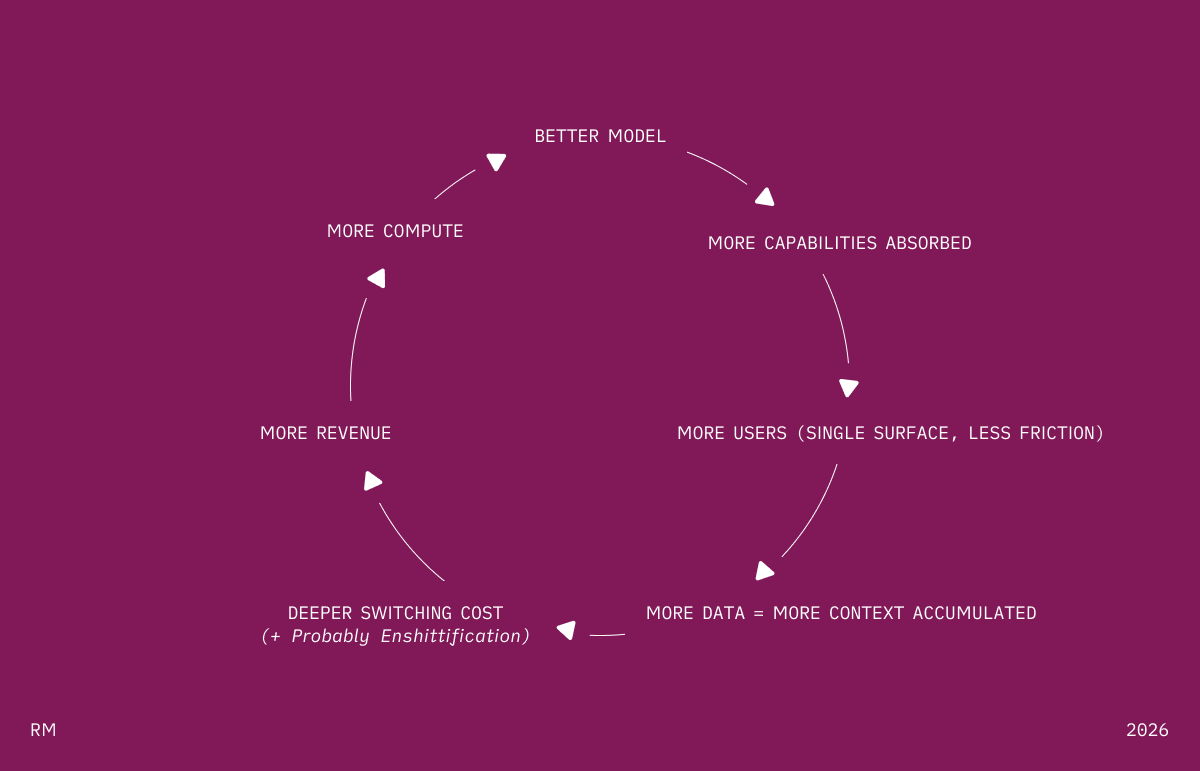

This runs on a flywheel:

Each rotation makes the agent fatter. Each rotation makes the layers beneath it thinner. The moats compound — training costs in the billions, user context that accumulates daily, and models that keep getting better at everything simultaneously, closing the gap on every specialist.

Ask yourself: why would you route a task to a fine-tuned 7B model when the frontier model is 95% as good and already has your entire life in its context window?

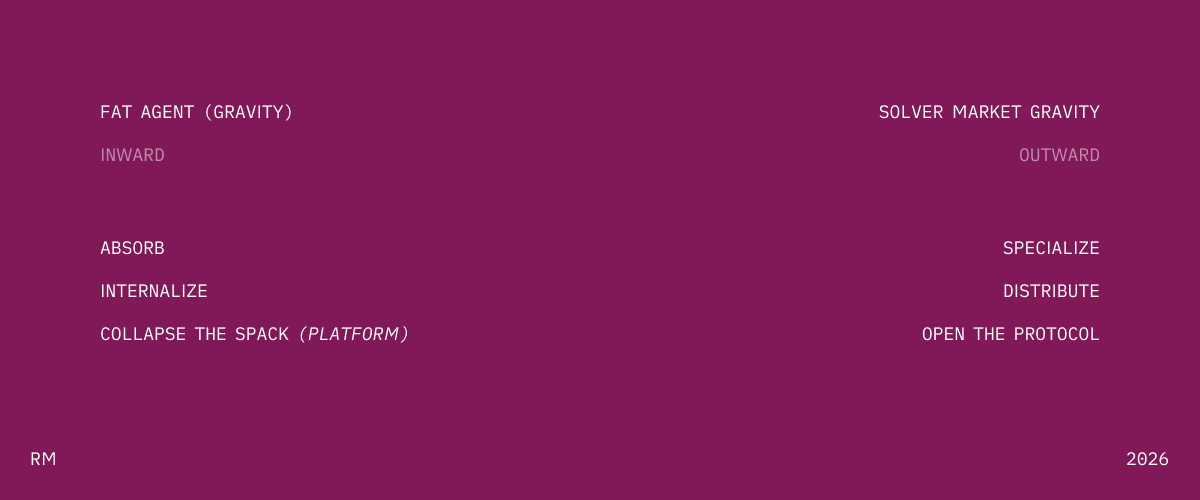

That’s the Fat Agent gravity. It pulls everything inward.

If this lens holds, the implications are brutal. Building a standalone AI product on top of a foundation model isn’t building a company. It’s building a feature that hasn’t been absorbed yet. You’re one model update away from the Coyote Problem — you look down and there’s nothing under your feet.

The winning move is to be the Fat Agent. Or to build something that makes the Fat Agent more powerful — infrastructure, data, integrations — rather than competing with it.

Different premise. No single model — no matter how large, no matter how expensive — can be the best at everything.

And the market will find that gap. Every time.

A 7B model fine-tuned on medical literature can outperform the frontier model at clinical reasoning. A domain-specific coding model trained on your proprietary codebase can write better internal tools than any general-purpose system. A lightweight extraction model optimized for parsing invoices can run faster, cheaper, and more accurately than a model that also needs to write sonnets and debate Kant.

Small. Specialized. Ruthless on the task.

The value stack changes:

No single agent dominates. Solvers compete for every task. Best performance wins the job. No loyalty. No lock-in. No relationship. Pure execution.

Think DeFi. Think solver networks, intent-based systems, MEV markets. Infrastructure where agents compete to execute on behalf of users. Not a platform — a bazaar.

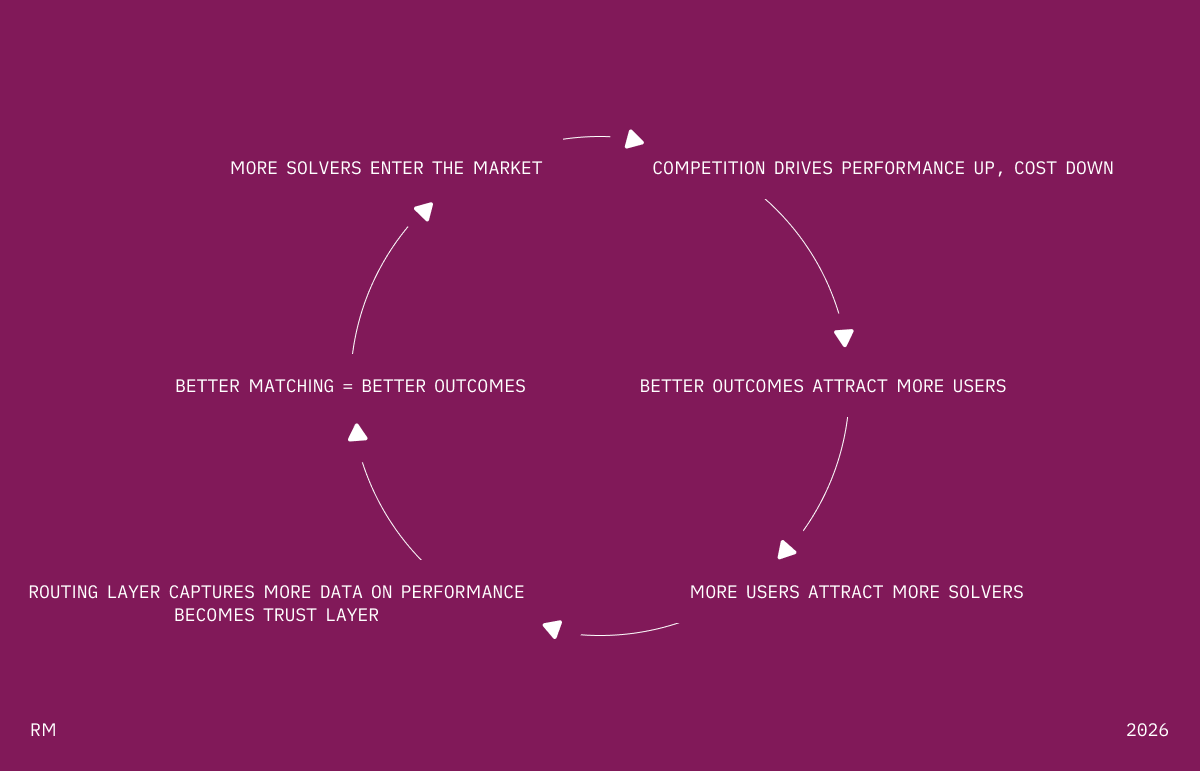

This has its own flywheel:

The flywheel doesn’t compound around a single provider. It compounds around the market itself. The routing layer — the protocol that decomposes tasks, matches them to solvers, evaluates quality, handles failures — gets smarter with every transaction. That’s where the fat accumulates.
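A rough sketch of what that routing layer might look like, to make the loop concrete. Everything here is hypothetical: the `Solver` and `Router` names, the `evaluate` callback, and the smoothing factor `alpha` are assumptions for illustration, not a description of any real protocol. The router ranks solvers by a running quality score, falls through to the next solver on failure, and updates the score after every job, which is the "gets smarter with every transaction" part.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Solver:
    name: str
    domain: str
    run: Callable[[str], str]
    score: float = 0.5  # running quality estimate (hypothetical metric)


class Router:
    """Toy routing layer: match, execute, evaluate, learn."""

    def __init__(self, solvers: List[Solver],
                 evaluate: Callable[[str, str], float],
                 alpha: float = 0.3) -> None:
        self.solvers = solvers
        self.evaluate = evaluate  # returns a quality value in [0, 1]
        self.alpha = alpha        # weight given to the newest observation

    def dispatch(self, domain: str, task: str) -> Tuple[str, str]:
        # Match: best-scoring solvers for this domain go first.
        candidates = sorted(
            (s for s in self.solvers if s.domain == domain),
            key=lambda s: s.score,
            reverse=True,
        )
        for solver in candidates:
            try:
                result = solver.run(task)
            except Exception:
                # Handle failure: penalize and try the next solver.
                self._update(solver, 0.0)
                continue
            # Evaluate quality and fold it into the solver's score.
            self._update(solver, self.evaluate(task, result))
            return solver.name, result
        raise LookupError(f"no solver succeeded for domain {domain!r}")

    def _update(self, solver: Solver, quality: float) -> None:
        # Exponential moving average of observed quality.
        solver.score = (1 - self.alpha) * solver.score + self.alpha * quality


def flaky(task: str) -> str:
    raise RuntimeError("solver is down")


def invoice_parser(task: str) -> str:
    return task.upper()


router = Router(
    [Solver("flaky", "invoices", flaky, score=0.9),
     Solver("parser", "invoices", invoice_parser, score=0.6)],
    evaluate=lambda task, result: 1.0,  # stand-in for a real quality check
)
winner, output = router.dispatch("invoices", "total: 42")
```

In this toy run the highest-ranked solver fails, gets marked down, and the job falls through to the second one. On the next dispatch the ranking has already flipped. No loyalty, no lock-in.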

The structural tailwinds are real. Open-source models closing the gap on specific benchmarks every month. Fine-tuning getting cheaper. Inference costs for small models are a rounding error compared to frontier API calls. And for enterprise — a small model that runs on-prem, costs nothing per query, and is tuned to your exact domain? That’s not a compromise. That’s a preference.

If this lens holds, the opportunity is specialization. Pick a domain. Build the best solver for it. Plug into the marketplace. Or build the marketplace itself — the routing layer, the evaluation infrastructure, the agent-to-agent protocols.

The foundation model providers, in this world, are important but not dominant. One supplier among many in a competitive market for intelligence.

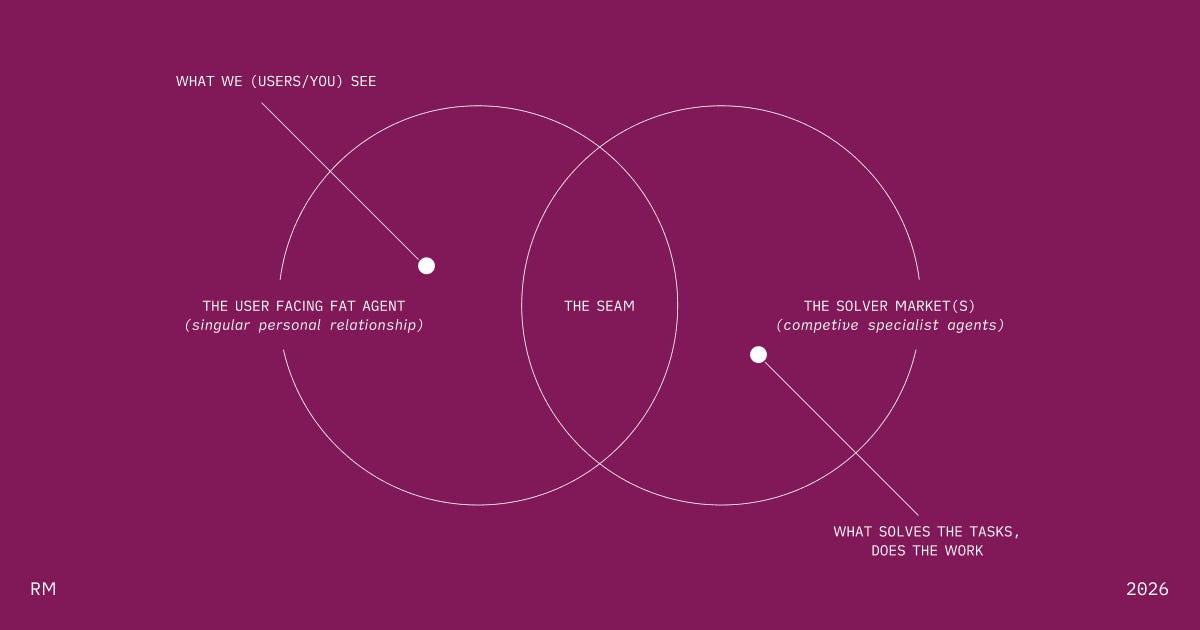

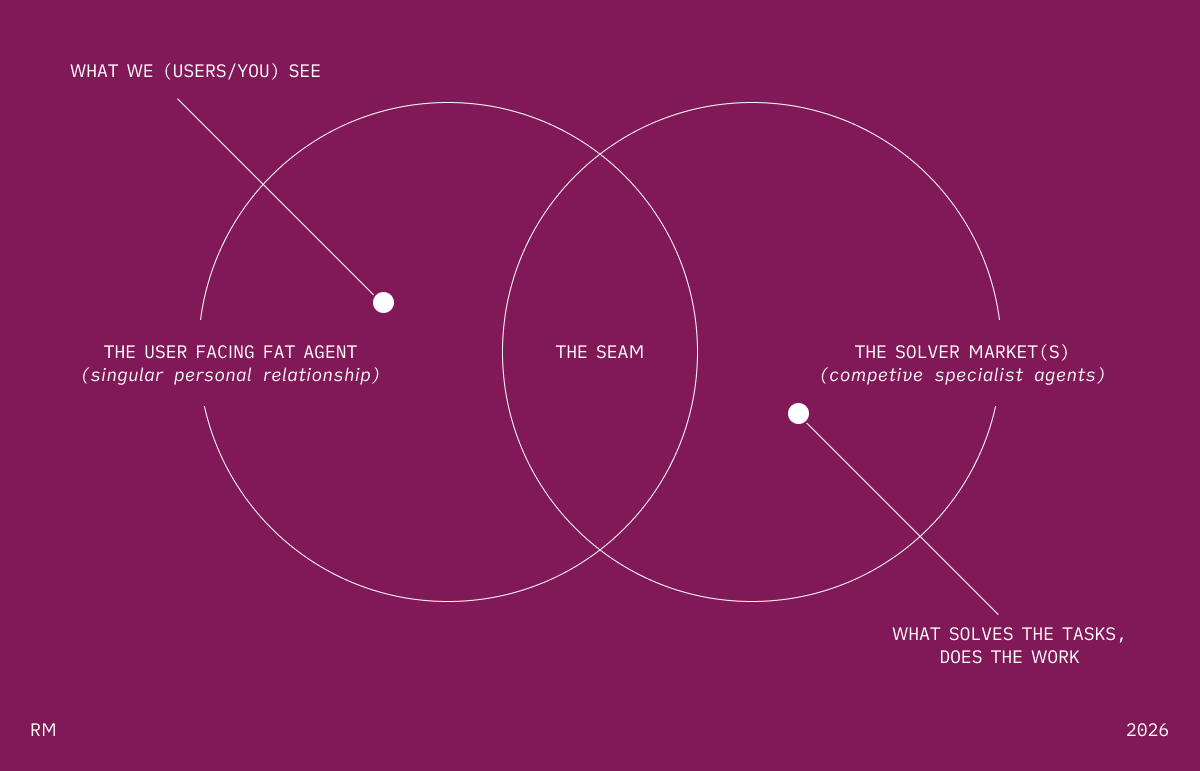

Here’s where it gets interesting. These aren’t competing futures. They’re competing layers.

At the user-facing layer, the Fat Agent probably dominates. People want one surface. One conversation. One agent that knows them. Nobody wants to manually route tasks to specialized models any more than they want to manually select which CDN serves their web request.

At the execution layer, the Solver Market probably dominates. Behind the scenes, the Fat Agent doesn’t actually do everything itself. It decomposes. It routes. It delegates to the best available solver — specialized models, fine-tuned systems, tool-use pipelines, other agents. The user sees one agent. Underneath, a marketplace.
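In code, "one agent on top, a marketplace underneath" could be sketched roughly like this. The `FatAgent` class, the `kind: payload` request format, and the solver functions are all made up for illustration; a real agent would decompose requests with a model, not a string split.

```python
from typing import Callable, Dict, List, Tuple

SolverFn = Callable[[str], str]


class FatAgent:
    """One surface for the user; delegation happens out of sight."""

    def __init__(self, general: SolverFn,
                 specialists: Dict[str, SolverFn]) -> None:
        self.general = general          # the frontier model itself
        self.specialists = specialists  # execution-layer solvers by task kind

    def decompose(self, request: str) -> List[Tuple[str, str]]:
        # Hypothetical convention: steps are separated by ";" and may be
        # tagged as "kind: payload". Untagged steps go to the general model.
        steps = []
        for part in request.split(";"):
            part = part.strip()
            if not part:
                continue
            kind, sep, payload = part.partition(":")
            if sep:
                steps.append((kind.strip(), payload.strip()))
            else:
                steps.append(("general", part))
        return steps

    def handle(self, request: str) -> str:
        outputs = []
        for kind, payload in self.decompose(request):
            # Route to a specialist if one exists, else do it in-house.
            solver = self.specialists.get(kind, self.general)
            outputs.append(solver(payload))
        return "\n".join(outputs)


agent = FatAgent(
    general=lambda task: f"[frontier] {task}",
    specialists={"invoice": lambda task: f"[invoice-solver] {task}"},
)
reply = agent.handle("invoice: parse march.pdf; summarize my week")
```

The user only ever calls `handle`. Which solver did the work never surfaces in the conversation.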

Fat on top. Distributed underneath.

The tension lives at the seam. And the tension is the game.

The Fat Agent wants to internalize everything — fold every specialized capability into the base model so it never needs to route externally. Absorb the solvers. Collapse the stack.

The Solver Market wants to commoditize the Fat Agent — make the orchestration layer open so any frontend can access the best solvers, and no single provider controls distribution.

Two gravities pulling in opposite directions:

OpenAI, Google, and Anthropic are already trying to be fat today. This is not some future vision. Claude is slowly becoming an interface that answers everything. They want to win the user relationship. But they are also starting to win the execution layer. I’m not sure how much air is still left underneath. Specialized sources exist. And persist.

The question for AI is whether the foundation model providers win the collapse, or whether markets prove more efficient and simply better. My belief will always be protocols over platforms. But the game is on.

This is a lens question, not a prediction question. Which lens applies depends on where you sit.

Building at the user layer — the Fat Agent lens is probably right. You’re either a foundation model provider or you’re competing with one. Your moat is context, trust, distribution. Gravity game.

Building at the execution layer — the Solver Market lens is probably right. You’re competing on performance and cost. Your moat is domain expertise, data, optimization. Speed game.

Building at the seam, the orchestration layer that connects Fat Agents to specialized solvers? That might be the most consequential, and still unsolved, piece of infrastructure in the stack. The layer that decides who does what. The market maker of intelligence. Come on, crypto, you’ve wanted this for years.

The internet had thin protocols and fat apps. The blockchain, for a moment, believed it would invert that. The agent stack is still forming. We don’t know yet which layers end up fat and which end up thin. But again, the game is on.

Cheers. RM

This post was written in one session using Claude Opus 4.6 in collaboration with my own agent stack. I dumped in a mess of voice notes, discussion guides, and half-formed bullet points; they attracted each other, and we shaped it together through a few editing passes. The ideas are mine, the speed is new. (I did make the frameworks manually though.)